We have been using Python to analyze data in Jira. We have developed sprint metrics (we call them bucket metrics) based on Jira data as part of our engineering benchmarks application. The graphs we produce helps us during sprint retrospective meetings

Note: This post is written in continuation with the other blog posts on engineering team metrics

An example bucket metric

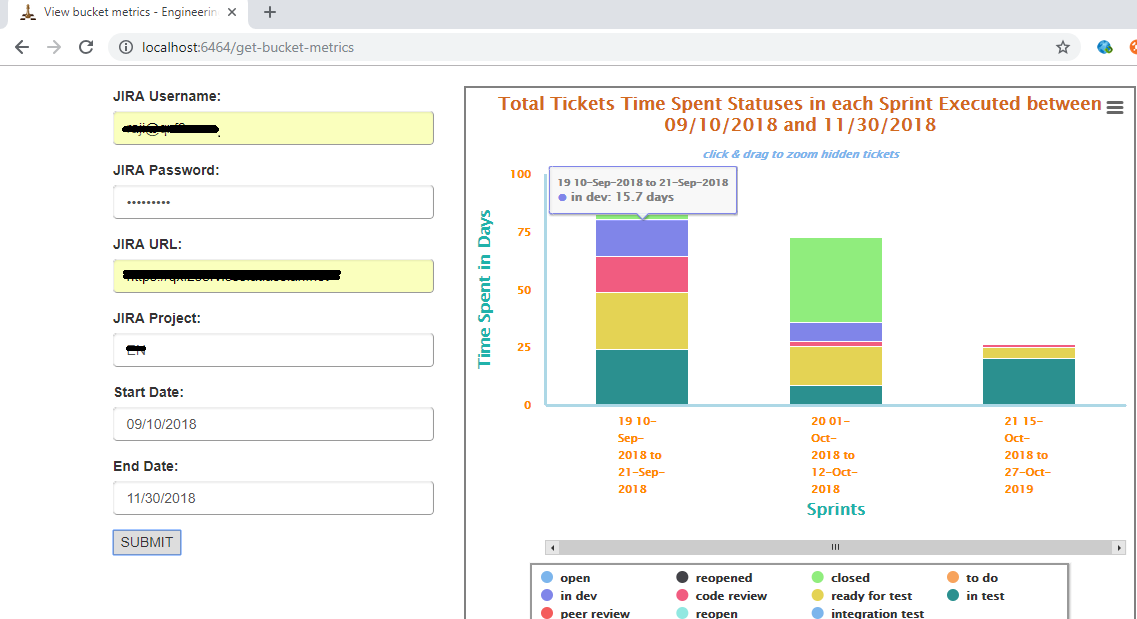

In this post, we will explore one bucket metric – a graph that shows you how much time your team spent during a sprint for each Jira status. As QA, you will find this very useful to combat the feeling that QA is the bottleneck. Often, the graphs have shown that a lot of time is spent on code-review and development and a relatively short amount of time is spent on testing. This bucket metric is graphical data of total tickets history for each sprint that are executed during the selected time period

Background

During the retrospective meetings, a lot of discussions happen based on team members feedback but we miss to address them after the meeting. We thought sprint metrics graph based on Jira sprint data would help us to bring the attention of the people and get the engineering team concerns addressed on time

Example bucket metric graph

Here is an extreme example from one of our projects that was coming to an end. On the X-axis, you see three sprints. On the Y-axis, you see the time spent in man-days.

You notice a few things immediately:

a) the amount of man-days spent is tapering indicating people are getting pulled out of the project

b) the amount of development + code review time spent is relatively low – which is a good sign because it implies the number of bug fixes and last minute changes is low

c) something probably went wrong in the middle sprint because the amount of time tickets spent in ‘ready for test’ was more than the actual testing done! It probably means the QA team was blocked by a fairly critical bug that caused development to jump in and fix something and also caused the QA to pause their testing.

Note: The explanation for the data is more mundane but you don’t need to know all the details of our internal work.

Bucket metrics: technical details

In a previous post, we showed you how to use Python to analyze Jira data. We will build upon that work. To follow along, you need to know we developed two important modules:

a) ConnectJira which contains wrappers for many Jira API calls. The sample code is here

b) JiraDatastore which saves individual ticket information as a pickled file. The sample code is here

In this post, we will introduce you to a backend module called ‘BucketMetrics.py’. As you see in the above screenshot, inputs for this metrics are Jira URL, authentication details, start date, and end date.

How did we logically break it down?

Here is how we went about analyzing this bucket metric from Jira.

1. Get Jira boards associated with the project

result = self.connect_jira_obj.get_jira_project_boards(self.project) jira_project_boards = result['jira_project_boards'] |

2. Get Jira Sprints associated with the board ids

#Get Sprints associated with the board Ids of the project result = self.connect_jira_obj.get_jira_project_sprints(jira_project_boards) |

3. Select sprints which are started or completed in the user given date range filter

if (end >= sprint_start >= start ) or (end >= sprint_end >= start): required_sprints.append({'name':sprint.name,'id':sprint.id}) |

4. Get sprint ticket list

result = self.connect_jira_obj.get_sprint_ticket_list(sprint_id) jira_tickets = result['sprint_ticket_list'] |

5. Get each status start and end dates for each ticket in the sprint

6. Calculate sprint days spent in each status of each ticket

7. Find the sum of days spent in sprint group by status

#Find the sum of days spent in sprint group by status sprint_days_spent_df = df.groupby('status',as_index=False).agg({'sprint_days_spent':'sum'}) |

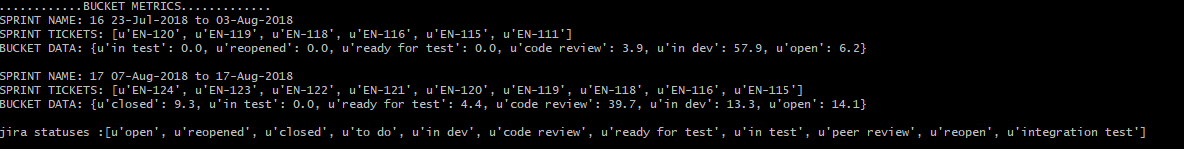

8. Bucket metrics backend data looks like below

9. Added GET api call to render the bucket metrics front end template

if request.method == 'GET': return render_template('get-bucket-metrics.html',title='View bucket metrics') |

10.Added POST api call to interact with the backend for the data processing and format the backend data as per the high chart heap map configuration requirement

#format the data for the high chart graph if error is None and len(bucket_metrics)>0: for status in statuses: sprint_data_series.append({'name':status,'data':[]}) for bucket in bucket_metrics: sprint_categories.append(bucket['sprint_name']) for key,val in bucket['bucket_data'].iteritems(): for data_series in sprint_data_series: if data_series['name'] == key: data_series['data'].append(val) api_response = {'sprint_categories':sprint_categories,'sprint_data_series':sprint_data_series,'statuses':statuses, 'error':error} return jsonify(api_response) |

11. We have used High charts JS library to generate column chart for the jira sprint/bucket metrics

src="https://code.highcharts.com/highcharts.js" src="https://code.highcharts.com/modules/heatmap.js" |

NOTE: While Qxf2 has the habit of open sourcing many of our R&D projects, we will not be open sourcing this code in the near future.

I have around 10 years of experience in QA , working at USA for about 3 years with different clients directly as onsite coordinator added great experience to my QA career . It was great opportunity to work with reputed clients like E*Trade financial services , Bank of Newyork Mellon , Key Bank , Thomson Reuters & Pascal metrics.

Besides to experience in functional/automation/performance/API & Database testing with good understanding on QA tools, process and methodologies, working as a QA helped me gaining domain experience in trading , banking & investments and health care.

My prior experience is with large companies like cognizant & HCL as a QA lead. I’m glad chose to work for start up because of learning curve ,flat structure that gives freedom to closely work with higher level management and an opportunity to see higher level management thinking patterns and work culture .

My strengths are analysis, have keen eye for attention to details & very fast learner . On a personal note my hobbies are listening to music , organic farming and taking care of cats & dogs.