Learn how to use database Testcontainers with your pytest code. In this post, we take a contrived but illustrative example to show you how to use a MySQL Testcontainer with pytest to test an algorithm that works on external data.

Background

Products that run algorithms/analytics on external data (e.g.: data quality tools, JIRA plugins, etc.) present a unique challenge when it comes to testing for regressions. We sometimes need to isolate the data between test cases. As an example, imagine that client A reports a bug (say Bug 1) and it gets fixed. Then a few weeks later client B reports another bug (say Bug 2) and that gets fixed too. But the fix for client B could have caused a regression for client A. The data used to reproduce Bug 1 and Bug 2 will likely not have any overlap. So when we test for regressions, we do need a way to have a test data for Bug 1 and another test data for Bug 2. In other words, we need test data to be isolated between two test cases.

Now, the “correct” approach would be to understand the root cause and create elegant data to reproduce the issue. But having worked in several early stage companies, we can confidently say that this rarely, if ever, happens. A simpler, but much dirtier (and lazier) solution would be capturing the data state that produced the bug and write a test for it. This approach has the drawback of having to maintain good test data. We find Testcontainers to be a good solution here – we can maintain the data easily, interact with the database via code, work with multiple versions of databases as well and additionally integrate well with the pytest workflow.

Note: This is a fairly advanced testing concept. If you are unsure about what has been said so far, it is safer to void the testing technique outlined in this post. Instead get someone to understand the root cause of the bugs and design elegant test data to catch the same.

A quick overview of Testcontainers

Testcontainers is a library that leverages the Docker engine to create disposable containers primarily for testing purposes. These containers can run instances of databases, Selenium web browsers, or any other services your application might need to interact with during tests. It provides lightweight instances of databases within Docker containers. This approach allows a isolated infrastructure for testing which is essential for achieving local testing. Each test interacts with a fresh instance of the database, ensuring test isolation. Another key benefit of using Testcontainers is its ability to automate the setup and cleanup of databases. Simply start your required services (databases, messaging systems etc.) as Docker containers using the Testcontainers API, run your tests and destroy the containers. In this article, we’ll delve into how Testcontainers helped us generate database instances and validate our data.

Our example

To make this post easy to follow along, we are taking up a trivial but illustrative example. Imagine you work at Great Expectations – a popular data quality tools. Great Expectations is used by many companies and provides open sourced ‘expectations’ (or rules) to check if their data looks like they expect. One such expectation is expect_table_row_count_to_be_between to verify that the number of rows in a table is between two values.

Now, as a tester, how do we go about testing this? We would obviously perform some boundary value analysis. For Eg:- For the case where the range is specified as between 5 and 10 rows, here is how you would incorporate boundary value testing, the primary focus will be on

In the following sections, we’ll explore these testing scenarios using Testcontainers

Setup and Configuration

To we begin, ensure that Docker is installed on the machine as TestContainers relies on Docker to run the containers. To install the TestContainers library, use the following pip command:

pip install testcontainers |

Database containers

In this section, we’ll explore how to set up disposable database containers for MySQL and PostgreSQL using Testcontainers. The aim is to configure disposable database containers that can interact correctly with our test scripts. This approach allows us to test against multiple database configurations and versions by simulating real-world scenarios in a controlled environment.

Pytest Fixtures

Fixtures in pytest are functions that run before (and sometimes after) the actual test functions. They are ideal for setting up necessary prerequisites for tests and for cleanup after the test run. Usually, fixture functions are defined in a file conftest.py so that tests from multiple modules can access the fixture function. In our code, we have not used conftest.py to keep the example short.

In our test setup, we have defined a pytest fixture (db_setup) which initializes the database containers. This function serves as a fixture that prepares the database environment for each test scenario. This involves starting new database containers for different database types and versions, creating database schemas, and inserting data based on the test parameters provided. We have used Session pytest fixture Scope here, you’ll have to think through which fixture scope best suits for your needs.

Here is the Fixture code:

@pytest.fixture(scope="session") def db_setup(request): connector, version, data_rows, min_value, max_value = request.param[:-1] # exclude the last item (expected result) expected_result = request.param[-1] # the last item is the expected result container_key = f"{connector}:{version}" metadata = MetaData() if container_key not in containers: # Select container based on connector type if connector == "mysql": container = MySqlContainer(f"{connector}:{version}") elif connector == "postgres": container = PostgresContainer(f"{connector}:{version}") containers[container_key] = container container.start() container = containers[container_key] url = container.get_connection_url() engine = create_engine(url) # Create table user_table = Table( 'user_data', metadata, Column('id', Integer, primary_key=True), Column('username', String(50)), Column('email', String(100)), Column('status', String(10)), extend_existing=True ) # create table metadata.create_all(engine) # Insert data into table with engine.begin() as connection: connection.execute(text("DELETE FROM user_data;")) for i in range(data_rows): connection.execute(user_table.insert(), {'username': f'user{i}', 'email': f'user{i}@example.com', 'status': 'active'}) yield min_value, max_value, url, eval(expected_result) # Cleanup metadata.drop_all(engine) |

This fixture extracts parameters such as connector, version, data_rows, min_value, max_value and expected_results from request.param. Lets take a look into the details of the Python code.

Metadata

Metadata is a collection that includes definitions of tables, columns and constraints. The complete detailed structure of the database is defined inside the metadata object.

metadata = MetaData() |

Creating Tables

Using the metadata, define the structure of a simple users_data table which will be used to insert and validate data

user_table = Table( 'user_data', metadata, Column('id', Integer, primary_key=True), Column('username', String(50)), Column('email', String(100)), Column('status', String(10)), extend_existing=True ) # create table metadata.create_all(engine) |

In the above code,

extend_existing=True parameter tells SQLAlchemy to use an existing table definition if it exists, otherwise it will create a new one based on the schema

metadata.create_all(engine) uses the metadata object to create tables

Inserting Data

This part of the code inserts user data based on the number of rows specified in the data_rows

with engine.begin() as connection: connection.execute(text("DELETE FROM user_data;")) for i in range(data_rows): connection.execute(user_table.insert(), {'username': f'user{i}', 'email': f'user{i}@example.com', 'status': 'active'}) |

The code within this block clears the existing data and inserts the specified number of rows into the table based on the data_rows parameter in our fixture.

Using SQL Dumps with TestContainers

For creating tables, the above function utilizes SQLAlchemy’s Table class defined within a MetaData collection. Instead of defining each table programmatically using the Table class, you can also use an SQL dump. Here is how you can integrate an SQL dump with a TestContainer using Docker volumes:

schema_directory = os.getenv('MYSQL_SCHEMA_PATH') |

def db_setup(request): connector, version, data_rows, min_value, max_value = request.param container_key = f"{connector}:{version}" if container_key not in containers: # Select container based on connector type if connector == "mysql": container = MySqlContainer(f"{connector}:{version}") schema_directory = os.getenv('MYSQL_SCHEMA_PATH') elif connector == "postgres": container = PostgresContainer(f"{connector}:{version}") schema_directory = os.getenv('POSTGRES_SCHEMA_PATH') containers[container_key] = container container.with_volume_mapping(schema_directory, "/docker-entrypoint-initdb.d/") container.start() |

Clean-Up Fixture

@pytest.fixture(scope="session", autouse=True) def cleanup_containers(): yield for container in containers.values(): container.stop() |

This ensures that all the container instances are properly stopped after the test session ends. This helps free up resources on Docker.

Simple Test

Our goal is to test the Great Expectations validation expect_table_row_count_to_be_between with different data variations. The test is to validate row count in the table matches the expected range specified by min_value and max_value. we need to test whether expectations handles lower and upper boundary correctly ensuring that the data validation logic detects data that falls below the acceptable limit.

Parameterized Test

Parameterized testing is a powerful technique used to execute the same test using different inputs. In our test setup, we are using pytest parameterized for passing inputs for different database versions and configurations to test different database interactions.

Parameterizing tests with various data sets is helpful to run the same test with different data sets. In our test setup, pytest paramterize structure looks like below.

@pytest.mark.parametrize("db_setup",[ ("mysql", "8.0.36", 4, 5, 10,"False"), ("mysql", "latest", 5, 5, 10, "True"), ("mysql", "8.0.36", 6, 5, 10, "True"), ("postgres", "9.5", 9, 5, 10, "True"), ("postgres", "9.5", 10, 5, 10, "True"), ("postgres", "latest", 11, 5, 10, "False") ],indirect=True) |

Here is the explanation of each parameter:

Testing with varying Data

We have tested with different data_rows values such as 4, 5, 6, 9, 10, and 11. Each of these tests explores how the expectation behaves with varying amounts of data. For instance:

These tests ensure that the algorithm/application can handle different data scenarios ensuring data validation logic is correctly implemented.

Testing with Database types

Testing with different database types is critical to make sure that validation logic is consistent across of database systems. In our testing, we have tested different database variants by providing different parameters. These parameters include different versions and types of databases, like MySQL and PostgreSQL (“mysql”, “8.0.36” and “postgres”, “latest”). This helps in selecting the appropriate Docker container or configuration for setting up the test database.

Here is our complete test definition

@pytest.mark.parametrize("db_setup",[ ("mysql", "8.0.36", 4, 5, 10,"False"), ("mysql", "latest", 5, 5, 10, "True"), ("mysql", "8.0.36", 6, 5, 10, "True"), ("postgres", "9.5", 9, 5, 10, "True"), ("postgres", "9.5", 10, 5, 10, "True"), ("postgres", "latest", 11, 5, 10, "False") ],indirect=True) def test_row_count_expectation(db_setup): # Define test parameters min_value, max_value, url, expected_result = db_setup # Initialize Great Expectations context context = ge.get_context() datasource_config = { "name": "my_mysql_datasource", "class_name": "Datasource", "execution_engine": { "class_name": "SqlAlchemyExecutionEngine", "connection_string": url }, "data_connectors": { "default_runtime_data_connector_name": { "class_name": "RuntimeDataConnector", "batch_identifiers": ["default_identifier_name"], }, }, } # Create or update datasource context.add_datasource(**datasource_config) batch_request = RuntimeBatchRequest( datasource_name="my_mysql_datasource", data_connector_name="default_runtime_data_connector_name", data_asset_name="user_data", runtime_parameters={"query": "SELECT * FROM user_data"}, batch_identifiers={"default_identifier_name": "default_identifier"}, ) # Create or update expectation suite expectation_suite_name = "test_suite" context.add_or_update_expectation_suite(expectation_suite_name="test_suite") # Get validator validator = context.get_validator( batch_request=batch_request, expectation_suite_name=expectation_suite_name ) # Run validation result = validator.expect_table_row_count_to_be_between(min_value=min_value, max_value=max_value) # Check the validation result and print messages accordingly if result.success == expected_result: print(f"Test passed: Expected {expected_result}, got {result.success}") else: print(f"Test failed: Expected {expected_result}, got {result.success}") assert False, f"Expected {expected_result}, got {result.success}" |

Test Results

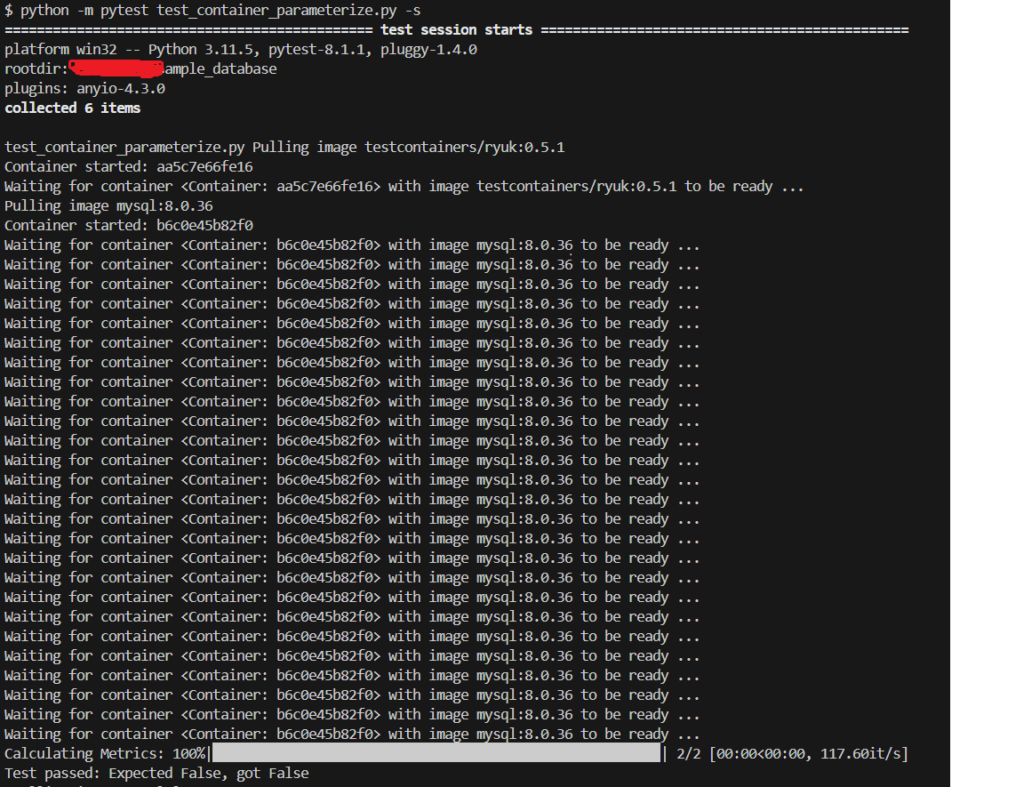

You can run the test using pytest command

python -m pytest test_container_parameterize.py -s |

The output:

A word of caution

This testing technique is NOT for everyone. We cannot say this enough because as experienced testers, we can easily imagine this technique being misused. You obviously need to have a good understanding how to test. But you should also have a sense of how hard it is to maintain data specific tests. For instance, creating test containers for small tests might introduce unnecessary overhead. So, understanding when and where to apply this technique is important for maintaining good testing practices. If you have never had to deal with such problems, we strongly recommend you find simpler solutions.

Hire technical testers from Qxf2!

Qxf2 is the home of technical testers. Our engineers have a diverse skill set and know several testing techniques that go well beyond test automation. We understand your product, the value it provides to your users and observe the tendencies of your engineering team. We then figure out which tests and testing techniques are suitable for your context. To hire testers from Qxf2, please get in touch.

References

1. Testcontainers – Getting Started

2. testcontainers-python — testcontainers 2.0.0 documentation

I am an experienced engineer who has worked with top IT firms in India, gaining valuable expertise in software development and testing. My journey in QA began at Dell, where I focused on the manufacturing domain. This experience provided me with a strong foundation in quality assurance practices and processes.

I joined Qxf2 in 2016, where I continued to refine my skills, enhancing my proficiency in Python. I also expanded my skill set to include JavaScript, gaining hands-on experience and even build frameworks from scratch using TestCafe. Throughout my journey at Qxf2, I have had the opportunity to work on diverse technologies and platforms which includes working on powerful data validation framework like Great Expectations, AI tools like Whisper AI, and developed expertise in various web scraping techniques. I recently started exploring Rust. I enjoy working with variety of tools and sharing my experiences through blogging.

My interests are vegetable gardening using organic methods, listening to music and reading books.