As testers, we are always looking for tools that can enhance our testing. Our go-to sources are usually defect tracking tools, exploratory testing of the product, and documentation. While these remain important, what if we could gain a fresh perspective by exploring development activity? How might that help us align our testing efforts more effectively? To find out, I performed Git log analysis and uncovered insights that can significantly benefit testing. Although Git logs are primarily a developer’s domain, they hold valuable information for testers too: the most modified files, the frequency and size of commits, and more.

Using PyDriller and the GitHub API, I built a framework to extract insights from any Git repository. Its outputs come with meaningful inferences that shed light on development patterns. In this blog post, I will share the insights that my colleagues at Qxf2 and I developed through Git log analysis.

The code for this work is available in this GitHub repo.

Why Git logs?

Git logs track every change in a repository, offering testing teams a deep view into the development process. To make this data actionable, testers need it distilled into a few essential details, so we developed a set of insights aimed at testing teams. They cover frequently changed files, patterns in PR review times, author contributions, and merge activity. Together they offer a comprehensive view of the development landscape, helping testers prioritize testing efforts, write better test cases, communicate with developers, and give useful feedback on code quality. The following sections walk through each insight.

Insights from Git log analysis

While developing the insights, we focused on the inferences testers can draw from each one. Rather than just showing reports, we believed a one- or two-line summary alongside each report would help testers in their work.

Each insight takes inputs such as a start date, an end date, and the GitHub repository to analyze. I have illustrated every insight with a run against Qxf2’s Page Object Model framework.
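For readers who want to reproduce the inputs, here is a minimal sketch of how a start date, an end date, and a repository could be turned into a list of commits with PyDriller. It is not the code from our repo. The function name `collect_commits` and the plain-dict record shape are hypothetical choices made for illustration. PyDriller is imported inside the function, so the date check works even without it installed.

```python
from datetime import datetime


def collect_commits(repo: str, start: datetime, end: datetime) -> list[dict]:
    """Return simplified commit records for `repo` between `start` and `end`.

    Hypothetical helper: the record keys below are an illustrative choice,
    not the format used in the Qxf2 repository.
    """
    if start > end:
        raise ValueError("start date must not be after end date")

    # Imported lazily so the validation above runs without PyDriller.
    from pydriller import Repository

    records = []
    # `repo` may be a local path or a remote URL; PyDriller clones remote
    # URLs into a temporary folder before traversing them.
    for commit in Repository(repo, since=start, to=end).traverse_commits():
        records.append(
            {
                "hash": commit.hash,
                "author": commit.author.name,
                "date": commit.committer_date,
                "is_merge": commit.merge,
                "files": [m.filename for m in commit.modified_files],
            }
        )
    return records
```

Usage would look like `collect_commits("https://github.com/qxf2/qxf2-page-object-model", datetime(2023, 1, 1), datetime(2023, 12, 31))`. Cloning a large remote repository can be slow, so pointing at a local clone is often more practical.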

1. Top Touched Files: Identify areas of the code that are changing frequently

The Top Touched Files insight shows the most actively modified areas of the repository. Frequently changed files often point to critical features or functionality under active development. For testers, this serves as a guide to prioritize testing and to ensure thorough coverage of the areas that matter most during development.
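At its core, this insight is a count of how many commits touched each file. The sketch below shows that counting step on a small, made-up commit list. It assumes each record carries a `"files"` list, like the hypothetical records a PyDriller loop could produce. It counts a file at most once per commit, which is one reasonable choice rather than necessarily the one our framework makes.

```python
from collections import Counter


def top_touched_files(commits, n=5):
    """Rank files by how many commits modified them.

    `commits` is an iterable of records with a "files" list (an assumed,
    illustrative shape). Each file is counted at most once per commit.
    """
    counts = Counter()
    for commit in commits:
        counts.update(set(commit["files"]))
    return counts.most_common(n)


# Toy data for illustration only, not taken from a real repository.
commits = [
    {"files": ["Base_Page.py", "conftest.py"]},
    {"files": ["Base_Page.py"]},
    {"files": ["Base_Page.py", "README.md"]},
    {"files": ["conftest.py"]},
]
print(top_touched_files(commits, n=2))  # → [('Base_Page.py', 3), ('conftest.py', 2)]
```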

Let me share an example from my experience. I was working with a startup to help kickstart their automation journey, and exploratory testing and defect tracking were my initial go-to strategies. In hindsight, insights into development activity would have helped. The Top Touched Files insight would have guided me to prioritize automating the modules that were constantly evolving. Focusing on these dynamically changing parts not only catches defects early but also keeps automated tests effective as the application evolves. This firsthand experience shows the practical value of the Top Touched Files insight for testers.

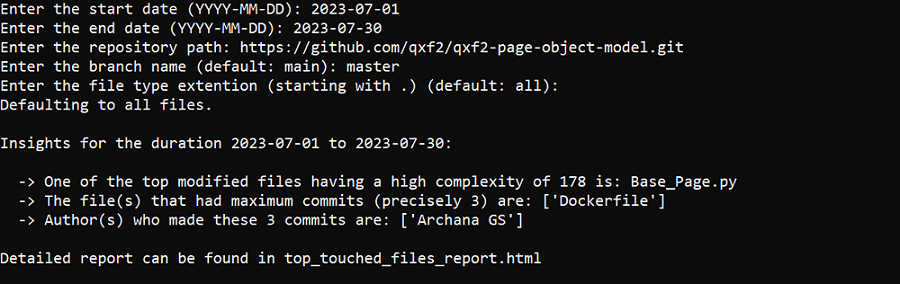

Here is a run of the insight, using Qxf2’s Page Object Model framework as the example.

Looking at the output, we find that ‘Base_Page.py’ is both highly complex and frequently modified, which signals its significance in the codebase. It makes a good starting point, especially in scenarios like the one described above, where an automation framework is being built.
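Combining change frequency with complexity is often called a "hotspot" analysis. PyDriller exposes a `complexity` value on each modified file, which is cyclomatic complexity computed with Lizard and can be `None` for unsupported languages. The sketch below ranks files by touches multiplied by complexity. This scoring rule is an assumption chosen for illustration. Our framework may combine the two signals differently, and the numbers in the example are made up.

```python
def hotspots(touch_counts, complexity, n=3):
    """Rank files by touches * complexity (an illustrative hotspot score).

    touch_counts: {filename: number of commits that modified it}
    complexity:   {filename: cyclomatic complexity, or None if unknown}
    Files without a complexity value are skipped.
    """
    scored = [
        (name, touches * complexity[name])
        for name, touches in touch_counts.items()
        if complexity.get(name) is not None
    ]
    # Highest score first; ties broken alphabetically for stable output.
    scored.sort(key=lambda item: (-item[1], item[0]))
    return scored[:n]


# Made-up inputs for illustration only.
touches = {"Base_Page.py": 12, "conftest.py": 9, "README.md": 15}
cc = {"Base_Page.py": 40, "conftest.py": 6, "README.md": None}
print(hotspots(touches, cc))  # → [('Base_Page.py', 480), ('conftest.py', 54)]
```

A product is a simple way to reward files that score high on both axes. A file that changes often but is trivial, or one that is complex but stable, ends up lower in the ranking.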

2. PR Review time: Comprehend development pace

The PR Review Time insight provides a snapshot of how long pull requests take to be reviewed on average, which helps testers understand the development pace. Knowing the average review time lets testers plan their work to match the pace of code changes. It also helps them anticipate when new features or fixes will be ready for testing, which improves coordination between development and testing teams. Conversely, long review times directly affect the testing schedule. Testers can expect delays in receiving new features or fixes and adjust their testing plans accordingly. This proactive approach prevents unrealistic testing expectations and helps manage stakeholders’ timelines more effectively.
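Pull request objects from the GitHub REST API include `created_at` and `merged_at` timestamps, and `merged_at` is `null` for PRs that were closed without merging. The sketch below treats "review time" as the time from creation to merge and averages it over merged PRs. That definition is an assumption. Our framework might measure something else, such as time to first review. Fetching the PRs from the API is left out, and the sample PRs are made up.

```python
from datetime import datetime, timedelta
from statistics import mean

GITHUB_TS = "%Y-%m-%dT%H:%M:%SZ"  # timestamp format used by the GitHub REST API


def review_hours(pull):
    """Hours from PR creation to merge, or None if the PR was not merged."""
    if not pull.get("merged_at"):
        return None
    opened = datetime.strptime(pull["created_at"], GITHUB_TS)
    merged = datetime.strptime(pull["merged_at"], GITHUB_TS)
    return (merged - opened) / timedelta(hours=1)


def average_review_hours(pulls):
    """Mean creation-to-merge time over merged PRs (None if there are none)."""
    durations = [h for h in map(review_hours, pulls) if h is not None]
    return mean(durations) if durations else None


# Made-up PR data for illustration only.
pulls = [
    {"created_at": "2024-01-01T09:00:00Z", "merged_at": "2024-01-01T21:00:00Z"},
    {"created_at": "2024-01-02T09:00:00Z", "merged_at": "2024-01-04T09:00:00Z"},
    {"created_at": "2024-01-03T09:00:00Z", "merged_at": None},  # closed unmerged
]
print(average_review_hours(pulls))  # → 30.0
```

A median is more robust than a mean if a few PRs sit open for weeks. `statistics.median` can be swapped in without other changes.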

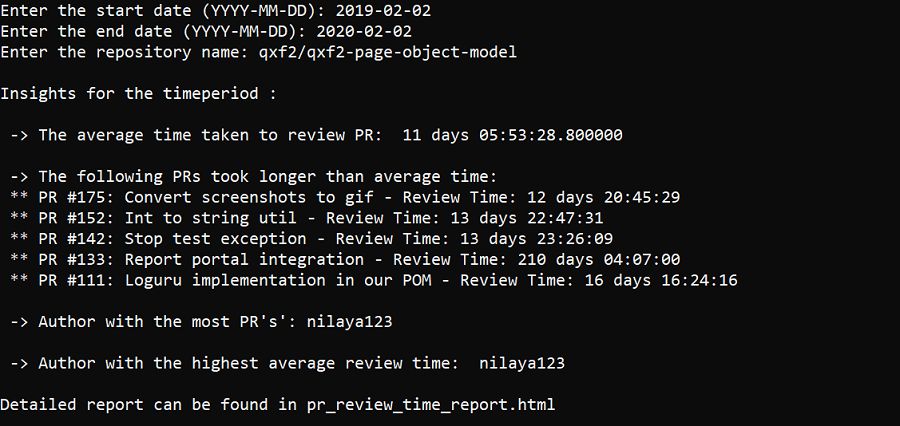

Here is a run of the insight:

Take, for instance, a scenario where a new tester joins the team. As they familiarize themselves with the development environment, understanding the PR Review time can help them gain insights into the team’s rhythm of code review and deployment. For example, if the PR Review time consistently indicates quick and efficient reviews, the new tester can proactively prepare for upcoming testing cycles, knowing that features and fixes are likely to be ready promptly.

3. Author Bias: Recognize code contribution patterns

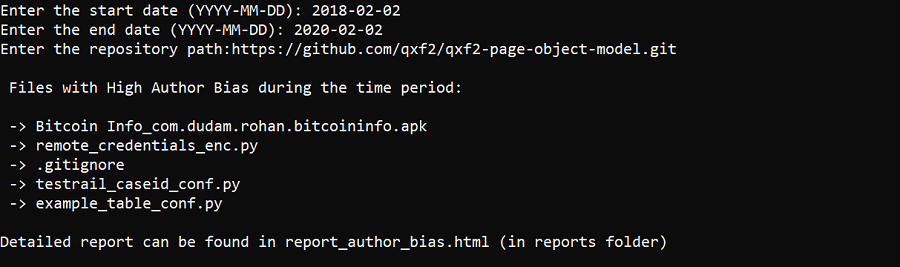

The Author Bias insight analyzes the distribution of contributions within files, quantified by Author Entropy. A higher value indicates evenly distributed contributions, while a lower value suggests concentration among one or a few authors. This analysis unveils potential patterns in the development team, offering insights into code contributions and authorship dynamics.

Files with high Author Bias may indicate areas where collaboration is limited. Testers, within their capacity, can underscore the importance of collective understanding, for example by initiating discussions or knowledge-sharing sessions during team meetings. Concentrated contributions also become a risk during attrition, since a file understood by only one or two people is hard to maintain once they leave. Testers can anticipate such gaps in understanding and documentation, push proactively for critical code areas to be documented, and help ensure a smoother transition during team changes.

To calculate Author entropy, I referred to this paper.

Testers can treat files with low Author Entropy as points of interest, since specific authors are likely to be contributing to them consistently. The output does not list author details directly. Testers can still explore these files to understand their complexity and potential risks, and to judge whether collaborative effort is needed for comprehensive testing. This lets testers proactively address challenges related to code understanding, knowledge distribution, and collaboration.
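Author Entropy is usually based on Shannon entropy over each author’s share of a file’s contributions, H = -Σ pᵢ log₂ pᵢ. Here pᵢ is author i’s fraction of the changes. The sketch below implements that standard form, a version normalized to the 0 to 1 range, and a helper that lists the most concentrated files. The referenced paper may weight or normalize contributions differently. The choice of "amount" (lines changed vs. commits) and the sample data are assumptions for illustration.

```python
import math


def author_entropy(contributions):
    """Shannon entropy (in bits) of one file's contributions per author.

    contributions: {author: amount}, e.g. lines changed or commits made.
    0.0 means a single author did all the work; higher values mean the
    work is spread more evenly.
    """
    total = sum(contributions.values())
    if total == 0:
        return 0.0
    entropy = 0.0
    for amount in contributions.values():
        if amount > 0:
            p = amount / total
            entropy -= p * math.log2(p)
    return entropy


def normalized_author_entropy(contributions):
    """Entropy divided by its maximum, log2(number of authors), giving 0..1."""
    authors = [a for a, amount in contributions.items() if amount > 0]
    if len(authors) < 2:
        return 0.0
    return author_entropy(contributions) / math.log2(len(authors))


def most_concentrated(per_file, n=5):
    """Files whose contributions are least evenly spread (lowest entropy first).

    per_file: {filename: {author: amount}}
    """
    scored = sorted(
        (normalized_author_entropy(c), name) for name, c in per_file.items()
    )
    return [name for _, name in scored[:n]]


# Made-up contribution data for illustration only.
per_file = {
    "Base_Page.py": {"alice": 90, "bob": 10},
    "conftest.py": {"alice": 50, "bob": 50},
    "utils.py": {"carol": 40},
}
print(most_concentrated(per_file, n=2))  # → ['utils.py', 'Base_Page.py']
```

Normalizing matters when comparing files with different numbers of authors. Four authors sharing a file equally give 2 bits of raw entropy, while two give 1 bit, yet both are perfectly even.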

4. Size of PRs: Gauge the impact and complexity of code changes

The Size of PRs insight provides a detailed analysis of the quantity of changes introduced in pull requests over a specified period. While it’s important to note that the number of lines changed doesn’t inherently indicate complexity, it serves as a valuable metric for testers to gauge the scale of modifications. For instance, a PR with a substantial number of lines changed could involve widespread modifications.

Understanding the size of PRs allows testers to make informed decisions about the potential testing effort required. Testers can identify trends and patterns in PR sizes, helping them assess the impact on testing timelines and plan testing strategies accordingly. Rapid changes with smaller PRs might suggest a fast-paced development cycle, requiring quick testing turnarounds. On the other hand, infrequent but larger PRs may allow the tester to adopt a more thorough testing approach. This alignment ensures that the tester’s pace matches the overall development rhythm.
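When a single pull request is fetched from the GitHub REST API, the response includes `additions` and `deletions` counts. The sketch below adds them up as the PR size and sorts PRs into small, medium, and large buckets. The 50 and 500 line thresholds are arbitrary illustrations rather than values from our framework, and the sample PRs are made up.

```python
from collections import Counter


def pr_size(pull):
    """Total lines changed in a PR: additions plus deletions."""
    return pull["additions"] + pull["deletions"]


def size_bucket(lines_changed, small=50, large=500):
    """Label a PR as small, medium or large.

    The 50/500 thresholds are hypothetical; tune them for your team.
    """
    if lines_changed <= small:
        return "small"
    if lines_changed <= large:
        return "medium"
    return "large"


def size_summary(pulls):
    """Count how many PRs fall into each size bucket."""
    return Counter(size_bucket(pr_size(p)) for p in pulls)


# Made-up PR data for illustration only.
pulls = [
    {"additions": 10, "deletions": 5},
    {"additions": 300, "deletions": 120},
    {"additions": 900, "deletions": 40},
]
print(dict(size_summary(pulls)))  # → {'small': 1, 'medium': 1, 'large': 1}
```

Tracking this summary per week or per sprint shows the trend described above. Many small PRs suggest quick testing turnarounds, and occasional large ones call for deeper test passes.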

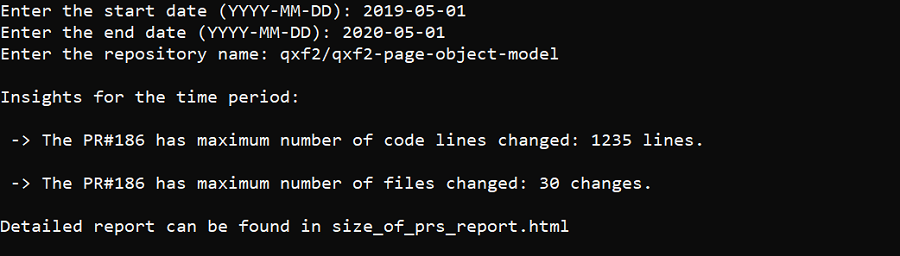

Here is a run of this insight.

Testers can use this information to tailor their testing approach. Larger PRs may introduce more extensive modifications that call for thorough, comprehensive testing. Knowing PR sizes therefore helps testers allocate resources efficiently and match test coverage to the size and complexity of code changes.

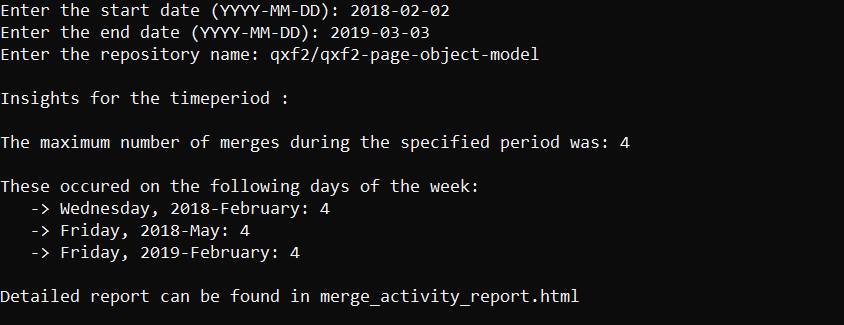

5. Merge Activity: Understand the rhythm of code merges

The Merge Activity insight provides a snapshot of the most active days for code merges in a given timeframe, offering insights into the frequency and timing of code integration within the development workflow. While not a game-changer, this insight equips testers with a basic understanding of the development pulse. It helps testers anticipate potential peaks in code changes, allowing for smoother collaboration with developers and more informed resource planning.

For instance, if Fridays consistently witness increased merge activity, testers might allocate more testing resources on these days to ensure prompt validation of new code changes. It’s a subtle insight that can aid in optimizing testing efforts to align with the team’s working patterns.

Executives can leverage the merge activity insight to gain a broad understanding of the team’s productivity trends. For example, observing a consistent surge in merges on specific days might prompt executives to align release cycles or team meetings accordingly, fostering a more streamlined development process.
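Counting merges per weekday, as described in this section, takes only a few lines once commit dates are available. The sketch below assumes `(committed_at, is_merge)` pairs, for example built from PyDriller’s `commit.committer_date` and `commit.merge`. One caveat applies: repositories that squash-merge or rebase-merge PRs on GitHub produce no merge commits. For those, the PRs’ `merged_at` timestamps from the GitHub API are a better source. The sample dates are made up.

```python
from collections import Counter
from datetime import datetime

# Fixed English names, so the output does not depend on the system locale.
WEEKDAYS = ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday"]


def merges_by_weekday(commits):
    """Count merge commits per weekday.

    commits: iterable of (committed_at: datetime, is_merge: bool) pairs.
    The weekday is taken from the datetime as given (for PyDriller this is
    the committer's local time).
    """
    counts = Counter(
        WEEKDAYS[committed_at.weekday()] for committed_at, is_merge in commits if is_merge
    )
    # Return all seven days in calendar order, including days with no merges.
    return {day: counts.get(day, 0) for day in WEEKDAYS}


# Made-up commit data for illustration only.
sample = [
    (datetime(2024, 1, 5, 16, 30), True),   # Friday
    (datetime(2024, 1, 8, 11, 0), True),    # Monday
    (datetime(2024, 1, 9, 10, 0), False),   # Tuesday, not a merge
    (datetime(2024, 1, 12, 17, 45), True),  # Friday
]
print(merges_by_weekday(sample))
```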

Conclusion

To summarize, the insights derived from Git log analysis offer valuable glimpses into development dynamics and give testers practical information to improve their testing. From prioritizing testing based on frequently modified files to anticipating challenges in understanding code contributions, testers can use these insights to navigate an evolving codebase more efficiently. These insights help testers align their efforts with the pulse of development, whether the goal is identifying collaborative opportunities, mitigating risks related to knowledge distribution, or understanding the scope of code changes. They are not a silver bullet, but they contribute to a more informed and proactive testing mindset in day-to-day work.

Hire Qxf2!

Testers from Qxf2 are constantly seeking better solutions to everyday testing problems. Engineers at all our client engagements rave about how easy and pleasant it is to work with us. If you are looking for technical test engineers who go well beyond standard test automation, reach out to us. We understand that integrating good testing is a socio-technical problem, and as this post demonstrates, we have several ideas and solutions to help improve the testing IQ of engineering teams.

I have been in the IT industry for 9 years. I worked as a curriculum validation engineer at Oracle for 5 years, validating various courses on products developed by them. Before Oracle, I worked at TCS as a manual tester. I like testing: it’s diverse, challenging, and satisfying because we can help improve the quality of software and provide a better user experience. I also wanted to try my hand at writing and got an opportunity at Qxf2 as a Content Writer before transitioning to a full-time QA Engineer role. I love doing DIY crafts, reading books and spending time with my daughter.