This post extends my previous exploration of conducting data validation tasks using Large Language Models like ChatGPT. To provide context, at Qxf2, we execute a series of data quality tests using Great Expectations. Initially, we explored the possibility of employing ChatGPT for these validations, but it faced challenges in performing them effectively. Now, with the recent release of more advanced OpenAI models, I revisited this work, using GPT-4 for the validations. Unfortunately, I observed no improvement; it still struggled with numerical validations. In response, I turned to the Assistants API and created a new Assistant with Code Interpreter capability. This approach proved effective for most cases. In this blog post, I will share my experiments and observations, showing how we can use AI for performing data validation with the help of Assistants API.

Overview of Data Validation Use Cases

I worked on two specific use cases. The first involves identifying outliers in a dataset, while the second focuses on validating if all numbers in a dataset fall within the range of 0 and 1. These tests were executed on Qxf2’s public GitHub repositories data. For additional context, you can refer to my blog post, Data Validation using Great Expectations with a Real-World Scenario. This blog post is part of a series aimed at assisting testers in implementing practical tests with Great Expectations for effective data validation.

Challenges Faced with GPT-4

Before I show you how to perform data validation with the Assistants API, let me share my initial experience with the GPT-4 model (gpt-4-1106-preview). For this, I interacted directly with the model (just like you would in a browser) using a script. In my earlier work, I had developed this script. It accepts two inputs: the system prompt which is the high level instruction and input_message where I am passing the numerical dataset I wish to validate. To make it work with GPT-4, I revised the script. The code can be found here.

My first test was performing numerical validation. The objective was to verify that all the numbers in the provided dataset fell within the range of 0 and 1. The data for this comes from Qxf2’s GitHub repositories which comprised approximately 50 rows of floating-point numbers.

My system prompt looked like the following. I essentially adopted a prompt that had proven effective in my prior experiments and added more details to enhance its clarity and specificity. Also, with this latest model, I utilized the feature of returning response in the form of JSON. For that I needed to include the word JSON in the prompt.

def setUp(self) -> None: """ Set the system prompt for the LLM and get the dataset """ self.system_prompt= ''' You are an expert in numerical data validation. You will receive a dataset formatted as {"dataset": [list of numbers]}. The list of numbers are all float values. Your task is to analyze the dataset and determine its validity based on the following criteria: - All values in the dataset must be between 0.0 and 1.0 (inclusive). Return a JSON object with two keys: 1. "valid": true if the dataset meets the criteria, false otherwise 2. "failed_values": a list containing numbers that do not satisfy the condition that is numbers that are less than 0.0 or greater than 1.0 ''' |

I utilized pandas to read the input values from a CSV file. Afterward, I transformed the data into a list of numbers, and supplied it to the model for processing. The complete code can be found here.

Overview of Attempts

With the script in place, I proceeded to test the GitHub Scores values stored in a CSV file using the model. All these score values must lie between 0 and 1.

After just a couple of attempts, it became evident that the results from the model were still unsatisfactory and lacked reliability.

1. Happy Path – Unsuccessful

In the initial attempt, I provided a list of numbers where none fell below 0.0 or exceeded 1.0. Surprisingly, the model responded with a couple of numbers well within the 0.0 and 1.0 range.

Here is the response of the model.

GPT response: {

“valid”: false,

“failed_values”: [0.0148, 0.0135, 0.0205, 0.0604, 0.0146, 0.1452, 0.0085, 0.1096, 0.0174, 0.0115, 0.0166, 0.0073, 0.0378]

}

2. Two numbers Failing the Condition – Unsuccessful

Following that, I increased two of the numbers above 1.0, anticipating that the model would accurately identify them. Although it did catch those two, it also provided a few other numbers that it deemed to be above 1.0.

GPT response: {

“valid”: false,

“failed_values”: [1.0016, 1.0034, 0.1452, 0.1096, 0.0604, 0.0378]

}

3. Decreased Dataset Size – Unsuccessful

Suspecting that the large number of values might be a factor, I reduced the dataset to contain only half of the values. However, the outcome remained unchanged. It still provided values that it thought were above 1.0 even though they weren’t.

GPT response: {

“valid”: false,

“failed_values”: [1.0016, 0.0205]

}

From the above attempts, it becomes apparent that the model’s responses were neither accurate nor consistent.

I then opted to experiment with the Assistants API, allowing me to construct an AI assistant with Code Interpreter capability enabled. This approach yielded better results.

Introduction to Building AI Assistants

In this section, I will briefly outline the steps for building an AI Assistant using the Assistants API. The steps are as specified in the official guide. For performing the steps, I constructed a simple framework consisting of different classes designed to perform all the tasks related to Assistant AI. These include methods for creating and using Assistants, Threads, Runs etc. The framework code is present in this GitHub repo.

Utilizing this code, I proceeded to create Assistants for the two data validation use cases.

Assistant for Numerical Validation

To begin with, I created Assistant for performing numerical validation. The complete code snippet is here.

1. Create an Assistant in the API by enabling Code Interpreter

The initial step involves designing instructions for assistant creation. I used a similar prompt as before, adapting it to incorporate input from a CSV file. To enable the Code Interpreter functionality, I specified it through the tools parameter.

def create_assistant(): """ Create an OpenAI AI Assistant for performing numerical validation i.e checking whether all numbers in provided CSV file are between 0 and 1 """ name = "Numerical Validation Assistant" instructions = """ You are an expert in numerical data validation. You will be provided a CSV file having 3 columns - repo_score, date and repo_name. The values of the repo_score column are floating point numbers. Your task is to verify that all the numbers in this repo_score column meet the following condition: - All values must be between 0 and 1 (inclusive) i.e each value must be greater than or equal to 0.0 and less than or equal to 1.0 Return a JSON object with two keys: 1. "valid": true if the dataset meets the criteria, false otherwise 2. "failed_values": a list containing numbers along with repo names that do not satisfy the condition """ tools = [{"type": "code_interpreter"}] try: numerical_assistant = ASSISTANT_MANAGER.create_assistant(name, instructions, tools) print(numerical_assistant) except api_exception_handler.AssistantError as error: print("Error while creating Assistant:", error) return numerical_assistant |

I recorded the generated Assistant ID upon its creation and utilized it for further interactions with the Assistant.

2. Create a Thread

To converse with the Assistant, we need a Thread. By using the create_thread method, I created a Thread and captured the Thread ID.

try: thread = THREAD_MANAGER.create_thread() thread_id = thread.id print("\nCreated Thread: ", thread_id) except api_exception_handler.ThreadError as thread_creation_error: print("Error while creating thread", thread_creation_error) |

Once we have a Thread we can add messages to it. But before that, I had to upload the CSV file from which I want the Assistant to read the data.

3. Upload File

The FileManager class has the upload_file method which invokes the respective endpoint to upload the file. Subsequently, I captured the File ID which I will later pass it as part of the message.

try: file_id = FILE_MANAGER.upload_file(FILE_NAME) except api_exception_handler.FileError as file_upload_error: print("Error while uploading file: ", file_upload_error) |

4. Add messages to Thread

The next step involved adding a message containing the user question and including it in the recently created Thread. To ensure the CSV file’s accessibility at the Thread level, I included the uploaded file ID as well.

try: user_question = "Validate the provided CSV file and give out the results" message_details = MESSAGE_MANAGER.add_message_and_file_to_thread( thread_id=thread_id, content=user_question, file_id=file_id ) print("\nDetails of message added to thread: ", message_details) except api_exception_handler.MessageError as message_error: print("Error adding message to thread:", message_error) |

5. Run the Assistant on the Thread

Finally, a Run is initiated, allowing the Assistant to process the user question and generate a response. In this step, I supplied my Assistant ID and the Thread ID created earlier.

try: run_details = RUN_MANAGER.run_assistant(thread_id=thread_id, assistant_id=assistant_id) run_id = run_details.id print(f"\nStarted run (of {thread_id}) RunID: ", run_id) except api_exception_handler.RunError as run_error: print("Error while creating a run", run_error) |

I captured the run_details using which we can check the status of the Run.

6. Display the Assistant’s Response

Upon initiating the Run, I continuously monitored its status to determine if it had reached completion. Subsequently, I retrieved the response and displayed it on the console.

try: while True: # Intentional sleep for periodic status check (Temporary solution; not a standard coding practice) time.sleep(5) run_details = RUN_MANAGER.retrieve_run_status(thread_id=thread_id, run_id=run_id) print(f"\nStatus of the Run ({run_id}): ", run_details.status) if run_details.status == "completed": MESSAGE_MANAGER.process_message(thread_id) break print("\nWaiting for the Assistant to process the message") except api_exception_handler.RunError as run_error: print("Error while processing assistant response", run_error) sys.exit(1) except api_exception_handler.MessageError as message_error: print("Error while retrieving response", message_error) |

I tried different attempts (for data validation) with the Assistant, and for each one, I followed the above mentioned steps.

Attempts with the Numerical Validation Assistant

Here, I am listing the attempts I undertook for performing numerical validation using the created Assistant.

1. Happy Path – Successful

Initially, I provided the CSV file with all values satisfying the condition, i.e., falling between 0.0 and 1.0. The Assistant accurately confirmed that no values failed the check.

“`json

{

“valid”: true,

“failed_values”: []

}

“`

No values failed the validation check.

2. Two numbers Failing the Condition – Successful

Subsequently, I made two of the numbers above 1.0. The Assistant accurately identified them in this scenario.

the validation result:

“`json

{

“valid”: false,

“failed_values”: [

[1.2, “qxf2/FiscalNote”],

[1.0052, “qxf2/Flask_SES_Form”]

]

}

3. Boundary value test – Successful

Several values already had a score of 0. I modified a few others to be 1.0 and 0.0. The Assistant correctly confirmed that no values failed the condition.

object with the results:

“`json

{

“valid”: true,

“failed_values”: []

}

“`

There are no values that failed to meet the condition, hence the empty “failed_values” list. If you have any other requests or need further analysis, feel free to ask!

4. Negative number test – Successful

I conducted a test involving negative numbers, adjusting two of the values to have negative values. The Assistant successfully identified them.

criteria. There are entries with `repo_score` values outside the specified range.

Here’s the JSON object with the validation results:

“`json

{

“valid”: false,

“failed_values”: [

{“repo_score”: -0.0026, “repo_name”: “qxf2/42Floors”},

{“repo_score”: -0.0075, “repo_name”: “qxf2/GMail”}

]

}

“`

This indicates that the repository scores for “qxf2/42Floors” and “qxf2/GMail” are invalid as they are negative values.

5. Invalid values test – Successful

Lastly, I conducted a quick test to check if the Assistant could identify non-numeric values present in the column. I transformed two of the values into strings (“qxf2”), and the Assistant successfully identified them.

non-numeric or missing data. The condition for a valid range (0 to 1, inclusive) has not been met for these records.

Here is the JSON object indicating the validation result and the entries that failed the validation check:

“`json

{

“valid”: false,

“failed_values”: [

[null, “qxf2/what-is-confusing”],

[null, “qxf2/wtfiswronghere”]

]

}

“`

Please note that `null` in the JSON output represents the `nan` (Not a Number) values found in the `repo_score` column associated with their respective repository names

Encouraged by the positive outcomes, I proceeded to the next test, which involved outlier detection.

Assistant for Outlier Detection

The next scenario involved conducting outlier detection. Similar to the previous case, I formulated the instructions for creating the Assistant.

“You are an expert in outlier data validation. You will be provided a dataset with numbers (integer or floating point). Your task is to identify potential outliers in the dataset or distribution of numbers. Outliers are values that lie outside the overall pattern in a distribution. When asked question consisting of the dataset of numbers, identify the outliers and provide it to the user.”

def create_assistant(): """ Create an OpenAI AI Assistant for detecting outliers in a given dataset """ name = "Outler Detection Assistant" instructions = ''' You are an expert in outlier data validation. You will be provided a dataset with numbers (integer or floating point). Your task is to identify potential outliers in the dataset or distribution of numbers. Outliers are values that lie outside the overall pattern in a distribution. When asked question consisting of the dataset of numbers, identify the outliers and provide it to the user. ''' tools = [{"type": "code_interpreter"}] try: assistant = ASSISTANT_MANAGER.create_assistant(name, instructions, tools) except api_exception_handler.AssistantError as error: print("Error while creating Assistant:", error) return assistant |

The subsequent steps are similar to those taken for numerical data validation. It involves creating a Thread, adding messages to the Thread, initiating a Run, and retrieving the response.

The complete code can be found here.

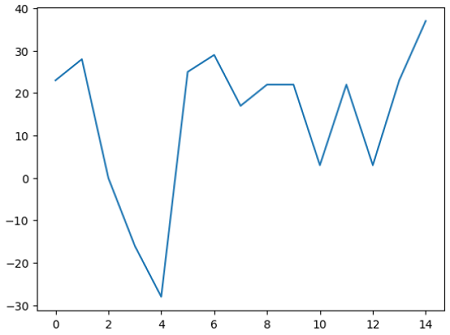

For this outlier detection use case, I varied the user questions with various datasets of numbers intended for outlier detection. These datasets are derived from my prior work with Great Expectations. To simulate outlier scenarios, I introduced spikes using synthetic data, covering different distributions.

Attempts with the Outlier Detection Assistant

Below, I will list the various distributions and outline the Assistant’s responses in detecting outliers in the datasets.

1. Single Spike Straight Line – Successful

The Assistant was correctly able to identify the outlier in the dataset. It gave explanation that it employed IQR method to calculate outliers.

[0, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218]

Expected Outliers:

[0]

User Question:

Identify the outliers in this dataset – [0, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218, 218]

Assistant Response:

The interquartile range (IQR) for the dataset is 0.0, as most of the data points have the same value (218). This results in the same value for Q1 and Q3, making IQR zero. According to the standard outlier detection method using IQR, any value that lies below the lower bound (218.0 – 1.5 * IQR) or above the upper bound (218.0 + 1.5 * IQR) should be considered an outlier. However, given that IQR is zero, the lower and upper bounds are both 218.0.

With this approach, the value [0] is identified as an outlier because it lies below the lower bound. All other values in the dataset are not considered outliers since they all equal the bound.

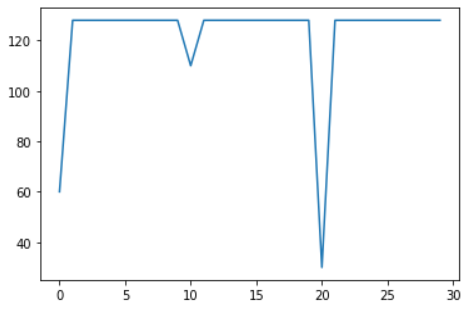

2. Sudden Downward Spikes – Successful

In this scenario, the Assistant successfully identified the outliers.

[60, 128, 128, 128, 128, 128, 128, 128, 128, 128, 110, 128, 128, 128, 128, 128, 128, 128, 128, 128, 30, 128, 128, 128, 128, 128, 128, 128, 128, 128]

Expected Outliers:

[110, 60, 30]

User Question:

Identify the outliers in this dataset – [60, 128, 128, 128, 128, 128, 128, 128, 128, 128, 110, 128, 128, 128, 128, 128, 128, 128, 128, 128, 30, 128, 128, 128, 128, 128, 128, 128, 128, 128]

Assistant Response:

The outliers in the given dataset are `[60, 110, 30]`. These values lie outside the overall pattern in the distribution based on the interquartile range (IQR) method.

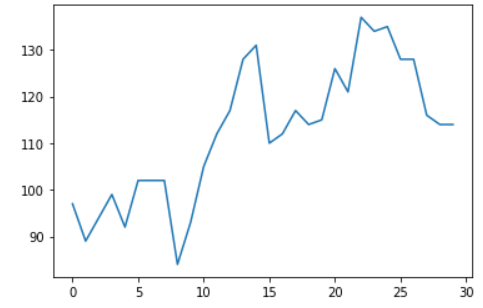

3. Real-time Data – Successful

Next, I used a portion of Qxf2’s production data – the visitors count for a particular GitHub repo. The Assistant was able to correctly identify that there were no outliers in this dataset.

[97, 89, 94, 99, 92, 102, 102, 102, 84, 93, 105, 112, 117, 128, 131, 110, 112, 117, 114, 115, 126, 121, 137, 134, 135, 128, 128, 116, 114, 114]

Expected Outliers:

[]

User Question:

Identify the outliers in this dataset – [97, 89, 94, 99, 92, 102, 102, 102, 84, 93, 105, 112, 117, 128, 131, 110, 112, 117, 114, 115, 126, 121, 137, 134, 135, 128, 128, 116, 114, 114]

Assistant Response:

After analyzing the dataset, there are no values that can be considered outliers based on the interquartile range (IQR) method. This means that all values fall within a reasonable spread around the median and do not deviate significantly enough from the middle 50% of the data to be labeled as outliers.

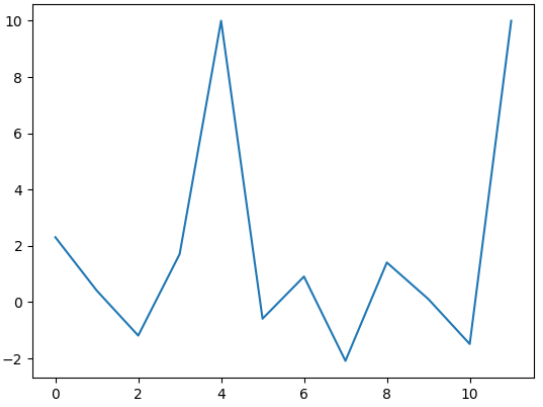

4. Normal Distribution with two spikes – Successful

I generated a distribution comprising negative floating-point numbers with two identical spikes. The Assistant successfully identified the outliers in this case.

[2.3, 0.4, -1.2, 1.7, 10, -0.6, 0.9, -2.1, 1.4, 0.1, -1.5, 10]

Expected Outliers:

[10, 10]

User Question:

Identify the outliers in this dataset – [2.3, 0.4, -1.2, 1.7, 10, -0.6, 0.9, -2.1, 1.4, 0.1, -1.5, 10]

Assistant Response:

Based on the IQR method, the lower bound for outliers in the dataset is approximately -4.65, and the upper bound is approximately 5.75. The potential outliers in the dataset are the values [10, 10] since they are above the upper bound.

5. Normal Distribution with a Single Outlier – Successful

The Assistant was able to identify the outlier here.

[2.1, 2.3, 2.5, 2.7, 2.9, 3.1, 3.3, 3.5, 3.7, 3.9, 4.1, 4.3, 4.5, 4.7, 4.9, 5.1, 5.3, 5.5, 5.7, 8]

Expected Outliers:

[8]

User Question:

Identify the outliers in this dataset – [2.1, 2.3, 2.5, 2.7, 2.9, 3.1, 3.3, 3.5, 3.7, 3.9, 4.1, 4.3, 4.5, 4.7, 4.9, 5.1, 5.3, 5.5, 5.7, 8]

Assistant Response:

The outlier in the dataset is the value 8.0.

6. Non skewed No Outlier – Successful

Next, I used a distribution which was non-skewed and had no outliers. The Assistant accurately confirmed that there were no outliers in this case.

[23, 28, 0, -16, -28, 25, 29, 17, 22, 22, 3, 22, 3, 23, 37]

Expected Outliers:

[]

User Question:

Identify the outliers in this dataset – [23, 28, 0, -16, -28, 25, 29, 17, 22, 22, 3, 22, 3, 23, 37]

Assistant Response:

There are no outliers in the dataset [23, 28, 0, -16, -28, 25, 29, 17, 22, 22, 3, 22, 3, 23, 37] based on the standard definition using the interquartile range (IQR).

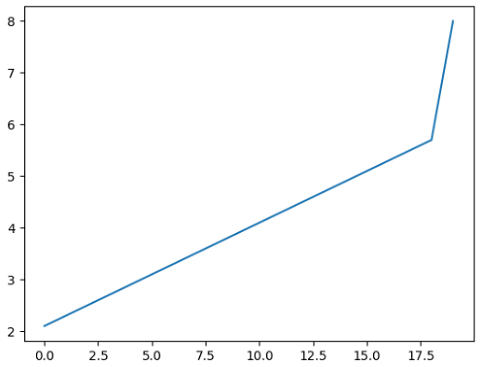

7. Straight Line with Gradual Downward Slope – Successful

The last one was a dataset consisted of a straight line that gradually descended. According to three different methods, namely Z-Score, IQR, and 2 Standard Deviations away from the mean, there were no outliers in this dataset. The Assistant concurred, stating that it couldn’t identify any outliers.

[128, 128, 128, 128, 128, 128, 128, 128, 128, 128, 128, 128, 128, 128, 128, 119, 111, 104, 97, 90, 84, 78, 73, 68, 64, 59, 55, 52, 48, 45]

Expected Outliers:

[]

User Question:

Identify the outliers in this dataset – [128, 128, 128, 128, 128, 128, 128, 128, 128, 128, 128, 128, 128, 128, 128, 119, 111, 104, 97, 90, 84, 78, 73, 68, 64, 59, 55, 52, 48, 45]

Assistant Response:

Assistant: Based on the Interquartile Range (IQR) method for identifying outliers, there are no potential outliers in the provided dataset.

From the above attempts, we can observe that the Assistant was able to exhibit a good level of accuracy in identifying outliers in nearly all the cases. Performing Data Validation using Assistants API does show promise.

Conclusion

In summary, my exploration into data validation using Large Language Models revealed interesting findings. Despite initial challenges with models like GPT-4 in performing numerical validation, the introduction of the Assistants API, equipped with Code Interpreter capability, proved to be a significant advancement. It demonstrated commendable accuracy and consistency highlighting its potential as a tool for data validation tasks. My experiences and observations, shared in this blog post, aim to contribute to the broader conversation surrounding the integration of AI models into practical data testing scenarios, providing insights and considerations for fellow testers and researchers.

Hire technical QA from Qxf2

If your engineering team is struggling to find technical QA engineers, contact Qxf2. We have test engineers who go well beyond standard test automation. Many teams waste time writing tests that do not matter and eventually just give up. Hire us and our testers will lay the foundation for testing and enable your engineering teams write effective tests.

I have been in the IT industry from 9 years. I worked as a curriculum validation engineer at Oracle for the past 5 years validating various courses on products developed by them. Before Oracle, I worked at TCS as a Manual tester. I like testing – its diverse, challenging, and satisfying in the sense that we can help improve the quality of software and provide better user experience. I also wanted to try my hand at writing and got an opportunity at Qxf2 as a Content Writer before transitioning to a full time QA Engineer role. I love doing DIY crafts, reading books and spending time with my daughter.

[[..Pingback..]]

This article was curated as a part of #118th Issue of Software Testing Notes Newsletter.

https://softwaretestingnotes.substack.com/p/issue-118-software-testing-notes

Web: https://softwaretestingnotes.com