This post covers accessibility testing with Axe: using it in your browser and integrating it with your automated test suite. We will also briefly discuss a few nuances of introducing accessibility testing into your team’s workflow. This post will NOT cover the basics of accessibility tests, the standards used, etc.

Overview

Qxf2’s clients are startups and early stage products. They usually need quick “first versions” of different non-functional tests. The tests need to be implemented quickly and need to provide basic coverage. As the product matures, they hire more testers, increase coverage and implement better versions of the tests we put in place. In that context, Qxf2 has gotten experience implementing quick and dirty versions of performance tests, security tests and now, accessibility tests as well.

This post will map to our experience. It will serve as a short guide to help you go from a beginner at Accessibility testing to integrating Axe with your automated tests.

Part I: Pick a tool

When we started looking for a tool to test the accessibility of both our internal projects and client applications, we first surveyed the popular options. We noticed that many of them, WAVE for example, had one thing in common: they relied on web URLs and lacked a way to integrate with an automation suite, which was a key requirement for us.

Narrowing down to Axe from Deque

Our search for an alternative led us to Axe, a well-known tool in the accessibility testing domain. What stood out about Axe was that it integrates with automation suites, which made it a good fit for our needs. It also caters to both technical and non-technical users, which prompted us to look deeper into its functionality. The rest of this post explores how Axe addresses our accessibility testing requirements.

While exploring Axe, we found two ways to use it: through a browser plugin, or by integrating it with our automation suite. We tried both.

Part II: Starting with axe DevTools

axe DevTools is a browser extension for testing web applications. Install it from your browser’s web store and, once installed, it becomes available in the browser’s developer tools.

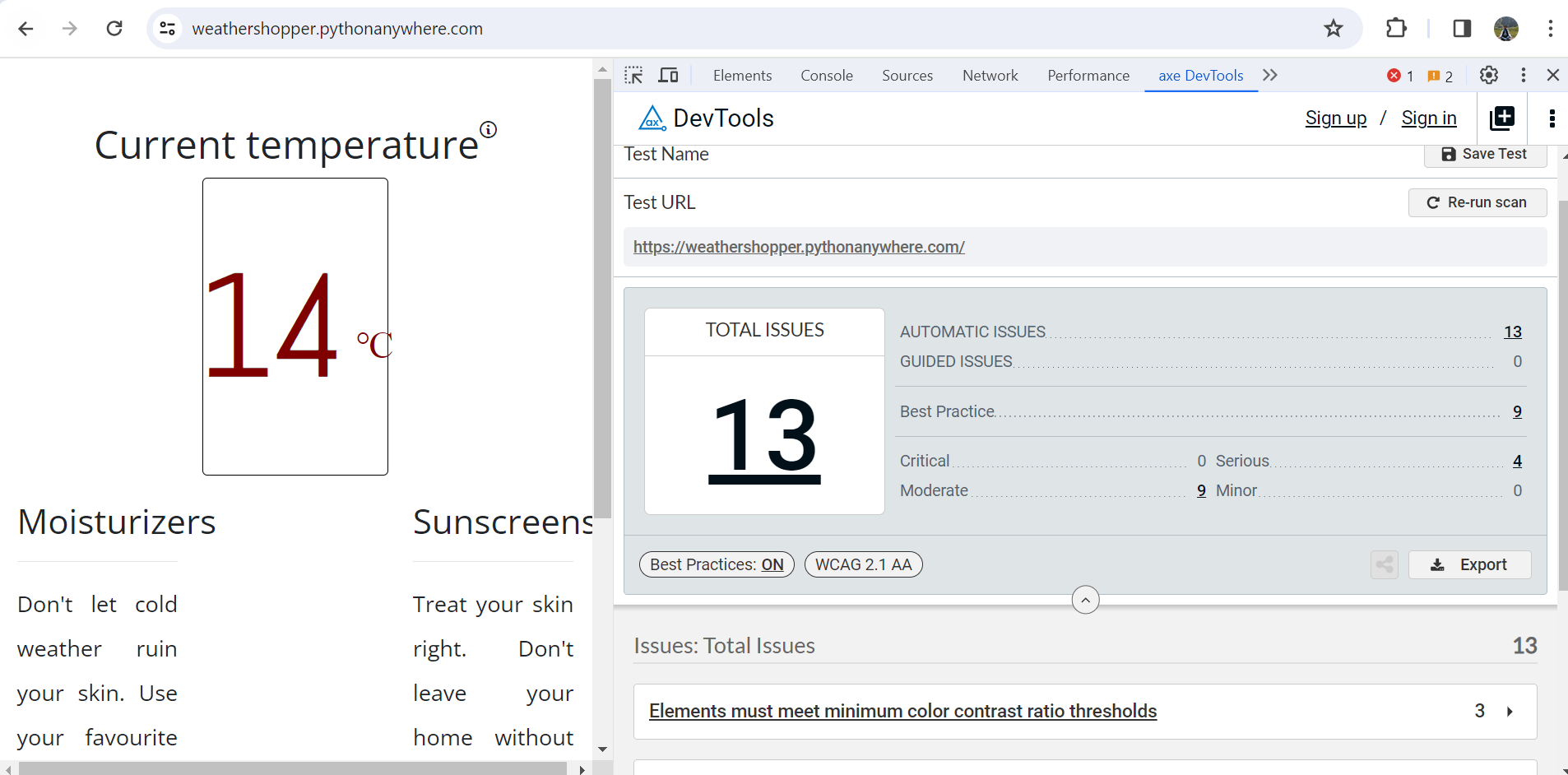

Scanning the whole page in one go to get accessibility results

Once axe DevTools is installed, navigate to the web application you want to check for accessibility issues and open the developer tools. Clicking the axe DevTools tab gives us two options:

1. Scan ALL of my page

2. Start an Intelligent Guided Test.

We first tried Scan ALL of my page and, in a jiffy, it listed the accessibility issues.

It also lets you export the results in JSON, CSV, or JUnit XML format.

Intelligent Guided Test

Intelligent Guided Test is a more advanced way of doing accessibility testing. It asks simple questions about the page and content under test, and uses machine learning to help you quickly find and fix issues that automated testing alone cannot detect.

With Intelligent Guided Test, you can also make your testing more agile by focussing only on specific parts of the page like a table, images or forms.

We spent less time on Intelligent Guided Test because we were more interested in integrating Axe with our automation suite, and the axe DevTools extension had already given us a good start.

Part III: Integrating Axe with test automation

In this section we will show you how to integrate Axe with your test automation framework. We will focus on integrating Axe with Qxf2’s test automation framework. But the steps remain similar for any framework of your choice.

1. Exploring axe-core

axe-core is the accessibility engine for automated Web UI testing and we will be using this as a part of our automation suite moving forward. Let’s see how we can use it.

Installation of axe-core

Installing axe-core is straightforward. To use Axe with Selenium in Python, install the axe-selenium-python package with pip.

    pip install axe-selenium-python

axe-core ships rules for WCAG 2.0, 2.1 and 2.2 at levels A, AA and AAA, along with best-practice rules that catch common accessibility problems, such as ensuring every page has an h1 heading.
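As a rough sketch of how these rule groups can be selected, axe-core lets a run be limited to rules carrying certain tags through its runOnly option. The helper name build_run_only_options is ours, and the commented-out call assumes that the run() method of axe-selenium-python forwards an options argument to axe.run, so check the version you have installed.

```python
import json

# A subset of the tag names that axe-core attaches to its rules.
KNOWN_TAGS = {
    "wcag2a", "wcag2aa", "wcag2aaa",
    "wcag21a", "wcag21aa",
    "wcag22aa",
    "best-practice",
}


def build_run_only_options(tags):
    """Return axe-core options that restrict a run to the given rule tags."""
    unknown = sorted(set(tags) - KNOWN_TAGS)
    if unknown:
        raise ValueError(f"Unknown axe-core tags: {unknown}")
    return {"runOnly": {"type": "tag", "values": list(tags)}}


options = build_run_only_options(["wcag2a", "wcag2aa", "best-practice"])
# Serialise to JSON so the options can be embedded in the injected JavaScript call.
options_js = json.dumps(options)

# Hypothetical usage with a Selenium driver (not executed here):
# axe = Axe(driver)
# axe.inject()
# results = axe.run(options=options_js)
```

Starting with level A and AA tags and adding stricter ones later keeps the first report to a manageable size.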

Content of the axe-core API

The axe-core API consists of:

axe.min.js – a minified version of the JavaScript file to be used in our framework. Axe injects this file into every frame to evaluate the contents of that frame. This is the file that identifies the accessibility problems.

2. Integration of axe-core with Qxf2 framework

When we started the integration, we thought it would be straightforward: inject the JavaScript, run it on the pages and collect the accessibility issues. But we hit a few issues along the way. We will discuss them here so that anyone facing the same issues doesn’t spend much time debugging.

Include axe.min.js in your code base

Online examples usually show just two Axe methods, inject and run. When integrating Axe within a framework, we had to do a bit more than call these methods directly. We dug a level deeper into the constructor of the Axe class and realized that we were missing an important parameter called script_url. script_url points to the axe.min.js file that gets injected into the page. We had to add axe.min.js to our framework and point script_url to it before using any of the Axe methods. With that in place, our utility class inherits from Axe and calls the parent constructor through super(), so the methods of the Axe class are available on it.

    import os
    from axe_selenium_python import Axe

    script_url = os.path.abspath(os.path.join(os.path.dirname(__file__), "..", "utils", "axe.min.js"))

    class Accessibilityutil(Axe):
        "Accessibility object to run accessibility test"
        def __init__(self, driver):
            super().__init__(driver, script_url)
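A wrong script_url tends to surface late, as a confusing failure inside the browser. One way to catch it early is to check the path when it is built. This is a minimal sketch: the helper name resolve_axe_script is hypothetical, and the utils/axe.min.js layout simply mirrors the path used in this post.

```python
import os


def resolve_axe_script(base_dir, relative_parts=("utils", "axe.min.js")):
    """Build an absolute path to axe.min.js and fail early if the file is missing."""
    script_path = os.path.abspath(os.path.join(base_dir, *relative_parts))
    if not os.path.isfile(script_path):
        raise FileNotFoundError(f"axe.min.js not found at {script_path}")
    return script_path


# Hypothetical usage inside the utility module (not executed here):
# script_url = resolve_axe_script(os.path.join(os.path.dirname(__file__), ".."))
```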

Write wrappers for the inject and run methods

The methods of interest to us were inject() and run() in the parent class. We wrapped both methods as shown below.

    def accessibility_inject_axe(self):
        "Inject Axe into the Page"
        try:
            return self.axe_util.inject()
        except Exception as e:
            self.write(e)

    def accessibility_run_axe(self):
        "Run Axe into the Page"
        try:
            return self.axe_util.run()
        except Exception as e:
            self.write(e)

With this we were able to use the methods in our tests, but there was a problem. When we ran the accessibility test, we got a single test result covering all the pages. That made it hard to tell which accessibility issue belonged to which page. Since we deal with multiple pages, we need an accessibility report for each page. To handle this, we introduced a method in the PageFactory of our framework that returns all the page names. We then iterate over the pages in our tests, inject and run Axe, and create a result for every page.

    @staticmethod
    def get_all_page_names():
        "Return the page names"
        return ["main page", "redirect", "contact page"]
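The per-page idea can be tried out without a browser. In the sketch below, collect_violations_per_page and fake_run_axe are hypothetical names. fake_run_axe stands in for "open the page, inject Axe, run Axe" and returns made-up data in the shape of an Axe result.

```python
def collect_violations_per_page(page_names, run_axe_for_page):
    """Run Axe once per page and keep each page's violations separate."""
    violations_by_page = {}
    for page in page_names:
        # A wrapper that swallowed an exception returns None; treat that as an empty result.
        axe_result = run_axe_for_page(page) or {}
        violations_by_page[page] = axe_result.get("violations", [])
    return violations_by_page


def fake_run_axe(page):
    """Stand-in for the real Axe run; the data here is invented for illustration."""
    sample = {
        "main page": {"violations": [{"id": "image-alt", "impact": "critical", "nodes": []}]},
        "contact page": {"violations": []},
    }
    return sample.get(page)


report = collect_violations_per_page(["main page", "redirect", "contact page"], fake_run_axe)
for page, violations in report.items():
    print(f"{page}: {len(violations)} violation(s)")
```

Keying the results by page name is what later makes one snapshot file per page possible.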

Example tests

Here is part of a test that fetches the page names from PageFactory, iterates over each page, and runs Axe on it.

    @pytest.mark.ACCESSIBILITY
    def test_accessibility(test_obj):
        "Inject Axe and create snapshot for every page"
        try:
            #Initialize flags for tests summary
            expected_pass = 0
            actual_pass = -1
            #Get all pages
            page_names = PageFactory.get_all_page_names()
            for page in page_names:
                test_obj = PageFactory.get_page_object(page,base_url=test_obj.base_url)
                #Inject Axe in every page
                test_obj.accessibility_inject_axe()
                #Check if Axe is run in every page
                run_result = test_obj.accessibility_run_axe()
                #Excerpt only: the result logging, except block and final assert
                #are part of the complete test script later in this post

Now that we could produce an accessibility result for every page, we wondered how useful the result would be on its own. Any UI change is likely to introduce new accessibility issues, but the test would simply capture the latest result. There was no way to compare it with the last run, to know what changed, or, worse, to tell whether the change was acceptable. While looking into this, we came across snapshot testing, which lets us keep a reference snapshot and compare the result of each test run against it.

3. Using snapshot testing against Axe result

Using snapshots was simple. We had to install a snapshot testing plugin for pytest.

    pip install pytest-snapshot

We created a class that calls the parent Snapshot class using the super() method.

    import conf.snapshot_dir_conf
    from pytest_snapshot.plugin import Snapshot

    snapshot_dir = conf.snapshot_dir_conf.snapshot_dir

    class Snapshotutil(Snapshot):
        "Snapshot object to use snapshot for comparisons"
        def __init__(self, snapshot_update=False,
                     allow_snapshot_deletion=False,
                     snapshot_dir=snapshot_dir):
            super().__init__(snapshot_update, allow_snapshot_deletion, snapshot_dir)

To generate the snapshots for the first time, use the following command-line argument while running the test script:

    python -m pytest tests/test_accessibility.py --snapshot-update

Passing the above command-line argument while running the test script creates a snapshot directory along with the snapshot file for the first time. If the snapshots already exist, it updates them with the latest copy of Axe violations in the test pages.
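To make the create-or-update behaviour concrete, here is a stdlib-only sketch that stores one JSON baseline per page. It is not how pytest-snapshot is implemented. The function names and the file naming scheme are assumptions made for illustration.

```python
import json
from pathlib import Path


def snapshot_path(snapshot_dir, page):
    """Map a page name such as 'main page' to a snapshot file path."""
    return Path(snapshot_dir) / f"{page.replace(' ', '_')}_violations.json"


def load_or_create_snapshot(snapshot_dir, page, current_violations, update=False):
    """Return the stored baseline, or None when a snapshot was just written.

    A snapshot is written on the first run (no file yet) or when update is True.
    """
    path = snapshot_path(snapshot_dir, page)
    if update or not path.exists():
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(json.dumps(current_violations, indent=2, sort_keys=True))
        return None
    return json.loads(path.read_text())
```

Returning None when a snapshot has just been written lets the caller skip the comparison for that page and ask for a manual review instead.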

Using DeepDiff library for snapshot comparisons

We use the ‘compare_and_log_violation()’ method to compare the current test results with existing snapshots using the DeepDiff library. If no new violations are found while comparing against the baseline snapshot, the test passes. If violations are resolved or new violations are found, they are logged in the console and in a separate file located in ‘./conf/new_violations_record.txt’.

    # Use deepdiff to compare the snapshots
    violation_diff = DeepDiff(existing_snapshot_dict, current_violations_dict,
                              ignore_order=True, verbose_level=2)
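For readers who want to see the comparison logic without DeepDiff, the same idea can be approximated with plain sets. This is a simplified stand-in, not the compare_and_log_violation() method: it reduces each violation to (rule id, element selector) pairs, relying on the id, nodes and target fields of an Axe result, and the function names are hypothetical.

```python
def violation_keys(violations):
    """Flatten Axe violations into a set of (rule id, element selector) pairs."""
    keys = set()
    for violation in violations:
        for node in violation.get("nodes", []):
            # 'target' is a list of selectors locating the offending element.
            selector = " ".join(str(part) for part in node.get("target", []))
            keys.add((violation["id"], selector))
    return keys


def diff_violations(baseline, current):
    """Return (new, resolved) violation keys relative to the baseline snapshot."""
    baseline_keys = violation_keys(baseline)
    current_keys = violation_keys(current)
    new = sorted(current_keys - baseline_keys)
    resolved = sorted(baseline_keys - current_keys)
    return new, resolved


# Invented sample data in the shape of Axe violations.
baseline = [{"id": "image-alt", "nodes": [{"target": ["img.logo"]}]}]
current = [
    {"id": "image-alt", "nodes": [{"target": ["img.logo"]}]},
    {"id": "label", "nodes": [{"target": ["#email"]}]},
]
new, resolved = diff_violations(baseline, current)
```

Comparing on rule id and selector ignores cosmetic differences such as help text, at the cost of missing changes in other fields that a full DeepDiff comparison would report.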

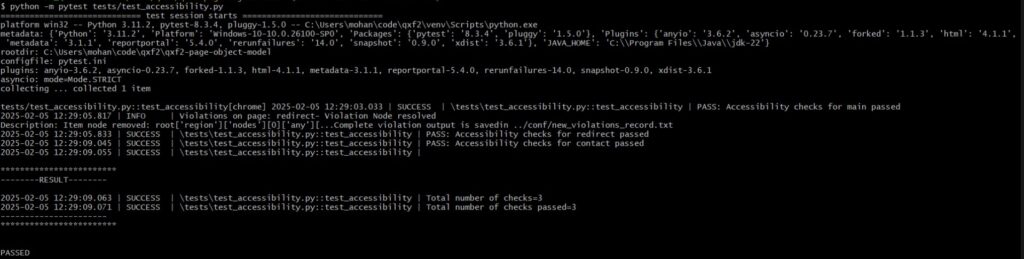

So, with the above methods of Snapshot class, the test script runs accessibility checks on each page, compares the results with stored snapshots, and logs any changes found.

Here is the complete test script for Axe accessibility testing.

    @pytest.mark.ACCESSIBILITY
    def test_accessibility(test_obj, request):
        "Test accessibility using Axe and compare snapshot results and save if new violations found"
        try:
            #Initialize flags for tests summary
            expected_pass = 0
            actual_pass = -1

            #Get snapshot update flag from pytest options
            snapshot_update = request.config.getoption("--snapshot_update")
            #Create an instance of Snapshotutil
            snapshot_util = Snapshotutil(snapshot_update=snapshot_update)

            #Set up the violations log file
            violations_log_path = snapshot_util.initialize_violations_log()
            snapshot_dir = conf.snapshot_dir_conf.snapshot_dir

            #Get all pages
            page_names = conf.snapshot_dir_conf.page_names
            for page in page_names:
                test_obj = PageFactory.get_page_object(page,base_url=test_obj.base_url)
                #Inject Axe in every page
                test_obj.accessibility_inject_axe()
                #Check if Axe is run in every page
                axe_result = test_obj.accessibility_run_axe()
                #Extract the 'violations' section from the Axe result
                current_violations = axe_result.get('violations', [])

                # Log if no violations are found
                if not current_violations:
                    test_obj.log_result(
                        True,
                        positive=f"No accessibility violations found on {page}.",
                        negative="",
                        level='info'
                    )

                #Load the existing snapshot for the current page (if available)
                existing_snapshot = snapshot_util.initialize_snapshot(snapshot_dir, page, current_violations=current_violations)
                if existing_snapshot is None:
                    test_obj.log_result(
                        True,
                        positive=(
                            f"No existing snapshot was found for {page} page. "
                            "A new snapshot has been created in ../conf/snapshot dir. "
                            "Please review the snapshot for violations before running the test again.\n"
                        ),
                        negative="",
                        level='info'
                    )
                    continue

                #Compare the current violations with the existing snapshot to find any new violations
                snapshots_match, new_violation_details = snapshot_util.compare_and_log_violation(
                    current_violations, existing_snapshot, page, violations_log_path
                )
                #For each new violation, log few details to the output display
                if new_violation_details:
                    snapshot_util.log_new_violations(new_violation_details)
                #Log the result of the comparison (pass or fail) for the current page
                test_obj.log_result(snapshots_match,
                                    positive=f'Accessibility checks for {page} passed',
                                    negative=f'Accessibility checks for {page} failed',
                                    level='debug')

            #Print out the result
            test_obj.write_test_summary()
            expected_pass = test_obj.result_counter
            actual_pass = test_obj.pass_counter

        except Exception as e:
            test_obj.log_result(
                False,
                positive="",
                negative=f"Exception when trying to run test: {__file__}\nPython says: {str(e)}",
                level='error'
            )

        assert expected_pass == actual_pass, f"Test failed: {__file__}"

This is how a typical Axe test result looks.
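For readers who want to print a compact view of a result themselves, the sketch below turns the violations list of an Axe result into one line per failed rule. It relies on the id, impact, help and nodes fields that axe-core reports for each violation. The function name and the sample data are invented for illustration and are not output from our test run.

```python
def summarize_violations(page, violations):
    """Build one log line per violated rule for the given page."""
    lines = [f"{page}: {len(violations)} rule(s) violated"]
    for violation in violations:
        node_count = len(violation.get("nodes", []))
        lines.append(
            f"  {violation['id']} [{violation.get('impact', 'unknown')}]: "
            f"{node_count} element(s) - {violation.get('help', '')}"
        )
    return lines


# Invented sample in the shape of an Axe 'violations' list.
sample_violations = [
    {
        "id": "color-contrast",
        "impact": "serious",
        "help": "Elements must have sufficient color contrast",
        "nodes": [{"target": ["#subscribe"]}, {"target": [".footer a"]}],
    }
]
summary = summarize_violations("main page", sample_violations)
print("\n".join(summary))
```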

Part IV: Running Accessibility test as a part of CI

As part of our Newsletter Automation project, we have integrated accessibility tests into our GitHub workflow. In addition to running API and UI tests as part of CI, we wanted to include accessibility tests as well. However, we debated where these tests should fit—before or after functional tests? The decision largely depended on the project’s priorities and requirements. While we are still evaluating the best placement, we have currently positioned them before functional tests.

The next problem was that the accessibility tests failed frequently. This is a common phenomenon: the application under test evolves and its UI changes often. But we did not want CI to stop and skip the remaining tests just because the accessibility tests failed.

So, we used a condition that lets the subsequent steps run even if the accessibility step fails. The snippet below shows how we used if: always() on the UI tests, which run after the accessibility tests even when those fail.

    - name: Run Accessibility Tests
      run: |
        cd tests/integration/tests/accessibility_tests
        python -m pytest test_accessibility.py --browser headless-chrome --app_url http://localhost:5000

    - name: Run UI Tests
      if: always()
      run: |
        cd tests/integration/tests/ui_tests
        python -m pytest -n 4 --browser headless-chrome --app_url http://localhost:5000

For more details, you can refer to our GitHub workflow file.

Part V: Introducing Accessibility tests to a team

Now you know how to add accessibility testing to your test suite. While the technical side of things looks simple, there are problems you need to handle within your team. When you run accessibility tests for the first time, expect a lot of errors to show up. Your team is not going to be able to address everything. In fact, they might choose to address only the critical misses. So where does that leave us with CI and automation? Should the automated tests (and therefore the CI pipeline) fail all the time? Probably not. You do not want folks ignoring test results “because the tests are expected to fail”. Here is where your skill as a tester comes in. You need to work out a feasible path with your team: some sort of policy or agreement where the team commits to a timeline after which your automated accessibility tests will run. In the meantime, they can run your automated tests against their local machines when they make improvements. Further, you can also have policies in your CI to make sure no new accessibility issues are being introduced.
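One way such a CI policy could look is a gate that fails the build only for new violations above an agreed severity. This is a sketch under stated assumptions: the set of blocking impacts is something your team would decide, the function names are hypothetical, and the input is assumed to be the list of violations not present in the baseline snapshot. The impact values themselves (minor, moderate, serious, critical) are the ones axe-core reports.

```python
# Assumption: the team agreed that only these impact levels block a merge.
BLOCKING_IMPACTS = frozenset({"critical", "serious"})


def blocking_violations(new_violations, blocking_impacts=BLOCKING_IMPACTS):
    """Keep only the new violations whose impact the team treats as blocking."""
    return [v for v in new_violations if v.get("impact") in blocking_impacts]


def should_fail_build(new_violations, blocking_impacts=BLOCKING_IMPACTS):
    """Fail CI only when a new, blocking violation has been introduced."""
    return bool(blocking_violations(new_violations, blocking_impacts))


# Invented sample: one new minor issue and one new critical issue.
new_violations = [
    {"id": "region", "impact": "moderate"},
    {"id": "image-alt", "impact": "critical"},
]
print(should_fail_build(new_violations))
```

Known issues stay visible in the snapshot while the build stays green, so a red build keeps meaning that something new and important broke.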

Conclusion

This post was meant to help testers quickly produce some accessibility testing results. We would strongly encourage you to read more about Accessibility standards and how to introduce them within the context of your team.


My journey in software testing began with Calabash and Cucumber, where I delved into Mobile Automation using Ruby. As my career progressed, I gained experience working with a diverse range of technical tools. These include e-Discovery, Selenium, Python, Docker, 4G/5G testing, M-CORD, CI/CD implementation, Page Object Model framework, API testing, Testim, WATIR, MockLab, Postman, and Great Expectation. Recently, I’ve also ventured into learning Rust, expanding my skillset further. Additionally, I am a certified Scrum Master, bringing valuable agile expertise to projects.

On the personal front, I am a very curious person on any topic. According to Myers-Briggs Type Indicator, I am described as INFP-T. I like playing soccer, running and reading books.


This article was curated as a part of [#119th Issue of Software Testing Notes Newsletter.](https://softwaretestingnotes.substack.com/p/issue-119-software-testing-notes)

Web: https://softwaretestingnotes.com