You can now create asynchronous API automation tests using Qxf2’s API Automation Framework.

In this post, we will explain:

– Why we need async

– How we modified our synchronous framework to support asynchronous HTTP calls

– How to create an Async API Automation test using our framework

– Cases where Async is not the right fit

Note: This post is mostly about how a few changes to our framework made it support running tests asynchronously. If you have not used our API framework and have no idea how it works, you can still follow along to learn how to create non-blocking versions of blocking functions and how to write coroutine test functions for pytest.

Why Async?

Modern computers with multiple CPU cores can perform multiple tasks at the same time. When running an I/O-bound task, a program often sits idle while it waits for the I/O operation (such as a network response) to complete. Async reduces this idle time by running another task during the wait. In many cases, running steps asynchronously can also reduce execution time drastically: when we ran 300 API requests synchronously and asynchronously using our framework, the asynchronous run finished more than 10x faster (about 7.6 seconds versus about 90.5 seconds, as the logs below show). We find asynchronous calls especially useful for setup steps.
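To see why overlapping the waits helps, here is a minimal sketch that is independent of our framework. It assumes `asyncio.sleep` is a fair stand-in for a network call (real requests vary in latency), and the names `fake_request`, `run_sequentially` and `run_concurrently` are hypothetical.

```python
import asyncio
import time


async def fake_request(request_id, delay=0.05):
    "Hypothetical I/O-bound call: asyncio.sleep yields to the event loop while 'waiting'"
    await asyncio.sleep(delay)
    return request_id


async def run_sequentially(count):
    start = time.perf_counter()
    for request_id in range(count):
        await fake_request(request_id)  # each wait must finish before the next starts
    return time.perf_counter() - start


async def run_concurrently(count):
    start = time.perf_counter()
    # gather schedules all requests at once, so their waits overlap
    await asyncio.gather(*(fake_request(request_id) for request_id in range(count)))
    return time.perf_counter() - start


if __name__ == "__main__":
    print(f"sequential: {asyncio.run(run_sequentially(10)):.2f}s")
    print(f"concurrent: {asyncio.run(run_concurrently(10)):.2f}s")
```

With ten simulated requests of 0.05 seconds each, the sequential run should take roughly half a second, while the concurrent run should take only slightly longer than a single request.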

```text
2024-08-28 14:44:12 | WARNING | tests.test_api_sync_example| The total number of sync requests is 300
2024-08-28 14:44:12 | WARNING | tests.test_api_sync_example| The time taken to complete sync call is 90.46587109565735
2024-08-28 14:44:13 | WARNING | test_api_async_example | The total number of async requests is 300
2024-08-28 14:44:13 | WARNING | test_api_async_example | The time taken to complete async call is 7.610826253890991
```

Changes we made to our framework

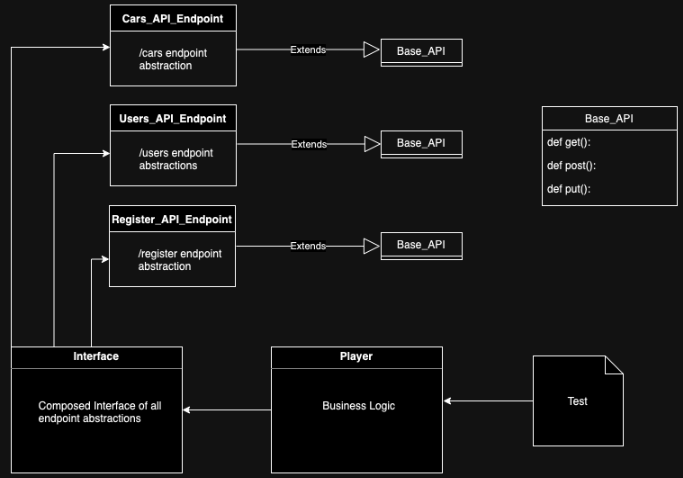

We have added an image below implementing our framework against a sample Cars-API application to show you have our Player-Interface API Automation framework works.

The following layers form the crux of the framework:

1. Endpoints layer

2. Interface layer

3. Player layer

The API endpoints in the application are abstracted in the Endpoints layer (all the *_API_Endpoints.py modules). The Interface layer (the API_Interface.py module) collects all the API endpoint abstractions, and the Player layer (the API_Player.py module) houses the business logic used to validate the application.
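To make the layering concrete, here is a heavily simplified, hypothetical sketch. The class names mirror the module names above, but the method bodies, the canned responses and the use of inheritance to "collect" the endpoints in the Interface layer are assumptions for illustration, not the framework's actual code. The HTTP calls are stubbed out so the sketch runs offline.

```python
# Hypothetical, simplified sketch of the Endpoints -> Interface -> Player layering.
# HTTP calls are replaced with canned data so the example runs offline.


class Cars_API_Endpoints:
    "Endpoints layer: one method per /cars endpoint (stubbed)"

    def get_cars(self, headers=None):
        # A real endpoint module would make the HTTP call through Base_API
        return {"response": 200, "cars": [{"name": "sample car", "brand": "sample brand"}]}


class User_API_Endpoints:
    "Endpoints layer: one method per /users endpoint (stubbed)"

    def get_user_list(self, headers=None):
        return {"response": 200, "user_list": ["sample user"]}


class API_Interface(Cars_API_Endpoints, User_API_Endpoints):
    "Interface layer: one object exposing every endpoint abstraction"


class API_Player:
    "Player layer: business logic that validates the application"

    def __init__(self):
        self.api_obj = API_Interface()

    def get_cars(self, auth_details=None):
        "Return True if the cars endpoint responds with a non-empty list"
        result = self.api_obj.get_cars(headers=auth_details)
        return result["response"] == 200 and len(result["cars"]) > 0

    def get_users(self, auth_details=None):
        "Return True if the users endpoint responds with a non-empty list"
        result = self.api_obj.get_user_list(headers=auth_details)
        return result["response"] == 200 and len(result["user_list"]) > 0
```

Tests only talk to the Player, so the endpoint details can change without touching the test logic.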

The endpoint abstractions extend the Base_API module, which makes the HTTP calls using the requests module. Base_API originally had only blocking functions for GET, POST, PUT and DELETE requests, so to support async API automation tests we added non-blocking counterparts for each of those requests.

Blocking GET HTTP method:

```python
import requests

# Method of the Base_API class
def get(self, url, headers=None):
    "Run HTTP Get request against an url"
    headers = headers if headers else {}
    response = None
    try:
        response = requests.get(url=url, headers=headers)
    except Exception as generalexcep:
        print(f"Unable to run GET request against {url} due to {generalexcep}")
    return response
```

Disclaimer: The code in this blog post is intended for demonstration purposes only. We recommend following better coding standards for real-world applications.

Non-blocking GET HTTP method for the previous synchronous method:

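```python
import asyncio

# Method of the Base_API class
async def async_get(self, url, headers=None):
    "Run the blocking GET method in a thread"
    # headers=None avoids a shared mutable default; get() turns None into {}
    response = await asyncio.to_thread(self.get, url, headers)
    return response
```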

Running code on a separate thread is not asynchronous in itself, but asyncio.to_thread lets us await the result without blocking the event loop, so other coroutines can run while the request is in flight.
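A minimal, framework-independent sketch of the same idea follows. `blocking_get` is a hypothetical stand-in for a `requests` call that blocks with `time.sleep`. Wrapping it in `asyncio.to_thread` (available from Python 3.9) runs each call in a worker thread, so several calls can be awaited concurrently and the total time stays close to a single call's delay rather than the sum of all delays.

```python
import asyncio
import time


def blocking_get(url, delay=0.2):
    "Hypothetical stand-in for requests.get: blocks the calling thread"
    time.sleep(delay)
    return f"response from {url}"


async def async_get(url):
    "Run the blocking call in a worker thread so the event loop stays free"
    return await asyncio.to_thread(blocking_get, url)


async def fetch_all(urls):
    start = time.perf_counter()
    # Each to_thread call runs in the default thread pool, so the sleeps overlap
    responses = await asyncio.gather(*(async_get(url) for url in urls))
    return responses, time.perf_counter() - start
```

Because the concurrency comes from threads, it is bounded by the size of asyncio's default thread pool: beyond that worker count, extra calls queue up and wait for a free thread.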

We used asyncio.to_thread to add similar non-blocking functions for the corresponding blocking functions in the *_API_Endpoints and API_Player modules as well.
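As a hedged illustration of the Player-layer version, the hypothetical excerpt below pairs a blocking `add_car` with a non-blocking `async_add_car`. The in-memory `cars` list and the `time.sleep` call are assumptions that replace the real HTTP round trip. `asyncio.to_thread` forwards keyword arguments to the wrapped function, which fits keyword-style calls such as `async_add_car(car_details=..., auth_details=...)`.

```python
import asyncio
import time


class API_Player:
    "Hypothetical Player-layer excerpt with a blocking method and its async twin"

    def __init__(self):
        self.cars = []  # in-memory stand-in for the application's data

    def add_car(self, car_details, auth_details=None):
        "Blocking business logic (the HTTP call is simulated with time.sleep)"
        time.sleep(0.1)
        self.cars.append(car_details)
        return car_details in self.cars

    async def async_add_car(self, car_details, auth_details=None):
        "Non-blocking wrapper: run add_car in a worker thread"
        return await asyncio.to_thread(
            self.add_car, car_details=car_details, auth_details=auth_details
        )
```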

Create an Async API Automation test

A regular (synchronous) test function cannot await the coroutines we added to the API_Player module, so the test function needs to be a coroutine too. The pytest-asyncio plugin makes it possible to write coroutine test functions.

```python
import pytest


@pytest.mark.asyncio
async def test_api_async_example(test_api_obj):
    "Run api test"
```

The @pytest.mark.asyncio marker is required for pytest to run the coroutine as a test; it tells pytest-asyncio to execute the test function inside an event loop.

The test scenarios are then added as tasks inside the coroutine test function. We used an asyncio.TaskGroup object to group the test scenarios and run them asynchronously.

```python
async with asyncio.TaskGroup() as group:
    get_cars = group.create_task(test_api_obj.async_get_cars(auth_details))
    add_new_car = group.create_task(test_api_obj.async_add_car(car_details=car_details, auth_details=auth_details))
    get_car = group.create_task(test_api_obj.async_get_car(auth_details=auth_details, car_name=existing_car, brand=brand))
```

Putting it all together, this is how our sample Async API Automation test for the Cars-API app looks:

```python
"""
API Async EXAMPLE TEST
This test collects tasks using asyncio.TaskGroup object
and runs these scenarios asynchronously:
1. Get the list of cars
2. Add a new car
3. Get a specific car from the cars list
4. Get the registered cars
"""
import asyncio
import os
import sys
import pytest
sys.path.append(os.path.dirname(os.path.dirname(os.path.abspath(__file__))))
from conf import api_example_conf


@pytest.mark.asyncio
# Skip running the test if Python version < 3.11
@pytest.mark.skipif(sys.version_info < (3, 11), reason="requires Python3.11 or higher")
async def test_api_async_example(test_api_obj):
    "Run api test"
    try:
        expected_pass = 0
        actual_pass = -1

        # set authentication details
        username = api_example_conf.user_name
        password = api_example_conf.password
        auth_details = test_api_obj.set_auth_details(username, password)

        # Get an existing car detail from conf
        existing_car = api_example_conf.car_name_1
        brand = api_example_conf.brand
        # Get a new car detail from conf
        car_details = api_example_conf.car_details

        async with asyncio.TaskGroup() as group:
            get_cars = group.create_task(test_api_obj.async_get_cars(auth_details))
            add_new_car = group.create_task(test_api_obj.async_add_car(car_details=car_details, auth_details=auth_details))
            get_car = group.create_task(test_api_obj.async_get_car(auth_details=auth_details, car_name=existing_car, brand=brand))
            get_reg_cars = group.create_task(test_api_obj.async_get_registered_cars(auth_details=auth_details))

        # All tasks are done once the TaskGroup block exits, so .result() is safe here
        test_api_obj.log_result(get_cars.result(),
                                positive="Successfully obtained the list of cars",
                                negative="Failed to get the cars")
        test_api_obj.log_result(add_new_car.result(),
                                positive=f"Successfully added new car {car_details}",
                                negative="Failed to add a new car")
        test_api_obj.log_result(get_car.result(),
                                positive=f"Successfully obtained a car - {existing_car}",
                                negative=f"Failed to get the car - {existing_car}")
        test_api_obj.log_result(get_reg_cars.result(),
                                positive="Successfully obtained registered cars",
                                negative="Failed to get registered cars")

        # write out test summary
        expected_pass = test_api_obj.total
        actual_pass = test_api_obj.passed
        test_api_obj.write_test_summary()

        # Assertion
        assert expected_pass == actual_pass, f"Test failed: {__file__}"

    except Exception as e:
        test_api_obj.write(f"Exception when trying to run test: {__file__}")
        test_api_obj.write(f"Python says: {str(e)}")
        # Re-raise: AssertionError is an Exception, so without this a failed test would pass
        raise
```

We used the @pytest.mark.skipif marker to skip the test on Python versions older than 3.11, because asyncio.TaskGroup is only available in Python 3.11 and later.
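If skipping the test on older interpreters is not acceptable, one alternative (our suggestion, not what the framework does) is to fall back to `asyncio.gather` when TaskGroup is unavailable. In this sketch, `get_cars` and `add_car` are hypothetical scenarios that use `asyncio.sleep` in place of API calls.

```python
import asyncio
import sys


async def get_cars():
    "Hypothetical scenario; asyncio.sleep stands in for the API call"
    await asyncio.sleep(0.01)
    return "cars"


async def add_car():
    "Hypothetical scenario; asyncio.sleep stands in for the API call"
    await asyncio.sleep(0.01)
    return True


async def run_scenarios():
    "Use TaskGroup where available, otherwise fall back to asyncio.gather"
    if sys.version_info >= (3, 11):
        async with asyncio.TaskGroup() as group:
            tasks = [group.create_task(get_cars()), group.create_task(add_car())]
        return [task.result() for task in tasks]
    # Python < 3.11: gather runs the coroutines concurrently and returns
    # their results in the order the coroutines were passed in
    return await asyncio.gather(get_cars(), add_car())
```

The trade-off is error handling: with its default `return_exceptions=False`, `gather` propagates the first exception but does not cancel the other coroutines, which keep running. Also, because `asyncio.to_thread` was added in Python 3.9, the non-blocking helpers would still need 3.9 or later.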

Scenarios where Async API test is not the right choice

Although an async API automation test can help validate an app and reduce the time the validations take, there are cases where making HTTP calls asynchronously might not be the right approach. Here are a few scenarios where we think a synchronous test is the better choice:

– Tests where some scenarios need to run in a specific order are not a good fit for asynchronous testing, because the order in which the coroutines collected as tasks execute cannot be controlled, which makes the test susceptible to race conditions.

– Tests with very few scenarios are better off run synchronously, because the overhead of collecting and running only a handful of scenarios asynchronously cancels out most of the advantage.

– Projects that cannot be maintained regularly. The asyncio module has changed considerably across Python versions (asyncio.TaskGroup, for example, only arrived in Python 3.11), so async tests tend to need more frequent maintenance.
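When only some scenarios depend on each other, one middle ground (our suggestion, not something the framework does) is to await the dependent step first and run only the independent steps concurrently. The sketch below uses a hypothetical in-memory `cars` dict and simulated delays to show the race: run concurrently, the faster read finishes before the write and finds nothing; run in order, the read sees the new car.

```python
import asyncio

# Hypothetical in-memory stand-in for the Cars-API backend
cars = {}


async def add_car(name):
    "Simulated slow POST request"
    await asyncio.sleep(0.05)
    cars[name] = {"name": name}


async def get_car(name):
    "Simulated fast GET request; returns None if the car does not exist yet"
    await asyncio.sleep(0.01)
    return cars.get(name)


async def racy(name):
    # add and get run concurrently, so the GET typically finishes first
    _, car = await asyncio.gather(add_car(name), get_car(name))
    return car


async def ordered(name):
    # Await the step the others depend on, then fan out the independent reads
    await add_car(name)
    return await asyncio.gather(get_car(name), get_car("unknown car"))
```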

References

1. How do you test APIs that use asynchronous communication?

2. Using asyncio.to_thread to create non blocking functions

3. pytest-asyncio

4. asyncio Task Groups

Hire testers from Qxf2

At Qxf2 Services, we make a dedicated effort to constantly evaluate our automation framework against new frameworks to identify and implement useful features. You can find our open-source framework on GitHub here – Qxf2 Page Object Model Framework.

Our expertise extends beyond maintaining our own framework: we have used various other frameworks at clients and even helped maintain a few of them. If you are looking for testers to help create automation tests using our framework or to maintain your own automation framework, contact Qxf2.

My expertise lies in engineering high-quality software. I began my career as a manual tester at Cognizant Technology Solutions, where I worked on a healthcare project. However, due to personal reasons, I eventually left CTS and tried my hand at freelancing as a trainer. During this time, I mentored aspiring engineers on employability skills. As a hobby, I enjoyed exploring various applications and always sought out testing jobs that offered a good balance of exploratory, scripted, and automated testing.

In 2015, I joined Qxf2 and was introduced to Python, my first programming language. Over the years, I have also had the opportunity to learn other languages like JavaScript and Shell scripting (if it can be called a language at all). Despite this exposure, Python remains my favorite language due to its simplicity and the extensive support it offers for libraries.

Lately, I have been exploring machine learning, JavaScript testing frameworks, and mobile automation. I’m focused on gaining hands-on experience in these areas and sharing what I learn to support and contribute to the testing community.

In my free time, I like to watch football (I support Arsenal Football Club), play football myself, and read books.