For a long time, Qxf2’s API tests were not really object-oriented. We were relying on (at best) a facade pattern or (at worst) the God class anti-pattern. Our colleague, David Schwartz of Secure Code Warrior, helped us make our API automation framework more object-oriented and easier to maintain. Thanks, Dex, for all the guidance, examples, code, code reviews and for helping us improve our API tests!

We have not yet managed to implement everything we learned from Dex. By no means are we claiming that this is a good, 'final' version! But we felt our API automation framework had improved enough to share at least a skeleton. We genuinely feel that you could benefit from seeing the code at this stage and improving on it from here.

For this example of a Player-Interface layer-based API automation framework, we have written a sample API application called cars_app.py using Python Flask, along with Python-based automation tests for it. That way, you can follow along easily.

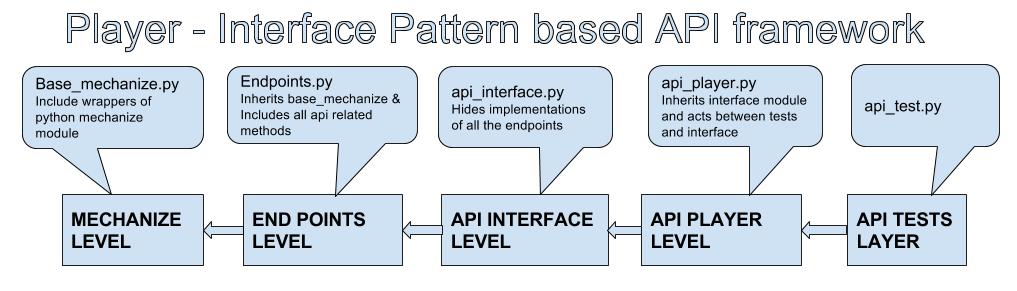

The following image represents the workflow of the framework.

We’ll spend the next few sections looking at each block along with a useful code snippet.

Base Mechanize Module

We use a Python module called Mechanize to make our REST calls. The Base Mechanize module includes wrappers around Mechanize for HTTP methods such as GET, POST, PUT and DELETE. These methods return the JSON response with the status code, or error details with the status code for further debugging. Requests is another popular Python library that many people prefer; you can easily replace the Mechanize wrappers with Requests wrappers.

Example code snippet:

```python
import json

import mechanize


# method of the Base_Mechanize class
def get(self, url, headers=None):
    "Mechanize GET request"
    browser = self.get_browser()
    response = {}
    error = {}
    # Mechanize expects headers as a list of (name, value) tuples
    browser.addheaders = list((headers or {}).items())
    try:
        response = browser.open(mechanize.Request(url))
        response = json.loads(response.read())
    except (mechanize.HTTPError, mechanize.URLError) as e:
        error = e
        if isinstance(e, mechanize.HTTPError):
            error_message = e.read()
            print("\n******\nGET Error: %s %s" % (url, error_message))
        else:
            print(e.reason.args)
        # bubble error back up after printing relevant details
        raise
    return {'response': response, 'error': error}
```
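If you prefer the Requests route, or simply need Python 3, the same wrapper idea can be sketched with only the standard library's `urllib.request`. `Base_Urllib` below is a hypothetical class, not part of Qxf2's framework. Unlike the Mechanize `get()` above, it does not re-raise HTTP errors: it returns the status code together with either the parsed JSON body or the server's error text, which is the contract described at the start of this section. Connection failures (a `URLError` that is not an `HTTPError`) still propagate to the caller.

```python
import json
import urllib.error
import urllib.request


class Base_Urllib:
    "Hypothetical stdlib-only stand-in for the Base_Mechanize wrappers (Python 3)"

    def _send(self, method, url, data=None, headers=None):
        "Send one HTTP request and normalise the outcome into a dict"
        if isinstance(data, str):
            data = data.encode("utf-8")  # urllib needs a bytes body
        request = urllib.request.Request(url, data=data, headers=headers or {}, method=method)
        try:
            with urllib.request.urlopen(request) as response:
                body = response.read().decode("utf-8")
                return {
                    "status_code": response.status,
                    "response": json.loads(body) if body else {},
                    "error": None,
                }
        except urllib.error.HTTPError as e:
            # 4xx/5xx: keep the status code and the server's message for debugging
            return {
                "status_code": e.code,
                "response": {},
                "error": e.read().decode("utf-8"),
            }

    def get(self, url, headers=None):
        return self._send("GET", url, headers=headers)

    def post(self, url, data=None, headers=None):
        return self._send("POST", url, data=data, headers=headers)

    def put(self, url, data=None, headers=None):
        return self._send("PUT", url, data=data, headers=headers)

    def delete(self, url, headers=None):
        return self._send("DELETE", url, headers=headers)
```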

ENDPOINTS LAYER

The endpoints layer abstracts the endpoints of the application under test. We create one endpoints/[$feature]_endpoint.py file per feature. Each [$feature]_endpoint.py file contains a class with methods for all the endpoints of that feature. For example, our cars_app.py application has an endpoint (/register) related to registration. The [$feature]_endpoint.py file should not contain any business logic or test logic. It should simply make REST calls to specific endpoints and return the data it sees. You can choose to return everything in the REST call's response, or reorganize the response to keep just the bits you want.

Example code snippet:

```python
""" API endpoints for Registration """
from Base_Mechanize import Base_Mechanize


class Registration_API_Endpoints(Base_Mechanize):
    "Class for registration endpoints"

    def registration_url(self, suffix=''):
        """Append API end point to base URL"""
        return self.base_url + '/register/' + suffix

    def register_car(self, url_params, data, headers):
        "register car"
        url = self.registration_url('car?') + url_params
        json_response = self.post(url, data=data, headers=headers)
        # the response object returned by post() still has to be read
        return {
            'url': url,
            'response': json_response['response'].read()
        }
```

INTERFACE LAYER

This layer is a composed interface of all the API endpoint classes. In our cars_app.py application we have features related to car details, registration and users.

Example code snippet:

```python
"""
A composed interface for all the API objects
Use the API_Player to talk to this class
"""
from Cars_API_Endpoints import Cars_API_Endpoints
from Registration_API_Endpoints import Registration_API_Endpoints
from User_API_Endpoints import User_API_Endpoints


class API_Interface(Cars_API_Endpoints, Registration_API_Endpoints, User_API_Endpoints):
    "A composed interface for the API objects"

    def __init__(self, url):
        "Constructor"
        # make base_url available to all API endpoints
        self.base_url = url
```

PLAYER LAYER

This is the layer where business logic and test context is maintained. The player layer does the following:

- API_Player serves as an interface between Tests and API_Interface

- Contains several useful wrappers around commonly used combinations of actions

- Maintains the test context/state

For this example, we are going to add a method get_cars() that gets session information, creates headers, makes a call to the API interface’s get_cars(), processes the result and passes it to whoever called it.

Example code snippet:

```python
import logging
from base64 import b64encode

# Framework modules; these import paths are assumptions, adjust them to your project layout
from API_Interface import API_Interface
from Results import Results


class API_Player(Results):
    "The class that maintains the test context/state"

    def __init__(self, url, log_file_path=None):
        "Constructor"
        super(API_Player, self).__init__(level=logging.DEBUG, log_file_path=log_file_path)
        self.api_obj = API_Interface(url=url)

    def set_auth_details(self, username, password):
        "encode auth details (Python 2: b64encode accepts a str)"
        b64login = b64encode('%s:%s' % (username, password))
        return b64login

    def set_header_details(self, auth_details=None):
        "make header details"
        if auth_details != '' and auth_details is not None:
            headers = {'content-type': 'application/json',
                       'Authorization': 'Basic %s' % auth_details}
        else:
            headers = {'content-type': 'application/json'}
        return headers

    def get_cars(self, auth_details=None):
        "get available cars"
        headers = self.set_header_details(auth_details)
        json_response = self.api_obj.get_cars(headers=headers)
        json_response = json_response['response']
        result_flag = json_response['successful'] is True
        self.write(msg="Fetched cars list:\n %s" % str(json_response))
        self.conditional_write(result_flag,
                               positive="Fetched cars",
                               negative="Could not fetch cars")
        return json_response
```
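The `set_auth_details` method above relies on Python 2, where `b64encode` accepts a `str`; in Python 3 it needs bytes. Below is a trimmed-down, hypothetical Python 3 player that keeps the same header logic and `get_cars` flow. `Stub_API_Interface` replaces the real `API_Interface`, and a plain `results` list stands in for the framework's `Results` logging, so the sketch runs on its own.

```python
from base64 import b64encode


class Stub_API_Interface:
    "Hypothetical stand-in for API_Interface: returns a canned cars response"

    def get_cars(self, headers):
        return {"response": {"successful": True, "cars_list": [], "headers_seen": headers}}


class API_Player:
    "Trimmed-down player: builds headers, calls the interface, records the outcome"

    def __init__(self, api_obj):
        self.api_obj = api_obj
        self.results = []  # simple stand-in for Results.conditional_write()

    def set_auth_details(self, username, password):
        "Encode 'username:password' for HTTP Basic auth (str -> bytes -> str)"
        return b64encode(("%s:%s" % (username, password)).encode("utf-8")).decode("ascii")

    def set_header_details(self, auth_details=None):
        "Always send JSON; add Basic auth only when credentials are given"
        headers = {"content-type": "application/json"}
        if auth_details:
            headers["Authorization"] = "Basic %s" % auth_details
        return headers

    def get_cars(self, auth_details=None):
        "Fetch cars and record whether the call was successful"
        headers = self.set_header_details(auth_details)
        json_response = self.api_obj.get_cars(headers=headers)["response"]
        result_flag = json_response.get("successful") is True
        message = "Fetched cars" if result_flag else "Could not fetch cars"
        self.results.append((message, result_flag))
        return json_response
```

Injecting the interface object through the constructor, instead of building it from a URL, is what makes it easy to swap in a stub like this one.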

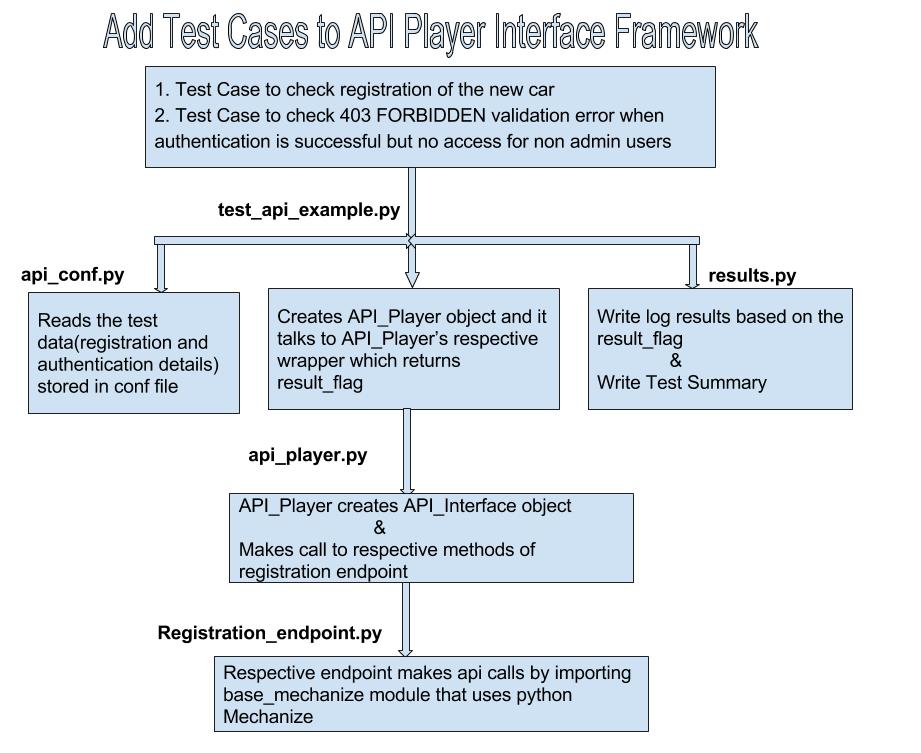

Examples of adding a few API test cases to a Player-Interface pattern-based framework

In this section, we’ll show you examples of writing a couple of tests.

- Test case to check registration of a new car

- Test case to check the validation error for invalid authentication details

Test case code snippets

```python
# test_obj is an API_Player instance; conf holds the test data

# add cars
car_details = conf.car_details
result_flag = test_obj.add_car(car_details=car_details,
                               auth_details=auth_details)
test_obj.log_result(result_flag,
                    positive='Successfully added new car with details %s' % car_details,
                    negative='Could not add new car with details %s' % car_details)

# test for validation http error 403
result = test_obj.check_validation_error(auth_details)
test_obj.log_result(not result['result_flag'],
                    positive=result['msg'],
                    negative=result['msg'])
```
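The test snippet only calls `test_obj.log_result()` and lets the framework keep score. For readers without the framework, a hypothetical minimal `Result_Tally` class shows the idea: each check is counted as a pass or a fail and the matching message is kept. It is not Qxf2's actual `Results` class. Note that in the second check above, `not result['result_flag']` makes the step pass whenever access is *not* granted, that is, for any outcome other than a 200.

```python
class Result_Tally:
    "Hypothetical minimal stand-in for the framework's result logging"

    def __init__(self):
        self.passed = 0
        self.failed = 0
        self.messages = []

    def log_result(self, flag, positive="", negative=""):
        "Record one check: count it and keep the message that applies"
        if flag:
            self.passed += 1
            self.messages.append("PASS: " + positive)
        else:
            self.failed += 1
            self.messages.append("FAIL: " + negative)

    def summary(self):
        "One-line summary for the end of a test run"
        total = self.passed + self.failed
        return "%d/%d checks passed" % (self.passed, total)
```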

API_Player

```python
import json
import urllib  # Python 2; in Python 3 use urllib.parse.urlencode

# conf is the framework's test-data configuration module (import omitted here)


def register_car(self, car_name, brand, auth_details=None):
    "register car"
    url_params = {'car_name': car_name, 'brand': brand}
    url_params_encoded = urllib.urlencode(url_params)
    data = conf.customer_details
    headers = self.set_header_details(auth_details)
    json_response = self.api_obj.register_car(url_params=url_params_encoded,
                                              data=json.dumps(data),
                                              headers=headers)
    response = json.loads(json_response['response'])
    result_flag = response['registered_car']['successful'] is True
    return result_flag


def check_validation_error(self, auth_details=None):
    "verify validation error 403"
    result = self.get_user_list(auth_details)
    user_list = result['user_list']
    response_code = result['response_code']
    result_flag = False
    msg = ''

    # verify result based on user list and response code
    if user_list is None and response_code == 403:
        msg = "403 FORBIDDEN: Authentication successful but no access for non admin users"
    elif response_code == 200:
        result_flag = True
        msg = "successful authentication and access permission"
    elif response_code == 401:
        msg = "401 UNAUTHORIZED: Authenticate with proper credentials OR Require Basic Authentication"
    elif response_code == 404:
        msg = "404 NOT FOUND: URL not found"
    else:
        msg = "unknown reason"

    return {'result_flag': result_flag, 'msg': msg}
```
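The `if`/`elif` chain in `check_validation_error()` maps status codes to messages. The same mapping can live in a dictionary, so adding a new status code becomes a one-line change. This is a sketch with a hypothetical `classify_user_list_response()` helper that receives the `user_list` and `response_code` values returned by `get_user_list()`. As in the original, 403 counts as the expected "forbidden" case only when no user list came back; otherwise it falls through to "unknown reason".

```python
# Status code -> (result_flag, message), copied from check_validation_error()
STATUS_MESSAGES = {
    200: (True, "successful authentication and access permission"),
    401: (False, "401 UNAUTHORIZED: Authenticate with proper credentials OR Require Basic Authentication"),
    404: (False, "404 NOT FOUND: URL not found"),
}

FORBIDDEN_MSG = "403 FORBIDDEN: Authentication successful but no access for non admin users"


def classify_user_list_response(user_list, response_code):
    "Hypothetical table-driven version of the checks in check_validation_error()"
    if user_list is None and response_code == 403:
        return {"result_flag": False, "msg": FORBIDDEN_MSG}
    result_flag, msg = STATUS_MESSAGES.get(response_code, (False, "unknown reason"))
    return {"result_flag": result_flag, "msg": msg}
```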

Running Tests

There are two steps to run this test:

- Start the cars application

- Run the test script

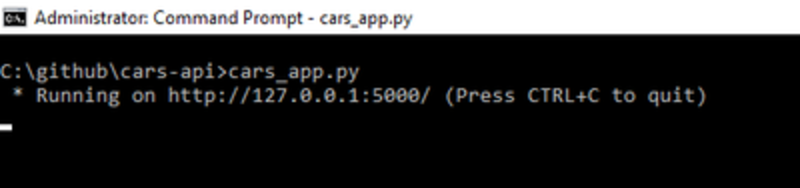

1. Start the cars application

Navigate to the directory that contains your cars_app.py file and run `python cars_app.py`.
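If the test script starts before the Flask app is listening, every call fails with a connection error. A small, hypothetical `wait_for_app()` helper can poll the port first. It assumes the app listens on `127.0.0.1:5000`, the Flask development server's default address; adjust the host and port if cars_app.py is configured differently.

```python
import socket
import time


def wait_for_app(host="127.0.0.1", port=5000, timeout=10.0, interval=0.5):
    "Return True once a TCP connection to host:port succeeds, False after timeout seconds"
    deadline = time.monotonic() + timeout
    while True:
        try:
            # a successful connect means something is listening on the port
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

A test script could call `wait_for_app()` at start-up and exit with a clear message when it returns False. The check only proves that a port is open, not that the cars API is healthy.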

2. Run the test script

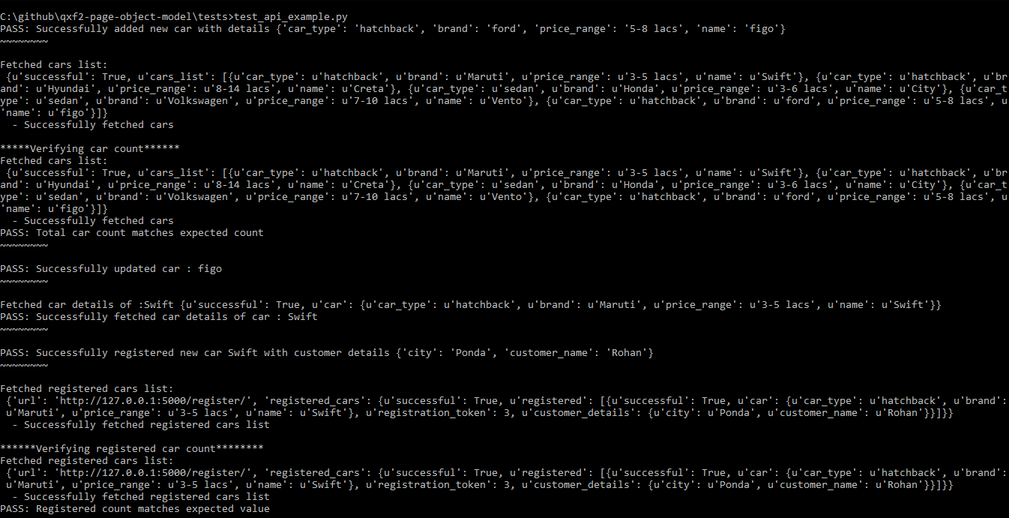

```
qxf2-page-object-model\tests>python test_api_example.py
```

If things go well, you should see output similar to the screenshot above.

Code is available on GitHub

1. Qxf2’s test automation framework: https://github.com/qxf2/qxf2-page-object-model

2. The cars application: https://github.com/qxf2/cars-api


I have around 10 years of experience in QA. Working in the USA for about 3 years directly with different clients, as an onsite coordinator, added great experience to my QA career. It was a great opportunity to work with reputed clients like E*Trade Financial Services, Bank of New York Mellon, Key Bank, Thomson Reuters & Pascal Metrics.

Besides experience in functional, automation, performance, API & database testing, with a good understanding of QA tools, processes and methodologies, working in QA helped me gain domain experience in trading, banking & investments, and health care.

My prior experience is with large companies like Cognizant & HCL as a QA lead. I'm glad I chose to work for a startup because of the learning curve and the flat structure, which gives me the freedom to work closely with higher-level management and an opportunity to see their thinking patterns and work culture.

My strengths are analysis, a keen eye for detail, and learning very fast. On a personal note, my hobbies are listening to music, organic farming and taking care of cats & dogs.

Thanks for the detailed steps on API automation, Rajeswari. I tried installing Mechanize on Python 3.6 and got an error saying it is not compatible. Can you please confirm whether you are running the above code on Python 2.x? Since Mechanize doesn't support Python 3.x, I'm just curious to know how you are using it for your API automation.

Hi Pradeep,

Yes, we run the above code in Python 2.x. We are in the process of migrating the code to Python 3, and a possible replacement for Mechanize would be the requests module, as it is Python 3 compatible.

Hi Rohan,

I like your framework and would like to give it a try, but I also have the problem that Mechanize is only for Python 2. Do you have any update on that?

Thanks a lot

Hi Chun Ji,

Mechanize will be available for Python 3 too, this month. For now, you can refer to the branch here – https://github.com/qxf2/qxf2-page-object-model/tree/Python2-Python3.

Thanks

Smitha

Hi Rohan, has this been migrated to Python 3? Did you migrate to the requests library?

Hi,

Yes, we are using requests library for Python 3. You can find the details here – https://qxf2.com/blog/qxf2-automation-testing-framework-supports-python-3/

Thanks Rohan for the quick response!! 🙂

My 2 cents.

Regarding how to judge whether an API call succeeded: should that judgement be made at the individual test case level instead of at the "API Player" level? Let's say my sequence is "GET" => "PUT" => "GET"; the responses from the first and the second GET would be different. The "API Player" may do some general checking, but not the specific pieces.

Thanks,

Jack

Thank you, Chun Ji. That is a valid suggestion, but we prefer not to implement it, because we have a good reason for doing it this way.

For example, let's say the API Player contains a GET method called 'get_doc_details' with the condition below.

Imagine that the API tests use the above GET call in multiple test steps. Later on, suppose there is a change in the API call condition:

result['successful'] instead of result['success']. The current design saves us from updating the condition across multiple steps of different test cases; updating it only in the API Player is enough.
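The argument in this reply can be sketched in a few lines: when every test step goes through one player method, a renamed response field is fixed in one place. The `get_doc_details` name comes from the comment; the `Doc_API_Player` class, its `_is_successful` helper and the fake response function are hypothetical.

```python
class Doc_API_Player:
    "Hypothetical player: the success condition lives in exactly one method"

    SUCCESS_KEY = "successful"  # was "success" before the (hypothetical) API change

    def __init__(self, fetch_doc):
        self.fetch_doc = fetch_doc  # callable standing in for the interface layer

    def _is_successful(self, result):
        "The single place that knows how the API reports success"
        return result.get(self.SUCCESS_KEY) is True

    def get_doc_details(self, doc_id):
        "What every test step calls; tests never inspect the raw field themselves"
        result = self.fetch_doc(doc_id)
        return {"result_flag": self._is_successful(result), "details": result}


def fake_fetch_doc(doc_id):
    "Pretend API response after the field was renamed"
    return {"successful": True, "doc_id": doc_id}
```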