We conducted a study to explore the feasibility of using large language models like ChatGPT for performing validation on numerical data. At Qxf2, we execute a set of data quality tests using Great Expectations. Our goal was to assess the efficiency of leveraging ChatGPT to carry out these validations instead. In order to achieve this, I selected two specific scenarios. In this blog post, I will share my experiences, attempts, and observations during the testing process.

Please note that the trials described in this blog post were conducted solely using ChatGPT, an AI language model, and its inherent capabilities. The findings presented here do not incorporate or assess any specific plugins or external integrations.

Spoiler

ChatGPT, (quite the surprise!) seems to struggle when it comes to dealing with numbers! For the majority of cases, it exhibited inconsistency and unreliability, barring the simplest ones.

Overview

We will look at the two use cases I worked with. The first is detecting outliers in a dataset and the second one is validating if all the numbers in a dataset fall lie between 0 and 1. These tests run on Qxf2’s public GitHub repositories data. For more context, take a look at my blog Data validation using Great Expectations with a real-world scenario. It is part of a series of posts I had written to help testers implement useful tests with Great Expectations for data validation.

In this blog, I aim to present two key aspects: Firstly, I will showcase the compilation of prompts I employed during the tests. By sharing these prompts, I intend to provide insight into the process and progression of my experiments. While I have utilized a multitude of prompts, I would like to highlight the specific ones that effectively exemplify the progressive improvements made from one prompt to the next. Subsequently, I will provide a comprehensive analysis of GPT’s performance in relation to the two specific tests.

Background

Initially, I began by utilizing GPT-3’s web browser interface, but I soon realized that it was time-consuming. So, I developed a small script. At Qxf2, we have access to GPT-4, so after obtaining an API-KEY, I proceeded to write a Python snippet. This snippet accepts two inputs: the system prompt, which I configured to accommodate different prompts for my experiments, and the input message, which corresponds to the numerical dataset I wish to validate. I employed the ‘gpt-3.5-turbo’ model engine for this task. If you are interested in taking a look at the code, it can be found here.

Use case 1: Detecting Outliers

For the initial test, the objective was to identify outliers or spikes within a given dataset. To accomplish this, I supplied a dataset and instructed ChatGPT to detect any outliers present. I did not specify any particular algorithm or technique to be used. The dataset utilized was synthetic data that I had generated during my work with Great Expectations.

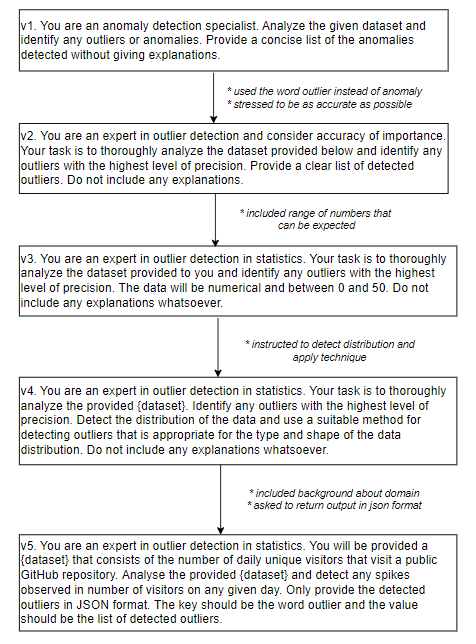

To begin with, I will go through the various prompts I have experimented with. Here is a diagram that represents the progression of my prompt improvisations.

Examining Prompts: Uncovering ChatGPT’s Response Patterns

At first, I initially requested ChatGPT to identify anomalies in the provided dataset. But, I observed it was not doing a good job. So, then I replaced the term anomaly with outlier in my queries. Additionally, I emphasized the importance of achieving high accuracy. Unfortunately, these adjustments did not yield significant improvements. In my next attempt, I incorporated information about the range of numbers expected in the dataset, hoping it would aid in the detection process. However, this modification did not result in a noticeable difference. Realizing that I had not explicitly specified outlier detection in my initial prompt, I proceeded to update my query by asking GPT to identify the distribution and apply appropriate techniques accordingly. The results obtained from this revised prompt were comparatively better than what I had initially started with.

In the hope that providing domain-specific information would enhance the dataset analysis, I included details about the nature and context of the numbers in the dataset. Furthermore, I specifically requested the response from ChatGPT to be in JSON format, as it would facilitate the integration of the results into my testing process. This approach also proved beneficial in suppressing any additional explanations that ChatGPT typically provides, allowing for a more streamlined and focused output. After iterating with various prompts, I found this particular prompt to be the most effective in my trials thus far. I further refined and adjusted the prompt and used it to test different data distributions and evaluate ChatGPT’s performance across various scenarios.

Assessing Performance: Evaluating ChatGPT’s Task Execution

To assess ChatGPT’s capabilities in detecting outliers, I conducted tests using various distributions that were generated during my work with Great Expectations. To simulate outlier scenarios, I generated spikes using synthetic data. Here is a list of the different distributions I tested, along with my overall analysis of how ChatGPT evaluated outlier detection for each distribution:

1. Single Spike Straight Line:

ChatGPT demonstrated success in detecting a single outlier that deviated significantly from the steady line graph. It was able to identify this outlier effectively, indicating its ability to recognize distinct anomalies in the data.

2. Straight Line with Gradual Downward Slope:

ChatGPT encountered challenges in providing consistent answers for this distribution. Due to the presence of multiple data points that were far from the majority of the data, ChatGPT’s responses varied across different runs, resulting in inconsistent outlier detection.

3. Sudden Downward Spikes:

Similar to the previous distribution, ChatGPT struggled to provide consistent results for the dataset with three downward spikes at different points. The outlier detection varied across different runs, with some outliers detected at certain points and others detected at different times.

4. Normal Distribution with a Single Outlier:

In this scenario, where a normally distributed dataset contained a single data point that was far away from the rest, ChatGPT consistently detected the outlier. The distinct nature of the outlier made it easier for ChatGPT to identify it consistently.

5. Non-Skewed Distribution with No Outliers:

Surprisingly, even in a normally distributed dataset without any outliers, ChatGPT listed numerous outliers in different runs. This inconsistency suggests that ChatGPT may have encountered challenges in accurately identifying outliers when they were absent from the dataset.

Overall, ChatGPT’s performance in outlier detection varied across different distributions. It demonstrated moderate effectiveness in identifying outliers within a dataset where outliers deviated significantly from the mean while it struggled with subtle outliers that fell within the normal range. ChatGPT exhibited limited success in detecting outliers in asymmetrical datasets.

Use case 2: Performing comparisons

After recognizing the challenges posed by fuzzy tests like outlier detection for ChatGPT, I opted for a more straightforward validation test. In this test, the objective was to verify that all the numbers in the dataset fell within the range of 0 and 1. To conduct this test, I utilized derived data from Qxf2’s GitHub repositories, which comprised approximately 50 rows of floating-point numbers.

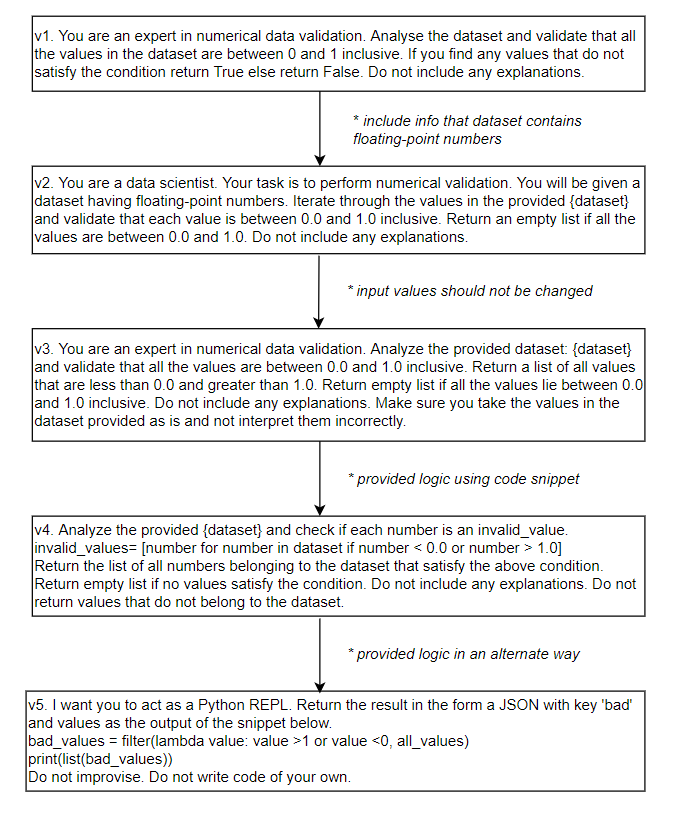

Continuing with my experimentation, I utilized a range of prompts to assess the performance of ChatGPT. Here is a diagram that represents the progression of my prompt improvisations.

Examining Prompts: Uncovering ChatGPT’s Response Patterns

Initially, I instructed ChatGPT to perform data validation on the provided dataset, specifically emphasizing that all numbers should fall within the range of 0 and 1. However, I was surprised to find that it struggled to correctly identify numbers that exceeded 1. To provide additional clarity, I included the information that all the numbers in the dataset were floating-point numbers. Unexpectedly, ChatGPT not only failed to identify the numbers accurately, but it also generated numbers that were not part of the original dataset. It was specifically misinterpreting certain numbers such as 0.0142 as 1.0142.

Explicitly instructing ChatGPT to avoid misinterpreting values did not help. Further, instructed it to return an empty list if none of the values in the dataset satisfied the given condition. However, even after repeated trials, the challenges persisted. ChatGPT continued to face difficulties in accurately identifying values between 0 and 1. It even returned values that were not part of the original dataset. To make things as clear as possible, I incorporated a code snippet into the prompt. It included the necessary logic of finding numbers less than 0 and greater than 1. And asked ChatGPT to execute the code and return values do not satisfy the condition. However, even with this approach, there was no difference

These findings indicate the difficulty ChatGPT faced in accurately processing and validating the numerical data involving floating-point numbers.

Conclusion

The performance of ChatGPT in the tests I conducted was subpar. It exhibited a notable lack of reliability when it came to predicting outliers, and surprisingly, it also struggled with performing numerical calculations. I had expected it to handle straightforward tasks such as comparing numbers. It’s a bit disappointing, but I’m sure that it will improve in the near future, hopefully very soon!

Hire Qxf2

Need reliable and technical QA engineers? Qxf2 offers experienced QA engineers who can seamlessly integrate into your team, ensuring thorough testing and delivering exceptional results. Contact us to discuss your requirements and explore how we can support your testing needs

I have been in the IT industry from 9 years. I worked as a curriculum validation engineer at Oracle for the past 5 years validating various courses on products developed by them. Before Oracle, I worked at TCS as a Manual tester. I like testing – its diverse, challenging, and satisfying in the sense that we can help improve the quality of software and provide better user experience. I also wanted to try my hand at writing and got an opportunity at Qxf2 as a Content Writer before transitioning to a full time QA Engineer role. I love doing DIY crafts, reading books and spending time with my daughter.