A lot of research and development is going into producing self-driving cars, with Google and Tesla playing major roles. We read many articles to figure out what all the fuss was about, and before we knew it, we were hooked. We even tried to imagine where we, as testers, could play a role. We ended up building a configurable pothole (more on that later) that developers could use to sanity-check their pothole detection algorithms. We are going to share what we learned and built in this four-part series. Here is a preview of our work:

In this post, we summarize the key ideas and technologies we read about: how self-driving cars seem to work, the different types of sensors they use, and some things self-driving cars still find hard to handle. We’ll also give you a glimpse of our thought process behind choosing to build a configurable pothole.

How self-driving cars work

A self-driving car is a robotic vehicle designed to drive safely without a human driver. It is also known as an autonomous or driverless car. It uses a combination of sensors and software to control, navigate and drive the car. Google, Tesla, Uber and Nissan are among the major companies that have developed self-driving technologies.

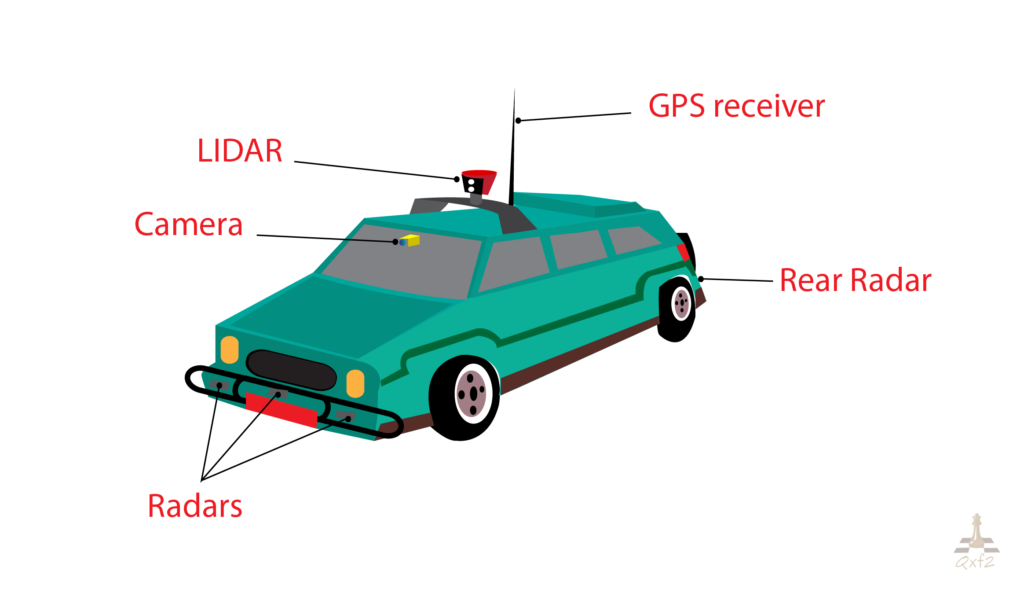

Once a user provides the destination, the self-driving car’s software uses GPS and motion sensors to determine the car’s exact location and calculate the route. To ‘see’, companies use a combination of cameras, LiDARs (Light Detection and Ranging), radars and detailed terrain knowledge. A roof-mounted rotating LiDAR (essentially radar that uses light instead of radio waves) monitors a 60 to 100 meter range around the car and creates a dynamic 3D map of the surrounding environment. Radar sensors placed on the front and rear bumpers calculate the distance to obstacles, which is helpful for the cruise control system. Along with LiDARs and radars, these cars use cameras to detect traffic signals and moving objects on the road. The outputs of all these sensors are fed to the processor, which combines them with hard-coded rules, obstacle avoidance algorithms and predictive modeling to plot a path. It then sends instructions to the vehicle’s actuators, which handle steering, acceleration and braking.
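To make the idea of a 3D map more concrete: a rotating LiDAR typically reports each return as a range plus the beam’s horizontal (azimuth) and vertical (elevation) angles, and those can be converted into 3D points. Below is a minimal sketch, assuming a sensor-centred frame with x forward, y left and z up (real sensors document their own conventions). The `LidarReturn` type and `to_point_cloud` function are hypothetical names for illustration, not part of any sensor SDK, and the 100 m cut-off simply mirrors the range mentioned above.

```python
import math
from dataclasses import dataclass


@dataclass
class LidarReturn:
    """One hypothetical LiDAR measurement in sensor-centred spherical coordinates."""
    range_m: float        # distance to the reflecting surface, in metres
    azimuth_deg: float    # horizontal angle: 0 = straight ahead, positive = to the left
    elevation_deg: float  # vertical angle: 0 = horizontal, positive = upwards


def to_point_cloud(returns, max_range_m=100.0):
    """Convert returns to (x, y, z) points, dropping anything beyond max_range_m.

    Assumed frame: x forward, y left, z up, origin at the sensor.
    """
    points = []
    for r in returns:
        if r.range_m > max_range_m:
            continue  # outside the range this sketch keeps
        az = math.radians(r.azimuth_deg)
        el = math.radians(r.elevation_deg)
        horizontal = r.range_m * math.cos(el)  # projection onto the horizontal plane
        points.append((horizontal * math.cos(az),
                       horizontal * math.sin(az),
                       r.range_m * math.sin(el)))
    return points


sweep = [LidarReturn(10.0, 0.0, 0.0),
         LidarReturn(20.0, 90.0, 0.0),
         LidarReturn(150.0, 45.0, 0.0)]
for p in to_point_cloud(sweep):
    print(tuple(round(c, 3) for c in p))
```

For the sample sweep, the script prints one point 10 m straight ahead and one 20 m to the left; the 150 m return is discarded. Repeating this for every return in every rotation is what builds up the continuously updated 3D picture around the car.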

Google seems to be championing the use of LiDAR, while Tesla, as of now, is choosing not to use it. LiDARs are costly today, but we think (we are extremely new to this area, so take our statements with a pinch of salt) that LiDAR will become cheaper and will be the technology that wins this race.

LiDAR and LiDAR Types

LiDAR stands for Light Detection and Ranging. Its working principle is similar to that of radar. The main difference is that radar mostly provides the distance to an object, whereas LiDAR provides the shape and size of the object along with its distance. LiDAR is used to measure the distance of objects relative to the position of the car in 3D. There seem to be different types of LiDAR available in the market. Based on our reading, these are the popular types:
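The ranging part of that principle comes down to simple arithmetic. For a pulsed time-of-flight LiDAR, the sensor measures how long a laser pulse takes to reach an object and bounce back, so the one-way distance is d = c × t / 2, where c is the speed of light and t the round-trip time. The sketch below only illustrates that formula; `distance_from_round_trip` is a hypothetical helper, not a real sensor API.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact value, by definition of the metre


def distance_from_round_trip(round_trip_s: float) -> float:
    """Return the one-way distance (m) for a pulse that came back after round_trip_s seconds."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels out and back, so halve the total path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2


# A return after about 667 nanoseconds corresponds to roughly 100 m.
print(round(distance_from_round_trip(667e-9), 1))
```

The nanosecond scale shows why LiDAR needs very precise timing: an error of just 1 ns in the measured round trip shifts the computed distance by about 15 cm.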

- Airborne LiDAR: Laser light is emitted from an aircraft toward the ground and reflected back to the moving sensor. There are two types of airborne LiDAR:

  - Topographic LiDAR (used to derive ground surface models)

  - Bathymetric LiDAR (used to establish water depths and shoreline elevations)

- Terrestrial LiDAR: Ground-based sensors collect very dense and highly accurate points, which allows precise identification of objects. There are two main types of terrestrial LiDAR:

  - Mobile LiDAR (used to analyze road infrastructure and locate encroaching overhead wires, light poles, and road signs near roadways or rail lines)

  - Static LiDAR (used for engineering, mining, surveying, and archaeology)

Challenges for self-driving cars

Here we have summarized a few things that self-driving cars still can’t handle well.

- Struggle to drive on bridges: Bridges don’t have many environmental cues like surrounding buildings, so it’s hard for the car to figure out where it is on the map.

- Bad Weather: Driverless cars stay in their lanes by using cameras that track lines on the pavement, but they can’t do that if the road has a coating of snow. Heavy snow and rain also make it harder for LiDAR sensors and cameras to identify objects. Mercedes-Benz already offers a car with 23 sensors that detect guardrails, barriers, oncoming traffic and roadside trees to keep the vehicle in its lane even on roads with no white lines. But adding more sensors increases system complexity and the price of the car.

- Hard to drive in cities: Cities are a mess of pedestrians, cars, potholes and traffic cones. Driverless cars have a lot to keep track of, and it is easy to miss something. GPS can also struggle to pinpoint the car’s location accurately in cities.

- No communication between vehicles: A driverless car cannot interact with other drivers for guidance. This calls for vehicle-to-vehicle (V2V) communication, similar to how airplanes avoid each other in the air.

- Hard to take tough decisions: Suppose the driverless car is travelling at high speed and a child suddenly steps in front of it. The car has only two options: hit the child, or swerve right and hit a light pole. In both cases, someone is likely to be injured. Should the driverless car give priority to the pedestrian or the passengers? There is no concrete solution to this kind of situation.

- Mapping Roads: Driverless cars need highly detailed maps, and very few roads have been mapped to this degree. Moreover, maps can become out of date as road conditions change.

- Potholes: Identifying potholes is tough. They lie below the road surface, not above it. A dark patch on the road ahead could be a pothole, an oil spot, a puddle, or even a filled-in pothole.
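Because a pothole lies below the surface, depth data can help separate cases that may look alike to a camera: an oil spot or a properly filled-in pothole sits level with the road, whereas an open pothole dips below it. The toy sketch below assumes we already have a 1D height profile of the road ahead (heights in metres relative to the expected road surface, sampled at a fixed spacing) and flags any run of samples deeper than a threshold that is also wide enough. The function name, the 3 cm depth threshold and the 20 cm minimum width are hypothetical choices for illustration; a real detector would work on noisy 3D data and be far more involved.

```python
from typing import List, Tuple


def find_potholes(heights_m: List[float], spacing_m: float,
                  depth_threshold_m: float = 0.03,
                  min_width_m: float = 0.2) -> List[Tuple[float, float, float]]:
    """Return (start_m, width_m, max_depth_m) for each dip that looks like a pothole.

    heights_m holds road heights relative to the expected road level
    (0.0 = level road, negative = below the surface), one sample every spacing_m metres.
    """
    potholes = []
    start = None
    for i, h in enumerate(heights_m + [0.0]):  # trailing level sample closes a final dip
        below = h < -depth_threshold_m
        if below and start is None:
            start = i  # a dip begins
        elif not below and start is not None:
            width = (i - start) * spacing_m
            if width >= min_width_m:  # ignore dips too narrow to matter
                depth = -min(heights_m[start:i])
                potholes.append((start * spacing_m, width, depth))
            start = None
    return potholes


# Level road, a nearly flat 2 mm dip (like a dark patch), then a 5-sample, 8 cm deep dip.
profile = ([0.0] * 5 + [-0.002] * 3 + [0.0] * 4
           + [-0.05, -0.08, -0.08, -0.06, -0.04] + [0.0] * 5)
for start, width, depth in find_potholes(profile, spacing_m=0.1):
    print(f"pothole at {start:.1f} m, {width:.1f} m wide, {depth * 100:.0f} cm deep")
```

Only the deep dip is reported; the shallow patch stays below the threshold. A camera alone cannot make this distinction, which is part of why the problem is hard.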

To learn more about the above challenges, refer to references 9 and 10 at the end of this post.

Why did we decide to build a configurable pothole?

We are testers, so we look at these issues from a testing point of view. We don’t have a driverless car or any sensors to test with. Still, we want to contribute. We believe algorithm developers will be looking for ways to rapidly sanity-test their changes before running them through detailed and rigorous tests. That means we need to come up with testing solutions that fit on a developer’s workbench and allow a range of tests to be performed. It also means we need to keep the cost of such testing solutions low enough that every developer could be equipped with a testing setup.

We chose potholes because they are a common sight on our (Indian) roads, and a lot of accidents happen just because of potholes. So pothole detection is an important problem that we think every autonomous car developer has to solve. Imagine you are a developer writing algorithms for pothole detection. Ideally, you would like to test your algorithm against a number of potholes of different sizes and shapes. So we decided to develop a configurable pothole. To know more about the configurable pothole, please check out our next blog post here.
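The idea of testing one algorithm against many pothole sizes and shapes can be sketched as a simple test matrix. Assuming a configurable pothole exposes a few adjustable parameters (the width, depth and shape values below are hypothetical, not the actual design), a developer could enumerate every combination up front and run their detector once per configuration. `PotholeConfig` and `build_test_matrix` are illustrative names only.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class PotholeConfig:
    """One hypothetical setting of a configurable pothole."""
    width_cm: int
    depth_cm: int
    shape: str


def build_test_matrix(widths_cm, depths_cm, shapes):
    """Return every combination of the given parameter values as PotholeConfig objects."""
    return [PotholeConfig(w, d, s) for w, d, s in product(widths_cm, depths_cm, shapes)]


matrix = build_test_matrix(widths_cm=[20, 40, 60], depths_cm=[2, 5, 10],
                           shapes=["round", "oval"])
print(len(matrix))  # 3 widths x 3 depths x 2 shapes = 18 configurations
for config in matrix[:3]:
    print(config)
```

Each configuration would then be set on the physical pothole and the detector’s output compared against what is expected. Listing the whole grid in advance makes it easy to spot which sizes or shapes a code change starts to miss.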


References:

We read a lot to learn more about self-driving cars. Here are some of the best articles that helped us:

- Google’s self-driving car project

- How Google’s self-driving car works

- How Google’s self-driving cars are tested

- Self-Driving Cars: The Good, The Bad, and The Future

- Volvo reveals how its driverless cars will work

- The Google driverless car can repair itself on its own

- Diagnosis and repair for autonomous vehicles

- The ‘Ultra Puck’ will help with real-time, 3D mapping

- Leddar Sensors Road Test for ADAS and Autonomous Driving

- 5 Things That Give Self-Driving Cars Headaches

- 6 scenarios self-driving cars still can’t handle

- Self-Driving Cars Won’t Work Until We Change Our Roads and Attitudes

- Types of LiDAR

- Top Companies in the Global Automotive LiDAR Sensors Market

- LiDAR Innovations

I love technology and learning new things. I explore both hardware and software. I am passionate about robotics and embedded systems, which motivates me to develop my software and hardware skills. I have good knowledge of Python, Selenium, Arduino, C and hardware design. I have developed several robots and participated in robotics competitions. I am constantly exploring new test ideas and test tools for software and hardware. At Qxf2, I am working on developing hardware tools for automated tests à la Tapster. Incidentally, I created Qxf2’s first robot. Besides testing, I like playing cricket and badminton, and developing embedded gadgets for fun.