Qxf2 was intrigued by the rising trend of LLMs, and we decided to venture beyond ChatGPT. With Auto-GPT’s growing popularity and the widespread claims about it, we were eager to explore its capabilities. My background as an engineer left me well equipped to dive into the intricacies of Auto-GPT, and as a curious tester, I wanted to get a sense of how we could leverage it.

Why this post?

This blog post is not a comprehensive technical guide or an in-depth tutorial on setting up and using Auto-GPT. Numerous blog posts can help you with the setup, and the official documentation has detailed instructions. Instead, our aim is to share our own experience and highlight some configuration features that we found useful and that should benefit anyone new to Auto-GPT.

Our use case

We opted to run Auto-GPT from its Docker image, which proved convenient and efficient. Once the setup was complete, we started writing code and running API tests with Auto-GPT. We were impressed by how well it understood code blocks and generated tests when given clear instructions. However, we wanted to dig deeper into how Auto-GPT uses agents for self-prompting, so we chose a slightly more demanding task: one that promised practical benefits and would also deepen our understanding of the system.

We want to (eventually) publish blog posts in German, so we chose the task of translating one of our blog posts into German. This let us explore Auto-GPT’s capabilities while also moving us toward our goal of multilingual content.

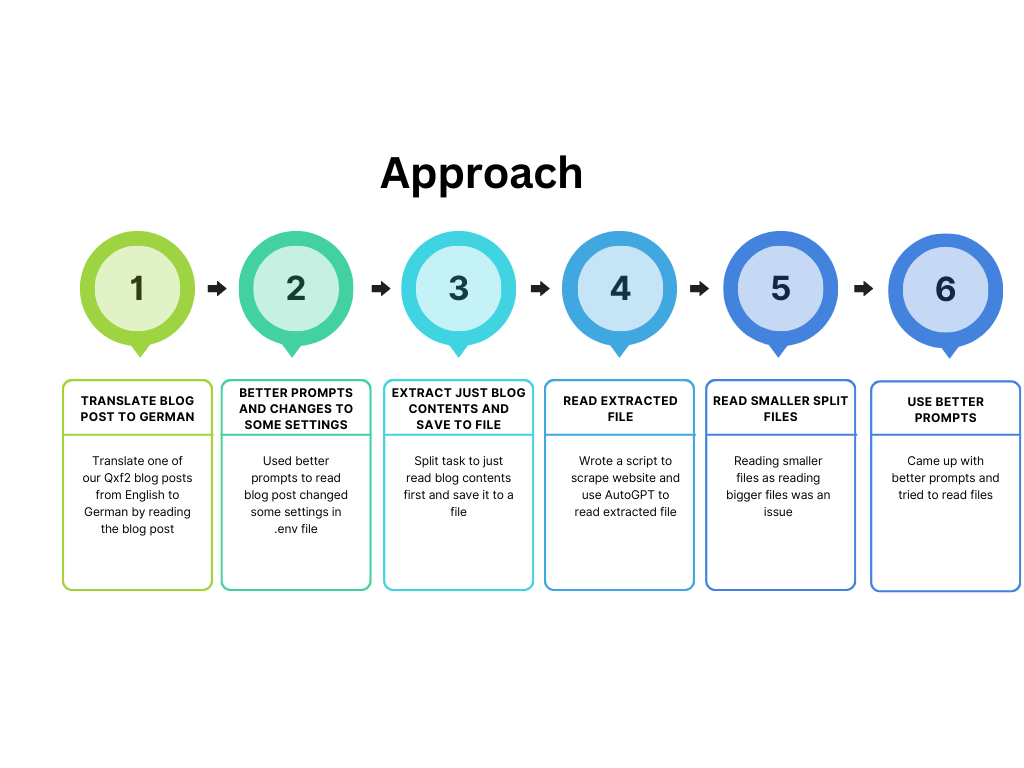

Approach

To be more specific, our objective was to use Auto-GPT to read a blog post and translate it into German. We intended to save the translated file to our local system so that we could create and publish the post using WordPress. By incorporating Auto-GPT into our workflow, we aimed to streamline translation and make publishing multilingual content on our WordPress site seamless.

We tried different approaches throughout our exploration of Auto-GPT and adapted our expectations as we learned more about its limitations. Each trial listed below builds on what we learned from the previous ones. Along the way, we picked up configuration insights that should help technical newcomers to this domain. By sharing these learnings, we hope to help others diving into the world of Auto-GPT.

Trial 1: Accurately translate one of the Qxf2 blog posts into German while maintaining the original meaning and tone of the content.

Once we set this objective, the goals Auto-GPT came up with were good. However, the actions it took based on its thoughts and plan did not seem right. Some of those actions were:

* Analyzing code blocks without browsing to the URL and reading the blog post.

* Looking for analysis tools like pylint or flake8 to improve code quality.

* Looking for a translation service and trying to translate the blog with it.

These steps might suit the publication of a new blog post, but our objective was not to improve the quality of our code. We wanted Auto-GPT to handle only the translation.

Learnings: The task appeared challenging for Auto-GPT, and redirecting it proved difficult. So we decided to improve the prompt and try again. We also learned that code analysis can be disabled with the following setting in the .env file:

```
DISABLED_COMMAND_CATEGORIES=autogpt.commands.analyze_code
```
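When juggling several .env tweaks, it helps to check which command categories a given file actually disables. A minimal sketch, assuming the file holds plain KEY=VALUE lines and that DISABLED_COMMAND_CATEGORIES is a comma-separated list of command module names (our understanding of how Auto-GPT reads it); `read_env` and `disabled_categories` are hypothetical helpers, not part of Auto-GPT:

```python
from pathlib import Path


def read_env(text: str) -> dict[str, str]:
    """Parse plain KEY=VALUE lines, skipping blank lines and '#' comments."""
    settings: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        settings[key.strip()] = value.strip()
    return settings


def disabled_categories(settings: dict[str, str]) -> list[str]:
    """Return the comma-separated DISABLED_COMMAND_CATEGORIES entries as a list."""
    value = settings.get("DISABLED_COMMAND_CATEGORIES", "")
    return [item.strip() for item in value.split(",") if item.strip()]


if __name__ == "__main__":
    env_path = Path(".env")  # assumption: run from the Auto-GPT folder
    if env_path.exists():
        print(disabled_categories(read_env(env_path.read_text(encoding="utf-8"))))
```

For a .env containing only the line above, `disabled_categories` returns `['autogpt.commands.analyze_code']`.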

Trial 2: Better prompts to achieve the goals and better use of .env file settings for directing Auto-GPT

By disabling the “analyze_code” command category in the Auto-GPT .env file and using an improved prompt that included steps to browse the URL, we got Auto-GPT to summarise the blog and produce a translation. However, it fell short of fully reading the blog and providing a complete translation. Despite various prompt tweaks, such as “do a line by line translation of the blog,” Auto-GPT still produced its own version of the blog rather than a complete, line-by-line translation.

Learnings: This task also seemed too big a step for Auto-GPT, and we probably needed to break it down further. Auto-GPT also occasionally tried to use external tools like Google Translate or other online translation services, so we needed a prompt that forced it to use a GPT agent for the translation.

Trial 3: Split the task and attempt to download the contents of the blog as the first step

To simplify further, we divided the task into smaller parts. The first step was to extract the contents of the blog and save them to a file. Through scraping and printing, we got the blog contents stored in a file. However, the output still did not meet our expectations: Auto-GPT tried to interpret the blog and generated its own version of it. We also experimented with the Temperature setting mentioned in the .env file cheat sheet, but it did not yield significant improvements.

Learnings: Direct translation of the blog proved impossible. We decided to scale down our goal and help Auto-GPT by extracting the blog ourselves with a scraper.

Trial 4: Read the extracted file using Auto-GPT

To overcome the challenges with direct translation, we wrote our own web scraper to download the blog contents and save them to a separate file. We then had Auto-GPT read the file and perform the translation. However, Auto-GPT hit a token size issue when processing the large file content, the same problem we had seen earlier when it tried to read the entire blog post.
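Our scraper itself is not reproduced here. A minimal stand-in using only the Python standard library might look like the sketch below, assuming well-formed markup where the post body sits in closed `<p>`, heading, and `<li>` tags; a real WordPress page also has sidebars and menus, so a narrower selector would likely be needed. The class, function, and output file names are hypothetical.

```python
from html.parser import HTMLParser
from urllib.request import urlopen


class BlogTextExtractor(HTMLParser):
    """Collect text from paragraph, heading and list-item tags, one block per element."""

    TEXT_TAGS = {"p", "h1", "h2", "h3", "h4", "li"}

    def __init__(self) -> None:
        super().__init__()
        self.blocks: list[str] = []
        self._depth = 0  # how many open text tags we are currently inside
        self._parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.TEXT_TAGS:
            self._depth += 1

    def handle_endtag(self, tag):
        if tag in self.TEXT_TAGS and self._depth > 0:
            self._depth -= 1
            if self._depth == 0:
                # Collapse whitespace and keep the block only if it has text.
                text = " ".join("".join(self._parts).split())
                if text:
                    self.blocks.append(text)
                self._parts = []

    def handle_data(self, data):
        if self._depth > 0:  # text outside the tags above (e.g. scripts) is ignored
            self._parts.append(data)


def extract_blog_text(html: str) -> str:
    """Return the extracted text blocks separated by blank lines."""
    parser = BlogTextExtractor()
    parser.feed(html)
    parser.close()
    return "\n\n".join(parser.blocks)


def save_blog_text(url: str, out_path: str = "blog_full.txt") -> None:
    """Download a page and write its extracted text to out_path."""
    with urlopen(url) as response:
        html = response.read().decode("utf-8", errors="replace")
    with open(out_path, "w", encoding="utf-8") as fh:
        fh.write(extract_blog_text(html))
```

Call `save_blog_text` with the post’s URL; separating blocks with blank lines keeps paragraph boundaries available for a later splitting step.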

Learnings: The .env file has a setting called FAST_TOKEN_LIMIT=4000; however, this limit mainly reflects ChatGPT’s own constraints. The GPT-3.5 model has a maximum of 4096 tokens, shared between the prompt and the reply. Although GPT-4 raised this limit, it still did not meet the requirements of this particular task. So we updated the web scraper to download the blog and split it into multiple files of approximately 1000 words each. We also used the ‘start_agent’ command to create a new GPT agent, so that we could use the new agent’s key to translate the split files.
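The arithmetic behind a 1000-word chunk is simple. With the common rule of thumb of roughly 4 tokens for every 3 English words (an approximation; the real count depends on the tokenizer), 1000 words come to about 1,333 tokens, which leaves headroom under FAST_TOKEN_LIMIT=4000 for the prompt and the translated reply. A minimal sketch of the splitting step, assuming paragraphs are separated by blank lines and using hypothetical file names (`blog_full.txt` in, `blog_output_1.txt`, `blog_output_2.txt`, … out):

```python
from pathlib import Path

WORDS_PER_CHUNK = 1000  # the approximate chunk size we used
FAST_TOKEN_LIMIT = 4000  # value from our .env file


def estimate_tokens(text: str) -> int:
    """Rough estimate: about 4 tokens per 3 English words (rule of thumb, not a tokenizer)."""
    return len(text.split()) * 4 // 3


def chunk_paragraphs(text: str, max_words: int = WORDS_PER_CHUNK) -> list[str]:
    """Pack whole paragraphs into chunks of at most max_words words.

    A single paragraph longer than max_words becomes its own oversized chunk.
    """
    chunks: list[str] = []
    current: list[str] = []
    count = 0
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        words = len(para.split())
        if current and count + words > max_words:
            chunks.append("\n\n".join(current))
            current, count = [], 0
        current.append(para)
        count += words
    if current:
        chunks.append("\n\n".join(current))
    return chunks


def write_chunks(chunks: list[str], prefix: str = "blog_output", directory: str = ".") -> list[Path]:
    """Write each chunk to <prefix>_<n>.txt and return the paths."""
    paths = []
    for number, chunk in enumerate(chunks, start=1):
        path = Path(directory) / f"{prefix}_{number}.txt"
        path.write_text(chunk, encoding="utf-8")
        paths.append(path)
    return paths


if __name__ == "__main__":
    source = Path("blog_full.txt")  # assumed output of the scraping step
    if source.exists():
        chunks = chunk_paragraphs(source.read_text(encoding="utf-8"))
        for path, chunk in zip(write_chunks(chunks), chunks):
            print(path, estimate_tokens(chunk), "of", FAST_TOKEN_LIMIT, "tokens (est.)")
```

Packing whole paragraphs, rather than cutting at exactly 1000 words, avoids splitting a sentence across two files, which would otherwise be translated without its context.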

Trial 5: Read and translate smaller files

We set a goal instructing Auto-GPT to read all the files in the directory whose names start with a specific string and to translate each file individually. Auto-GPT tried to execute Python or Bash commands to list the files, but these commands failed to read the files as intended.

Learnings: EXECUTE_LOCAL_COMMANDS should be set to True in the .env file. We also needed a better prompt telling Auto-GPT which command to run when it sets its own goals. In the $/Auto-GPT/logs directory, you will find the activity.log and error.log files, which contain detailed information about your runs. These logs proved invaluable for analysing the issues with previous prompts and devising better ones.
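When a run goes wrong, counting recurring messages in those logs is quicker than reading them end to end. A small sketch, assuming the logs are plain text and the script runs from the Auto-GPT folder (a Docker volume mount may put them elsewhere); `count_phrases` is a hypothetical helper and the phrases are only examples, since the exact wording in your logs may differ:

```python
from collections import Counter
from pathlib import Path


def count_phrases(log_text: str, phrases: list[str]) -> Counter:
    """Count how many log lines contain each phrase (case-sensitive)."""
    counts: Counter = Counter()
    for line in log_text.splitlines():
        for phrase in phrases:
            if phrase in line:
                counts[phrase] += 1
    return counts


if __name__ == "__main__":
    # Assumption: logs/ sits in the current working directory.
    log_path = Path("logs") / "error.log"
    phrases = ["JSON object is invalid", "Traceback"]
    if log_path.exists():
        text = log_path.read_text(encoding="utf-8", errors="replace")
        for phrase, n in count_phrases(text, phrases).most_common():
            print(f"{n:5d}  {phrase}")
```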

Trial 6: Better prompts

After implementing the necessary corrections, we made the following attempt, setting the goals below for our Translator AI:

Name: Translator AI

Role: Translator of multiple text files from English to German using GPT agent

Goals:

– Use the `grep` command to search for files starting with ‘blog_output’

– Use the ‘start_agent’ command to create a new GPT agent

– Read each of those text and translate it to German using GPT agent

– Save the translated output of each file to a single file
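For comparison, the file-handling side of these four goals fits in a few lines of plain Python. This sketch matches file names with a glob pattern rather than `grep` (which searches file contents), and takes the translation step as a function argument, since the GPT call itself (an agent, in Auto-GPT’s case) is outside its scope. `translate_files`, `chunk_number`, and the output file name are hypothetical.

```python
import re
from pathlib import Path
from typing import Callable


def chunk_number(path: Path) -> int:
    """Trailing number in a name like blog_output_12.txt (0 if there is none)."""
    match = re.search(r"(\d+)$", path.stem)
    return int(match.group(1)) if match else 0


def translate_files(
    directory: str,
    translate: Callable[[str], str],
    prefix: str = "blog_output",
    out_name: str = "blog_translated_de.txt",
) -> Path:
    """Translate every <prefix>_*.txt file in order and save all results to one file."""
    folder = Path(directory)
    # Numeric sort keeps blog_output_2 ahead of blog_output_10.
    files = sorted(folder.glob(f"{prefix}_*.txt"), key=chunk_number)
    out_path = folder / out_name
    with out_path.open("w", encoding="utf-8") as out:
        for path in files:
            out.write(translate(path.read_text(encoding="utf-8")).strip() + "\n\n")
    return out_path
```

Usage would be `translate_files(".", my_translate)`, where `my_translate` is whatever function wraps your model call (hypothetical, not shown).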

The video below shows the run log for the goals we set and how Auto-GPT generated the plan and commands to achieve them.

This prompt was partly successful: Auto-GPT efficiently searched the file list, translated one file, and saved it to a new file. However, it struggled with the subsequent files, either generating unrelated and unnecessary action items or running into errors, as shown in the video log.

Stopping the trials

These were just some highlights of the difficulties a technical tester like me faced while trying to use the tool. Many more trials went into tweaking prompts and settings to get this far, and by this point my patience had started to wane. We also noticed inconsistent performance from Auto-GPT, tasks taking too long as it worked out its next steps, and issues reading files with a large token count. A few errors recurred as well, such as “The JSON object is invalid” and “The following AI output couldn’t be converted to a JSON”.

Our takeaway

Overall, we acknowledge the considerable potential of this tool. However, the goals and prompts it generated did not align with our expectations and required extensive direction on our part. In such cases, it is more convenient to use ChatGPT directly. Nonetheless, we maintain a positive outlook and intend to revisit this tool in the future for further exploration and experimentation.

Hire QA from Qxf2 Services

By hiring Qxf2, you gain access to a team of technical testers who not only excel in traditional testing methodologies but also have the expertise to tackle the unique challenges of modern software systems. We go beyond traditional test automation, specializing in testing microservices, data pipelines, and AI/ML-based applications. You can get in touch with us here.

I am a dedicated quality assurance professional with a true passion for ensuring product quality and driving efficient testing processes. Throughout my career, I have gained extensive expertise in various testing domains, showcasing my versatility in testing diverse applications such as CRM, Web, Mobile, Database, and Machine Learning-based applications. What sets me apart is my ability to develop robust test scripts, ensure comprehensive test coverage, and efficiently report defects. With experience in managing teams and leading testing-related activities, I foster collaboration and drive efficiency within projects. Proficient in tools like Selenium, Appium, Mechanize, Requests, Postman, Runscope, Gatling, Locust, Jenkins, CircleCI, Docker, and Grafana, I stay up-to-date with the latest advancements in the field to deliver exceptional software products. Outside of work, I find joy and inspiration in sports, maintaining a balanced lifestyle.