Until recently, our pytest failure summary showed only AssertionErrors when a test failed. Even though we printed detailed logs of the failed steps to the console and to a log file, we realized that a more useful failure summary would make investigating errors, and deciding what to do next, much faster.

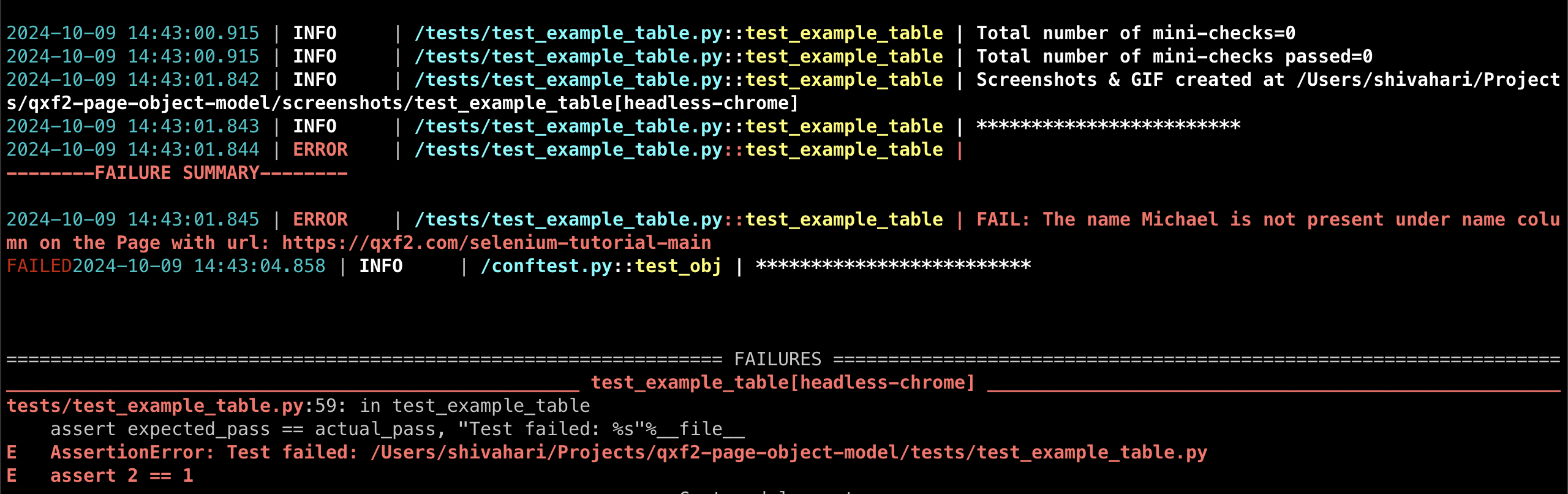

This is how the failure summary used to look:

When we ran only a few tests, noticing and tracking an AssertionError was easy. In a large test suite, tracing failures slowed us down: a bare AssertionError was often not very useful, and identifying which scenarios had failed in a test run became time-consuming.

In this post, we show you how to create a custom pytest plugin that prints failure summaries in a table. This is already implemented in Qxf2's popular test automation framework.

Disclaimer: The code snippets in this blog post are for demonstration purposes only. We recommend following better coding standards for real-world applications.

Improving our test creation strategy

We design our tests so that one failing validation or scenario does not stop test execution. The test continues through all its scenarios and, at the end, raises an AssertionError if the number of passed scenarios does not match the total number of validations. When our tests failed, we had to sift through the logs to identify and investigate errors. This approach worked, but it was time-consuming, and when a test run had multiple failures, identifying the failed scenarios was a burden. We felt there was room for improvement in our strategy, so we decided to enhance the test summary by clearly displaying the failed scenarios.
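The continue-then-assert pattern described above can be sketched in a few lines of plain Python. ResultTracker and its methods are hypothetical names used only for illustration; this is not Qxf2's framework code, just a minimal sketch of recording each validation, continuing on failure, and raising a single AssertionError at the end.

```python
class ResultTracker:
    """Hypothetical helper: records scenario results without stopping the test."""

    def __init__(self):
        self.total = 0
        self.passed = 0
        self.failed_scenarios = []

    def check(self, flag, positive, negative):
        # Record every validation, but never raise here, so later scenarios still run
        self.total += 1
        if flag:
            self.passed += 1
            print(f"PASS: {positive}")
        else:
            print(f"FAIL: {negative}")
            self.failed_scenarios.append(negative)

    def assert_all_passed(self):
        # Called once at the end of the test: one AssertionError for the whole run
        assert self.total == self.passed, (
            f"{self.total - self.passed} of {self.total} validations failed"
        )


if __name__ == "__main__":
    tracker = ResultTracker()
    tracker.check(True, "Login is successful", "Login is not successful")
    tracker.check(False, "Payment is successful", "Payment is not successful")
    tracker.check(True, "Logout is successful", "Logout is not successful")
    try:
        tracker.assert_all_passed()
    except AssertionError as error:
        print(f"AssertionError: {error}")
```

The final AssertionError only says how many validations failed; the individual failure messages are what end up in failed_scenarios, which is exactly the information a better summary needs.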

Qxf2 offers many unique testing services to startups. In one of them, we lay the foundation for good QA by implementing our framework along with a basic test suite. Then, we show developers how to add tests and maintain them. For this group of users (i.e., developers), the test summary had to be better: developers were not going to comb through logs to find the first instance of a failure.

We brainstormed possible solutions and implemented the following:

1. Adding useful messages to assert statements: an assert statement accepts an optional message, which is shown in the AssertionError when the assertion fails

2. Better failure messages: starting failure messages with FAIL: and colouring them red in the logs to make them easier to search

3. Creating better failure summaries: printing errors in a table
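Here is a minimal sketch of the first two ideas using only the standard library. The FailHighlightFormatter class, the get_logger helper, and verify_payment are hypothetical names, and colouring via ANSI escape codes assumes a terminal that supports them; our framework's own logging setup may differ.

```python
import logging

RED = "\033[91m"   # ANSI escape code for bright red text
RESET = "\033[0m"  # ANSI escape code to reset colours


class FailHighlightFormatter(logging.Formatter):
    """Hypothetical formatter: prefixes error records with FAIL: and colours them red."""

    def format(self, record):
        message = super().format(record)
        if record.levelno >= logging.ERROR:
            message = f"{RED}FAIL: {message}{RESET}"
        return message


def get_logger(name="demo"):
    logger = logging.getLogger(name)
    if not logger.handlers:  # avoid adding duplicate handlers on repeated calls
        handler = logging.StreamHandler()
        handler.setFormatter(FailHighlightFormatter("%(message)s"))
        logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger


def verify_payment(result_flag):
    # Idea 1: the assert message explains the failure inside the AssertionError itself
    assert result_flag, "Payment is not successful"


if __name__ == "__main__":
    log = get_logger()
    log.info("Payment page loaded")
    log.error("Payment is not successful")  # shown as red "FAIL: ..." on ANSI terminals
    try:
        verify_payment(False)
    except AssertionError as error:
        print(f"AssertionError: {error}")
```

Because every failure line starts with the same FAIL: prefix, a plain text search of the log file finds all of them at once.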

Adding useful messages to assert statements and improving failure messages were straightforward. For better failure summaries, we decided to leverage pytest's plugin system and develop a plugin that prints the failure summary using prettytable.

Custom pytest plugin for better failure summary

pytest offers numerous benefits, and one of its standout features is how easily it integrates with existing tools, or lets you create custom ones when necessary. We were not entirely satisfied with the available tools for failure summaries, so we decided to develop our own solution.

We followed these two steps to implement our custom solution:

1. Find out how the failure summary is printed and replace it with a custom approach

2. Integrate the fix with pytest

1. Find out how the failure summary works and replace it with a custom approach

We identified the Python class responsible for printing failure summaries, TerminalReporter, and subclassed it, overriding the summary_failures method so that it uses prettytable to print the failed scenarios.

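Note: We subclassed TerminalReporter even though it is decorated with final from the typing module. The final decorator is only a hint for static type checkers and is not enforced at runtime, so the subclass works. We figured that inheriting from this class would keep our code changes minimal and let the other reporter features work as-is.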
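A quick, self-contained check of this behaviour, using toy classes rather than pytest's real TerminalReporter: Python creates the subclass and calls the override without complaint, while a static type checker such as mypy would flag the inheritance.

```python
from typing import final


@final
class BaseReporter:
    """Toy stand-in for a class marked @final (not pytest's real TerminalReporter)."""

    def summary_failures(self):
        return "default summary"


# A static type checker would report an error on this class definition,
# but the Python interpreter creates the subclass without complaint.
class CustomReporter(BaseReporter):
    def summary_failures(self):
        return "custom summary"


if __name__ == "__main__":
    print(CustomReporter().summary_failures())  # custom summary
```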

This is what our CustomTerminalReporter class looks like:

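```python
# TerminalReporter lives in pytest's internal _pytest.terminal module
from _pytest.terminal import TerminalReporter

# FailureSummaryTable is our prettytable-based helper; its import is not shown here


class CustomTerminalReporter(TerminalReporter):
    "A custom pytest TerminalReporter plugin"

    def __init__(self, config):
        # Maps each test name to the list of its failed scenarios
        self.failed_scenarios = {}
        super().__init__(config)

    # Overwrite the summary_failures method to print the prettytable summary
    def summary_failures(self):
        if self.failed_scenarios:
            table = FailureSummaryTable()
            # Print header
            self.write_sep(sep="=", title="Failure Summary", red=True)
            # Print table
            table.print_table(self.failed_scenarios)
```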

The new class initializes a failed_scenarios attribute. If any failed scenarios were collected, its summary_failures method creates an instance of FailureSummaryTable (our helper class that uses prettytable), prints a "Failure Summary" header, and calls table.print_table to print the table.

2. Integrate the fix with pytest

To register the new plugin, we added the following snippet to our pytest_configure hook:

```python
def pytest_configure(config):
    # ... rest of our existing pytest_configure hook ...
    if config.pluginmanager.has_plugin("terminalreporter"):
        old_reporter = config.pluginmanager.get_plugin("terminalreporter")
        # Unregister the old terminalreporter plugin
        config.pluginmanager.unregister(old_reporter, "terminalreporter")
        reporter = CustomTerminalReporter(config)
        # Register the custom terminalreporter plugin
        config.pluginmanager.register(reporter, "terminalreporter")
```

Now that we have registered the new plugin, pytest will use it to print failure summaries.

Changes to our framework

After creating the custom plugin, we modified our framework to:

1. Collect the failed scenarios

2. Make the reporter plugin available as a fixture

3. Share the collected failed scenarios with the reporter plugin fixture

1. Collect the failed scenarios

Here is a typical scenario from one of our tests:

```python
# Verify if payment was successful
result_flag = test_mobile_obj.verify_payment_success()
test_mobile_obj.log_result(result_flag,
                           positive="Payment is successful",
                           negative="Payment is not successful")
```

The test_mobile_obj.verify_payment_success() method returns a boolean that indicates whether the payment step succeeded. The log_result method then uses that boolean to log the positive message on success and the negative message on failure. We modified log_result to also collect every failure message:

```python
def log_result(self, flag, positive, negative, level='info'):
    "Write out the result of the test"
    if flag is True:
        self.success(positive, level="success")
    else:
        self.failure(negative, level="error")
        # Collect the failed scenarios for prettytable summary
        self.failed_scenarios.append(negative)
```

2. Make the reporter plugin available as a fixture

We created a fixture, testreporter, to make the newly registered terminalreporter plugin available to our test runner fixture:

```python
import pytest


@pytest.fixture
def testreporter(request):
    "pytest summary reporter"
    return request.config.pluginmanager.get_plugin("terminalreporter")
```

3. Share the collected failed scenarios with the reporter plugin fixture

We then modified our test runner fixture, test_obj, to pass the list of failures to the failed_scenarios attribute of the CustomTerminalReporter object:

```python
# Collect the failed scenarios; these scenarios will be printed as a table
# by pytest's custom testreporter
if test_obj.failed_scenarios:
    testreporter.failed_scenarios[testname] = test_obj.failed_scenarios
```
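A minimal sketch of how the snippet above could sit inside a yield fixture, assuming the testreporter fixture shown earlier lives in the same conftest.py. DemoTestObject and share_failed_scenarios are hypothetical stand-ins for the framework's test runner object and teardown logic, and request.node.name is used here as the test name.

```python
import pytest


class DemoTestObject:
    """Hypothetical stand-in for the framework's test runner object."""

    def __init__(self):
        # The framework's log_result appends each failure message here
        self.failed_scenarios = []


def share_failed_scenarios(reporter, testname, failed_scenarios):
    """Copy a test's failed scenarios into the reporter, keyed by test name."""
    if failed_scenarios:
        reporter.failed_scenarios[testname] = list(failed_scenarios)


@pytest.fixture
def test_obj(request, testreporter):
    obj = DemoTestObject()
    yield obj
    # Teardown: runs after the test body, so every failure has been collected
    share_failed_scenarios(testreporter, request.node.name, obj.failed_scenarios)
```

Fixture teardown finishes before pytest prints its terminal summary, so by the time summary_failures runs, the reporter already holds every test's failures.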

With these changes, when a test fails, the plugin prints the contents of its failed_scenarios attribute as a table.

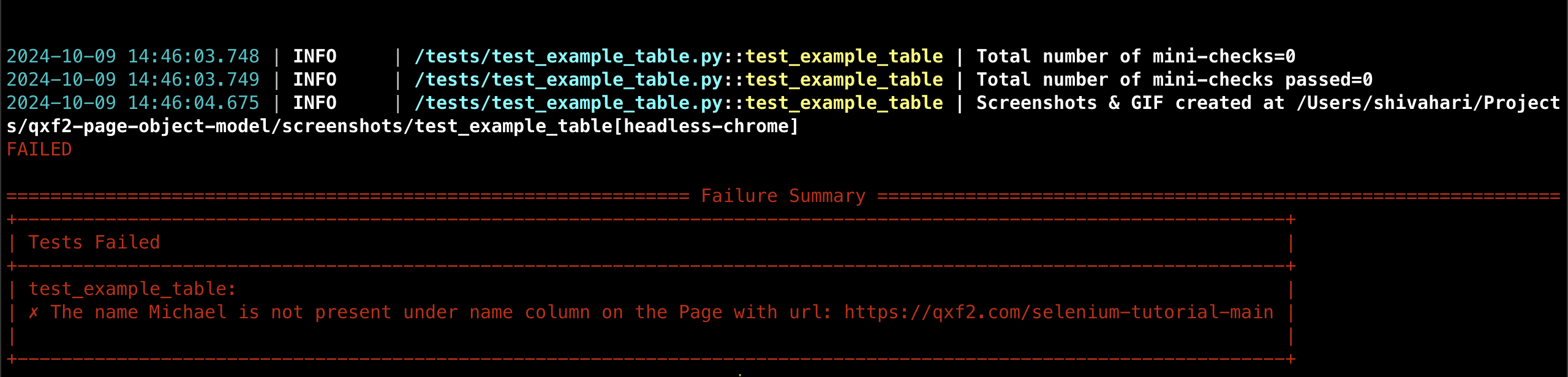

This is how our failure summary now looks:

Mapping test names to their failed scenarios makes it easier to understand test automation issues and fix them faster. We can now spend our time better by jumping straight to the crux of an error instead of searching through logs for failures.

Hire testers from Qxf2

At Qxf2, we make it a habit to regularly assess our processes and tools to identify gaps. We then dedicate resources to addressing these gaps and actively share our findings with the testing community. This approach keeps us prepared for issues we might face at clients. If you are looking for expert testers who can recommend cutting-edge tools and strategies to enhance your emerging product, contact Qxf2.

My expertise lies in engineering high-quality software. I began my career as a manual tester at Cognizant Technology Solutions, where I worked on a healthcare project. However, due to personal reasons, I eventually left CTS and tried my hand at freelancing as a trainer. During this time, I mentored aspiring engineers on employability skills. As a hobby, I enjoyed exploring various applications and always sought out testing jobs that offered a good balance of exploratory, scripted, and automated testing.

In 2015, I joined Qxf2 and was introduced to Python, my first programming language. Over the years, I have also had the opportunity to learn other languages like JavaScript and Shell scripting (if it can be called a language at all). Despite this exposure, Python remains my favorite language due to its simplicity and its extensive library support.

Lately, I have been exploring machine learning, JavaScript testing frameworks, and mobile automation. I’m focused on gaining hands-on experience in these areas and sharing what I learn to support and contribute to the testing community.

In my free time, I like to watch football (I support Arsenal Football Club), play football myself, and read books.