This is the second post in a series outlining the technical and organizational work we are undertaking at Qxf2. It explains why we are doing something different from a lot of other testing firms.

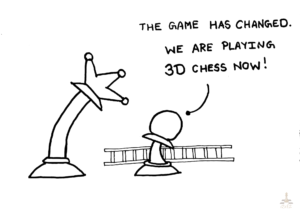

In this post, we look at three big challenges we think testers will face when tackling emerging areas like IoT, autonomous cars, highly parallel systems, AI, machine learning and data analytics. As you read through them, ask whether your current testing team knows how to handle them. If it cannot handle these challenges today, you will understand why we feel the need to change our approach and focus.

Our expectation

We are not experts. In fact, we are sure that we are late to the game. We think many testers have already faced these problems, and we hope some of them have found solutions. We are writing in the hope that they will share their experience with us.

Challenges for testers in emerging areas

1. There will be a large number of input interfaces in products

Products will be taking input from sensors, not just from the keyboard, mouse and touch screen. A good example is the Fitbit, which has motion sensors, heart rate sensors, etc. A growing number of input interfaces means that some of our testing will rely on having the right instruments to mimic the different inputs.

Our takeaway: As testers we need to get comfortable with hardware, micro-controllers and electronics.

The future is already here: We know Microsoft was using robotic arms to test the Xbox way back in 2004! But the only article about it we could find is from 2013.
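As a small illustration of what "mimicking inputs" can look like before any physical rig exists, here is a minimal Python sketch that generates a synthetic accelerometer signal with a known number of steps and feeds it to a step counter. Both `synthetic_accelerometer` and `count_steps` are hypothetical: they are not Fitbit's (or anyone's) firmware, and the signal shape, thresholds and noise level are assumptions chosen for the example. A synthetic signal complements, rather than replaces, testing with real sensors and instruments.

```python
import math
import random

def synthetic_accelerometer(steps, samples_per_step=50, noise=0.02, seed=42):
    """Return a fake acceleration-magnitude signal (in g) with one peak per step.

    Hypothetical stand-in for a real motion sensor: a 1 g gravity baseline,
    a 0.5 g sinusoidal swing per step, and a little Gaussian noise.
    """
    rng = random.Random(seed)  # seeded so every test run sees the same signal
    signal = []
    for i in range(steps * samples_per_step):
        phase = 2 * math.pi * i / samples_per_step
        signal.append(1.0 + 0.5 * math.sin(phase) + rng.gauss(0, noise))
    return signal

def count_steps(signal, high=1.3, low=1.1):
    """Hypothetical device logic under test: count peaks with hysteresis.

    A step is counted when the signal rises above `high`; the counter only
    re-arms after the signal falls back below `low`, so noise near the
    threshold is not double-counted.
    """
    steps = 0
    armed = True
    for value in signal:
        if armed and value > high:
            steps += 1
            armed = False
        elif not armed and value < low:
            armed = True
    return steps

if __name__ == "__main__":
    walk = synthetic_accelerometer(steps=20)
    print("steps counted:", count_steps(walk))
    print("steps while still:", count_steps([1.0] * 500))
```

Because the generator knows how many steps it produced, the expected answer is built into the input. The same idea extends to nastier inputs, such as dropped samples or a saturated sensor.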

2. The binary concept of pass/fail will be less useful

Products are going to rely on AI. They can (and will) end up giving you different answers to the same question! Also, a change to a learning algorithm will usually improve its accuracy in some areas while making it worse in others. As an example, think of any change to Google’s search algorithms. Search accuracy could improve for a large number of search keywords but actually get worse for a subset of them. So just saying the new search algorithm ‘passed’ or ‘failed’ is meaningless. We will need better mathematical indicators to express improvement and regression.

Our takeaway: As testers, we need to get comfortable with mathematics and statistics.

The future is already here: Facebook has already faced issues like this. This is one sad example.
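To make "better mathematical indicators" a little more concrete, here is a minimal Python sketch of comparing an old and a new algorithm per query instead of with a single pass/fail verdict. It is not how Google or any search team actually measures quality. It assumes each test query is tagged with a segment (the names `common` and `rare` are made up) and has a boolean "correct" verdict for both versions; the data is a tiny invented sample. Besides overall and per-segment accuracy, it counts the queries the change fixed and broke and runs an exact McNemar test on those two counts, a standard paired test for exactly this kind of before/after comparison.

```python
import math
from collections import defaultdict

# Hypothetical per-query results: (segment, old_model_correct, new_model_correct).
SAMPLE_RESULTS = (
    [("common", False, True)] * 3
    + [("common", True, True)] * 6
    + [("common", False, False)] * 1
    + [("rare", True, False)] * 2
    + [("rare", True, True)] * 1
    + [("rare", False, False)] * 1
)

def overall_accuracy(results):
    """Return (old_accuracy, new_accuracy) over all queries."""
    n = len(results)
    old_hits = sum(old_ok for _, old_ok, _ in results)
    new_hits = sum(new_ok for _, _, new_ok in results)
    return old_hits / n, new_hits / n

def accuracy_by_segment(results):
    """Map each segment to (old_accuracy, new_accuracy, change)."""
    counts = defaultdict(lambda: [0, 0, 0])  # segment -> [queries, old_hits, new_hits]
    for segment, old_ok, new_ok in results:
        c = counts[segment]
        c[0] += 1
        c[1] += old_ok
        c[2] += new_ok
    return {
        seg: (old / n, new / n, (new - old) / n)
        for seg, (n, old, new) in counts.items()
    }

def fixed_and_broken(results):
    """Count queries the change fixed (wrong -> right) and broke (right -> wrong)."""
    fixed = sum(1 for _, old_ok, new_ok in results if new_ok and not old_ok)
    broken = sum(1 for _, old_ok, new_ok in results if old_ok and not new_ok)
    return fixed, broken

def mcnemar_exact_p(fixed, broken):
    """Two-sided exact McNemar p-value computed from the discordant counts."""
    n = fixed + broken
    if n == 0:
        return 1.0
    k = min(fixed, broken)
    tail = sum(math.comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)

if __name__ == "__main__":
    old, new = overall_accuracy(SAMPLE_RESULTS)
    print(f"overall: {old:.2f} -> {new:.2f}")
    for seg, (o, nw, delta) in accuracy_by_segment(SAMPLE_RESULTS).items():
        print(f"{seg}: {o:.2f} -> {nw:.2f} ({delta:+.2f})")
    fixed, broken = fixed_and_broken(SAMPLE_RESULTS)
    print(f"fixed={fixed} broken={broken} p={mcnemar_exact_p(fixed, broken):.2f}")
```

On this toy sample, overall accuracy goes up, the `common` segment improves, the `rare` segment loses half its accuracy, and the McNemar p-value of 1.0 says the net gain is indistinguishable from noise at this sample size. A single pass/fail label would hide all three facts.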

3. The QA environment will not mimic Production

It is getting easier and easier to string together different pieces of software and create a useful product. Heck, I created this search engine in less than a day. Each component of the product will likely be hosted on a different system and maintained/updated by someone else. So the cost of creating a QA environment that mimics production is going to be exorbitant.

Our takeaway: As testers, we need to learn some systems modeling and DevOps.

The future is already here: Most big technology organizations?

Some other guesses about the future

If our theory about where we are headed is right, all other roles in product development will evolve too. If you are lucky or good (or both), you have probably already lived through some of the changes listed below.

1. Cultural clashes between the hardware and software arms of the company. Hardware development does not move at the speed of software development cycles, and this mismatch is going to cause significant tension within companies. We think testing could help by providing quick and dirty tests to speed up the hardware prototyping phase.

2. The cost of maintaining a QA environment will be significant. As the cost of QA goes up, it will make economic sense for the organization to spend development time on countering bloated QA environments. So architects must think about scaling testing too! A significant chunk of development time will go into building backdoors for good testing (for testing, not necessarily for testers).

3. Some bug reports will be more visual and will look like short conference papers. As system complexity goes up, it will not be easy to explain a bug without a lot of data to back it up. You will see testers spending weeks collecting the information needed to support their bug reports. Totally bragging: I have filed bugs like that. I once used queuing theory to prove that the discrete event simulator I was testing used too short a simulation time: the values it reported did not match the values expected at steady state. To prove the bug, I ended up writing what looked like a conference paper, with formulae, graphs, error charts, etc.

4. Job descriptions for testers will want expertise in mathematics, hardware and/or DevOps. Given the scarcity of such people, we think the current market situation will force developers to become better testers. Put another way, the line dividing testers and developers is going to blur.

5. There will be very few pure ‘automation testers’ and pure ‘manual testers’. Most of our work will not be automated, but we will be using a lot of tools. Tools will help us see further, just as they do today. Given that most tools today cannot handle the testing challenges of the future, you will see an industry develop around testing tools.

6. Testing teams will purchase data. Good structured data is going to be critical in testing products. We think organizations will end up paying other companies to create and structure data. We also think testers will have access to data analysts who exist solely to help the testing team.

7. There will be a large market for simulation tools. We have already seen this in RF and electronics. We imagine every MATLAB module becoming an independent product in its own right.

8. We will see the need for re-training a large number of experienced testing professionals. You will see training emerge (or re-emerge?) as a thriving business simply because it will make economic sense. Companies will find it worth their time and money (we hope!) to retrain their existing talent.

9. Testers will be reading (and probably co-authoring) highly technical journal papers. Yeah! There are going to be a lot of new ideas, and more overlap between what academia produces and what is needed to test complex algorithms and products. Totally bragging: in the past, as part of my work, I’ve read papers on G/G/n queues, worked on analytical methods to solve heterogeneous fork/join queues (M/M/1 only), read papers on TCP, etc.
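The queuing-theory bug in item 3 can be illustrated with a hypothetical sketch. This is not the simulator I was testing; it is a toy M/M/1 queue (one FIFO server, exponential interarrival and service times) whose waiting times are computed with the Lindley recursion, a standard shortcut that avoids writing a full event scheduler. For M/M/1, the steady-state mean time a customer spends in the system has the closed form W = 1 / (μ − λ), so a simulator's output can be checked against it. Because the run starts with an empty queue, a run that is too short under heavy load will typically report values below steady state, which is the kind of discrepancy the bug report had to demonstrate.

```python
import random

def mm1_mean_time_in_system(arrival_rate, service_rate, customers, seed=1):
    """Simulate an M/M/1 FIFO queue that starts empty, via the Lindley recursion.

    Returns the average time in system (queueing delay + service) over the
    first `customers` customers.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    wait = 0.0   # queueing delay of the current customer (first one finds it empty)
    total = 0.0
    for _ in range(customers):
        service = rng.expovariate(service_rate)
        total += wait + service
        interarrival = rng.expovariate(arrival_rate)
        # Lindley recursion: the next customer waits for whatever work is left.
        wait = max(0.0, wait + service - interarrival)
    return total / customers

def mm1_theoretical_time_in_system(arrival_rate, service_rate):
    """Steady-state mean time in system for M/M/1: W = 1 / (mu - lambda)."""
    if arrival_rate >= service_rate:
        raise ValueError("queue is unstable when arrival_rate >= service_rate")
    return 1.0 / (service_rate - arrival_rate)

if __name__ == "__main__":
    lam, mu = 0.9, 1.0  # heavy load (utilization 0.9), where warm-up matters most
    print("theory:", mm1_theoretical_time_in_system(lam, mu))
    for n in (100, 1_000, 100_000):
        print(n, "customers:", round(mm1_mean_time_in_system(lam, mu, n), 2))
```

Comparing the short and long runs against the closed-form value is a quick way to see whether a simulation has been run long enough to reach steady state. A real study would also discard a warm-up period and report confidence intervals.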

We will be exploring the three big challenges we have identified in this post. To learn more, stay tuned for our next few posts on our R&D efforts.

Related posts

- Where is Qxf2 headed?

- The need for change (at Qxf2)

- An introduction to R&D at Qxf2

- An introduction to training at Qxf2

- An introduction to hiring and onboarding at Qxf2

- Experimenting with team structures at Qxf2

I want to find out what conditions produce remarkable software. A few years ago, I chose to work as the first professional tester at a startup. I successfully won credibility for testers and established a world-class team. I have led the testing for early versions of multiple products. Today, I run Qxf2 Services. Qxf2 provides software testing services for startups. If you are interested in what Qxf2 offers or simply want to talk about testing, you can contact me at: [email protected]. I like testing, math, chess and dogs.