I used Dask and Coiled to make some Python code I had written run in parallel. In this blog, I have described the approach used for implementing parallelism using Dask running on a coiled cluster.

I had developed a Python program to generate control heatmap images for chess games. A control heatmap (a term I made up!) shows how many pieces of a certain colour attack a given square on the chessboard. As you can imagine calculating this data for 64 squares on each move of every game and then generating gifs for each game is a computationally intensive task. Executing the program on my local setup (I tried!) would take an unreasonably long time. So, I switched to using Dask and then since Qxf2 had stumbled upon Coiled recently, I decided to use them as well.

What is Dask and Coiled?

Dask is a library for distributed computing in Python. It is a dynamic task scheduler and can process large sets of data in parallel by breaking it down and distributing it between multiple machines. Dask Collections create task graphs that define how to perform the computation in parallel. Coiled is a deployment-as-a-service library that makes it easy to scale dask on Cloud. It provides hosted dask clusters where the actual computation is performed. At the time of this writing, Coiled is in the Beta version and is free to use.

About the project

Heatmap is a way of representing data in two-dimensional form. For this project, I wanted to show how much control each color has on a square for each ply (a ply is ‘half a move’ in chess) in the chessboard. Chess games need to be provided as input in form of PGN files. An animated GIF is generated per game which captures the heatmap, with each ply(in that game) as a frame in the GIF. Following is an example:

The above heatmap was generated using the game Donald Byrne vs. Robert James Fischer, 1956. This game is known as the ‘Game of the Century’ and was played by a 13-year old Fischer.

Breakdown

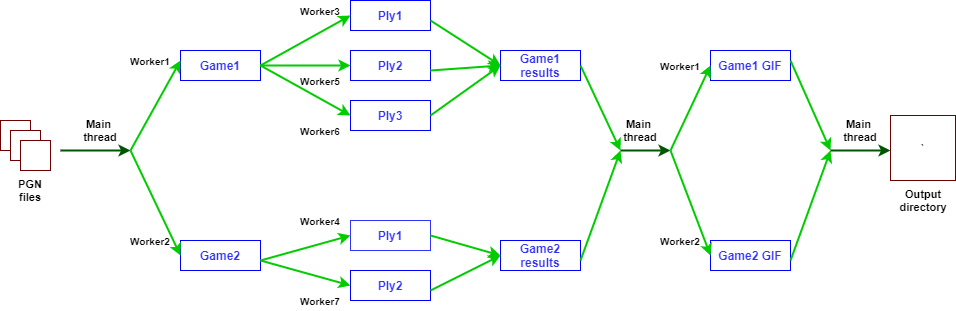

The flow of the project looks something like this:

-

1. Parse the PGN files and get the game objects

2. Divide games into batches

3. Process the games in parallel

4. Submit tasks from worker to handle plies

5. Calculate control of each square

6. Create animated gif

There is too much code to fit within a blog post. I have provided relevant snippets here. For the rest, you can follow along by cloning this repo Qxf2 Chess Heatmap Package.

1. Parse the PGN files and get the game objects

The Portable Game Notation (PGN) represents chess games. I used python-chess library which has in-built methods for reading games from PGN, getting number of attackers etc. The PGN files need to be present in the resources/input directory. Each PGN can contain multiple games which are parsed and a list of Game objects is created.

Refer get_games_from_pgn_files() method from chess_util.py file in the repo.

2. Divide the games into batches

To optimize the processing of a large number of games, I divided them into batches (the number of games per batch is configurable). Batching is required to avoid overloading the scheduler with a large number of tasks. See Dask Best practices for more details.

batch = [] for game in game_master_list: batch.append(game) if index % self.config_values["game_batch_size"] == 0: [more code] |

game_master_list contains the list of all game objects. game_batch_size is configurable and can be passed through config file.

Refer analyse_games_in_cluster() method from chess_dask_cluster.py file in the repo.

3. Process the games in parallel

I divided the game into smaller tasks – one task for each ply. For this, I start with an empty board, apply a ply and create a sub task. Creating sub-tasks is done in parallel for each game, this is the first level of parallelism. I used Dask Futures, one of the Dask collections for performing the parallel computations. The list of game objects is sent to the scheduler which assigns a worker for each game. I used client.map() to submit these tasks and collect the results by calling client.gather() method.

game_futures = self.client.map(ChessDaskCluster.analyse_game_in_worker, batch) all_game_results.extend(self.client.gather(game_futures)) |

all_game_results will have the results(calculated control power) returned from the futures for all the games.

Refer analyse_games_in_cluster() method from chess_dask_cluster.py file in the repo.

4. Submit tasks from worker to handle plies

The sub-tasks that are created in the above step are again sent to the scheduler for getting processed in parallel – this is the second level of parallelism. To do that from a worker, I used get_client which connects to the same scheduler to which the worker is connected. And apply the process (similar to Dask Client) for submitting the tasks.

worker_client = get_client() task_futures = worker_client.map(ChessUtil.find_control_for_square, tasks_for_game["game_tasks"]) game_data = None try: wait(task_futures, timeout) control_list_for_game = worker_client.gather(task_futures) [more code] except TimeoutError: print("Game timed out: " + str(game_no)) |

Here, each game is processed by a worker. The worker submits tasks to calculate heatmap frame for each ply in the game. The results are gathered into control_list_for_game.

Refer analyse_game_in_worker() method from chess_dask_cluster.py file in the repo.

5. Calculate control of each square for all the squares in a ply

My algorithm to calculate how many pieces could control (or defend) a particular square is:

-

-Use board.attackers method from chess library and get the list of attackers

-Loop through the attackers and remove those from the board

-Get the list of attackers again till the list of attackers is empty

-Also handled a couple of corner cases where King is an attacker. In that case, need to remove him at the end (since a board without a King is considered to be in an invalid state)

The above is done for all the squares in a ply, for each player side(color). With this, the heatmap for a single ply in a game is completed. The same process is done in parallel for all the plies in the game. All the games themselves are also processed in parallel.

Refer find_control_for_square_for_color() method from chess_util.py file present in the repo.

6. Create animated gif

After getting the heatmap data for each ply, to represent it graphically I used Seaborn Python library. This will create an animated GIF for each game. I implemented parallelism here too. As and when the heatmap data for a game is completed, a task is created with this data to generate the GIF. This is done using as_completed which returns the future which got completed. I used this because it will not be necessary to wait till all the games are completed to start GIF creation.

image_futures = self.client.map(ChessImageGenerator.create_gif, all_game_results) for image_future in as_completed(image_futures): image_data = image_future.result() |

Refer analyse_games_in_cluster() method from chess_dask_cluster.py file in the repo.

That is, in a nutshell, the working of this project. I made several changes/optimizations to the approach used from the time I started working on it, and there is room for more. As and when I see a possibility, will try to incorporate more. Your suggestions on this are welcome too!

Detailed instructions on how to run this project is available at GitHub: https://github.com/qxf2/chess-heatmap

My thoughts after trying Coiled

Using a dask cluster on Coiled made the handling of tasks efficient and faster. The main advantages of using Coiled were:

-

* Spin up a cluster with desired config pretty fast

* Easily create software environment with required dependencies

* Scale out large computations quickly

* Convenient to switch between Dask(local cluster) and Coiled(Cloud)

* The dask dashboard is easy to use and observe the progress of tasks and other stats

It was interesting to explore Coiled. When I ran the project for 1000 games, it took approximately 2 minutes to process all the 1000 games. The gifs took longer (~20 minutes) but I have not tried to optimize that part yet. On my local machine, the same program took 200 mins for around 250 games at which point I was forced to stop it.

Dask is very useful for scalability in Python data science. And by using Coiled the accessibility to computing is increased for everyone. Try it out while it’s still in Beta!

References:

Dask Futures – https://docs.dask.org/en/latest/futures.html

Getting started with Coiled – https://docs.coiled.io/user_guide/getting_started.html

python-chess – https://python-chess.readthedocs.io/en/latest/

I have been in the IT industry from 9 years. I worked as a curriculum validation engineer at Oracle for the past 5 years validating various courses on products developed by them. Before Oracle, I worked at TCS as a Manual tester. I like testing – its diverse, challenging, and satisfying in the sense that we can help improve the quality of software and provide better user experience. I also wanted to try my hand at writing and got an opportunity at Qxf2 as a Content Writer before transitioning to a full time QA Engineer role. I love doing DIY crafts, reading books and spending time with my daughter.