We recently discovered the concept of Natural Language Generation. It felt like a nice challenge for testing. We researched around and found that there were not many guides on how to test software used for Natural Language Generation. We decided to take advantage of Cunningham’s Law and post our thoughts on how to test natural language generators.

NOTE: To make this post useful, we developed a simple Python Flask application that performed rudimentary natural language generation.

Why this post?

One of the biggest challenges in a Natural Language Generation(NLG) system is testing (QA). NLG is the mapping from non-Natural language representations of information to Natural Language strings that express the information. Good evaluation is the key to the progress of NLG systems. But how do we make sure that an NLG system is robust and accurate? Is the generated text accurate, readable, and useful? How to measure the quality of the generated texts? These questions need to be taken care by QA. This post gives an overview on testing of a simple NLG application (which turns pieces of information into human-readable language) from a quality assurance and user perspective. We designed a lot of synthetic data for testing this system and ran a bunch of test cases and report what test cases the system got right. This report allows us to draw more general conclusions like testing NLG is no less than testing any web application – you still need to think of the end user. Rather than using the comparable techniques to perform comparable tasks we just used sets of paired input and output. Our evaluation is task-specific and we customized it to suit our goals.

QA challenges for NLG evaluation

Testing NLG systems is not an easy process since not many special tools and techniques for NLG testing are developed. Since NLG spans a wide range of sub-problems such as aggregation, lexicalization, realization etc., testing all the data variations and test behaviors at different levels and finding bugs is a challenge. How we design our unit and system test to check the output variations at each level is vital. Testing an NLG application can be similar to testing any other web application. The evaluation criteria are general to all software systems and of course, also apply to evaluating NLG systems. It can be a direct evaluation of outputs or indirect evaluation by comparison against a set of reference texts. Outputs have to be natural, fluent and communicate relevant information. We can add unit tests at module level or functional tests to test the functionality.

General NLG-specific evaluation techniques should assess the three mentioned criteria

(i) language quality

(ii) appropriateness of content

(iii) task-effectiveness, or how well the generated texts achieve their communicative purpose.

The ability to draw conclusions from observing trends in data is a key skill. We agree that NLG is too diverse and there are no mutually accepted evaluation metrics. In this article, we made an attempt and focused only on human testing with no comparative reference texts. We listed out multiple scenarios and test cases that we ran on a simple NLG application with a simple input and output.

About our NLG Application under test

We have created a sample web application called “Hospitals near me” which take a 5-digit zip code as input and lets you find out highest overall rated hospitals within a 20-mile radius of the given zip code. This currently accepts zip codes from the USA only. This NLG application is based on US hospital data and it generates a verbal summary based on the changing data along with a tabular data about hospitals within a certain range. The system is designed to do the following:

a) Determine opening lines in the summary.

b) Return a verbal summary for the hospitals provided.

c) If there are more than two choices, it will also show tabular data.

d) If the highest rated option is not the closest, it will mention that fact.

e) Make a lexical choice (Highest, high, etc.) regarding the overall score.

In the below sections we will be discussing testing each of these components and verify the results.

Understanding the Test Data

This sample test data HospInfo.csv is taken from Kaggle for our internal practice project. This data file contains general information about all US hospitals that have been registered with Medicare, including their addresses, type of hospital, and ownership structure. It also contains information about the quality of each hospital, in the form of an overall rating (1-5, where 5 is the best possible rating & 1 is the worst). You can find the code and the data on our Github Repo. The README.md has setup instructions and some examples.

Testing NLG application with different Use cases

Data summaries are not static, and there’s a constant need to update and improve them. There are many possible scenarios. As a QA, we need to test with many data points, for example, we might want to add new data points or test scenarios when there is a change in the angle of the story. Developers have to design the system in such a way that testers testing any enhancements or changes can simply edit a config file and use synthetic data as input and get an output. We asked our developer to change the code in such a way that we could simply edit a config file and use synthetic data. Identifying, analyzing and testing these changes requires a significant time and test data quality plays a significant role. As testers and data designers, we have just started experiments testing NLG applications. The main goal of our experiment is to manually verify the quality of text summary generated for each task.

Instead of processing the entire data set we are choosing the interesting elements from the data set to talk about. We have created a bunch of CSVs and introduced different artificial errors in each file like missing data, giving out of range values, giving long values, verifying different ratings etc., for testing different scenarios and evaluate the system and its behavior.(Note:- All the Issues raised during testing this sample NLG application can be found here)

1. Evaluating for Missing Data

Handling missing data is important as many machine learning algorithms do not support data with missing values. NLG systems too should check for missing values and should inform if calculations are performed on data with missing values. Summary based on incomplete data can produce low quality or inaccurate results.

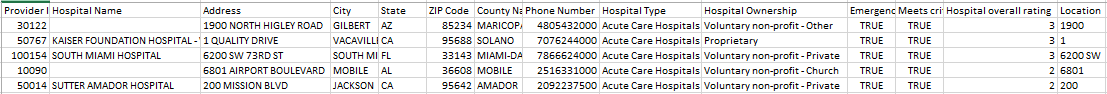

Missing Hospital Name – Firstly, from the HospInfo.csv data file we selected a block of data and created a new CSV file(Figure 1)and introduced a couple of missing values in that file to check if the missing values in the data are handled or not. The hospital names for some of the zip codes(36608, 85234) are left blank in the CSV file. When we run the application with the blank values in hospital names the system should address this issue and text with missing data should be highlighted as having missing data by adding phrases such as “hospital data not available” or “hospital name is not found or missing”. This ensures the reader of the text is aware that not all data was available when generating this text. In our scenario, this problem has not been systematically addressed in our NLG application. We noticed that the generated output text has ‘NaN’ values in place of missing data. Hence we raised an issue on GitHub for this.

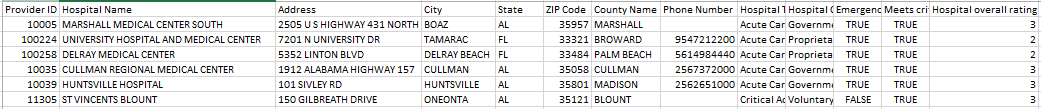

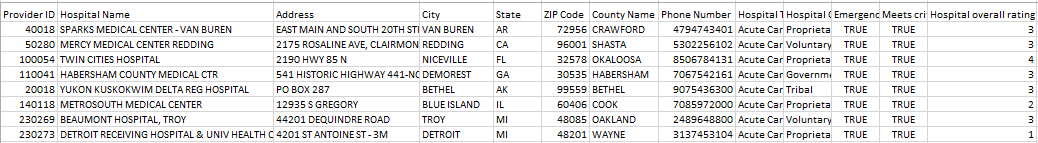

Missing Phone Number – We regularly encounter empty fields in data records either because it exists and was not collected or it never existed. To check if this kind of issue is handled or not we created a tiny dataset(Figure 2) with few records and introduced some missing values in the phone numbers for the zip codes(35957, 35121). Ideally, the algorithm should replace the missing phone numbers with text messages having phrases such as “Phone numbers not available for this hospital”. The system did not address this validation and we noticed that the generated output text has ‘NaN’ values in place of missing data. Hence we raised an issue.

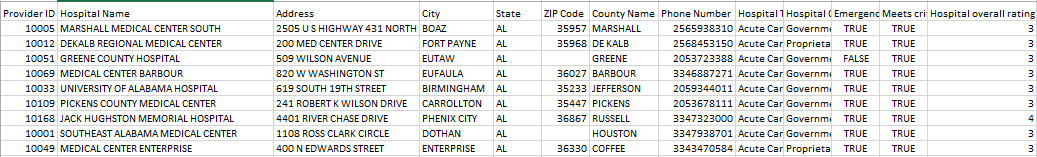

Verify Empty zip code – Later, we created a CSV file with about 10 records with empty zip code values in some of the records(Figure 3). For example:- The zip code 35462 is not available in the CSV file created, so when the user inputs this zip code, the expected output should be a summary expressed in fluent way with phrases like “There are no hospitals within a 20-mile radius of your zip code.” so that the user understands it clearly. Since the expected output is matching with the actual output here, we passed this test case.

In short, the system is not addressing the missing values in the data for some use cases. This may result in generating low-quality text summaries.

2. Validating the summary for correctness

As testers, we need to make sure that the generated summaries accurately reflect the true nature of the underlying data and do not make any misleading statements. This is especially important from an NLG perspective because, with large datasets, it may be impossible to read every generation and reasonable-sounding, but misleading, generations may slip through without proper validation. As users read the automatically generated summaries, any misleading information can affect their subsequent actions, having a real-world impact. Let us look at below scenarios.

Verify distance values – Distance between zips should be calculated and nearest zips must be returned for the given zip code. For example, we have created a CSV file with few zip codes(Figure 4) for this use case. For the zip code 35957, the summary generated is showing as “0.00 miles away“, which means the distance between the two hospitals is less than a mile. If this was a human-written summary, it would never talk this way. Instead, we would see summaries like “The hospital is less than a mile away…” or “The hospital is within a mile”. But if you look at the system generated summary it returns as “MARSHALL MEDICAL CENTER SOUTH is 0.00 miles away..” Even though 0.00 is a valid value, in this case, using it may be ambiguous. Instead, we can have phrases like ” The MARSHALL MEDICAL CENTER SOUTH hospital is less than a mile away…”. From a user perspective, this information is not sufficient and is not a good way of telling a story about the data. We raised an issue for this too.

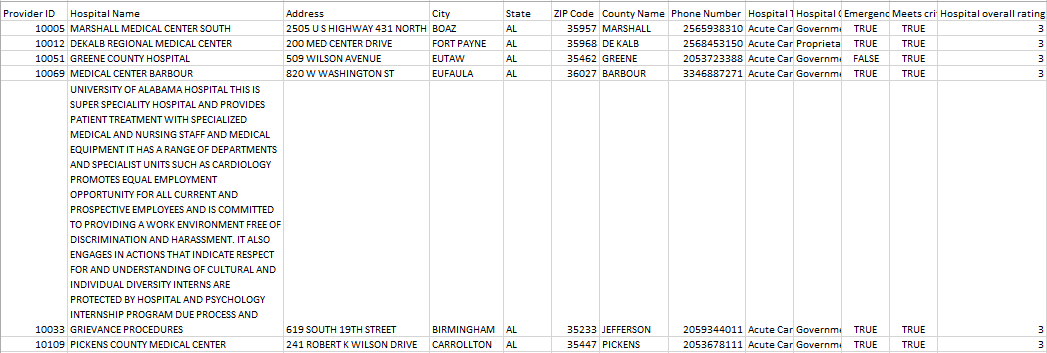

Check ‘Hospital Name’ too long value – It is very common that we have long strings of text but we will be interested only in certain part of data. One more data validation which we wanted to check is by giving long values in the ‘Hospital Name’ field. We created a CSV file(Figure 5) with few records with the ‘Hospital Name’ field having about 600 characters for zip code 35233. When we run the application with this zip code, the expected output is to either truncate the column names automatically and limit the column width when they exceed the maximum length (let’s say first 100 characters) or check the maximum length and just raise an exception saying “Hospital name too long”. In our case, the application neither truncated nor raised any exception about the column length hence raised an issue for this.

3. Verify opening lines in the summary

The summary should be as short and concise as possible. The opening lines are very important and can be tricky to write. To test the opening lines with different messages we created different blocks of synthetic data as described below.

Check zip code with no nearby hospitals – Firstly, we created a CSV file(Figure 6) with around 9 records out of which few zip codes didn’t have any hospitals within a 20-mile radius. For example zip code 99559 does not have any nearby hospitals. The expected output for such scenario is the summary generated should clearly state that “There are no hospitals within a 20-mile radius of your zip code”. The system generated summary gave us exactly the same results and hence this test case is passed.

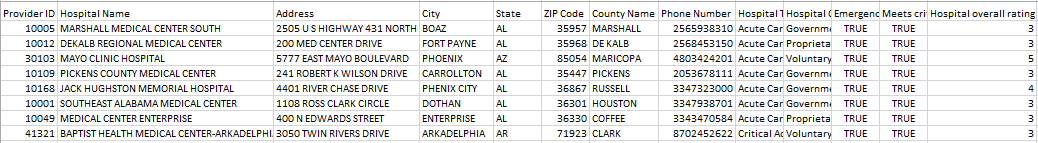

Check zip code with exactly one hospital – Secondly, as part of data validation testing, we created a CSV file(Figure 7) with some data which has few zip codes with exactly one hospital within the range. For example for the zip code 71923, there is only one hospital that is nearby. This check is to verify if the system generated summary would clearly state that the user has only one option to select and list out that option. The system generated the text summary stating that “You have only one hospital within a 20-mile radius of your zip code’ which seems to be matching with the expected output and hence this test case is passed.

Check multiple hospitals when the highest rated hospital is the closest hospital – There may be scenarios where the highest rated hospital may be the closest hospital. The text summary should give a clear context of this. To verify this we created a CSV file(Figure 8) which has few zip codes with multiple hospitals within the range. For example for the zip code 72118, there are three hospitals within the range. The highest rated hospital is also the closest hospital for this zip code. In this scenario, the expected summary should have phrases like “You have more than one option near you. Consider one of the following…” and list out the options available for the user to select. The output summary is as expected and hence this test case is passed.

Check multiple hospitals when the highest rated hospital is not the closest hospital – This is a case where the highest rated hospital is not the closest hospital and the text summary should clearly talk about this. For Eg: we created a sample dataset(Figure 9) with few zip codes in which for the zip code 85234 there are multiple options available for the user to select. The summary should mention the highest rated hospital details along with closest hospital details as shown here. “BANNER HEART HOSPITAL is the best-rated hospital near you … and BANNER GATEWAY MEDICAL CENTER is the closest to you..” and list out the options available for the user to select. The output summary is as expected and hence this test case is passed.

4. Verify the Lexical mappings

Verifying the problems that occur at the extremes or outside of normal data ranges or issues in the data, is a difficult task. For eg: In our case, the whole system is designed based on the hospital overall rating field. (Note: Some of the entries in this column is having ‘Not Available’ value. The algorithm replaced these values with ‘0’). Based on this field value(max rating is 5), the Lexical mapping(The choice of content words to appear in the final output text) is done accordingly in the code as shown below. The system is designed in such a way that the data is sorted based on the overall hospital rating and Distance(distance between the zips). Since users are more interested in knowing the nearest top-rated hospitals only the top three hospitals are reported in the summary. Based on the rating the keywords Highest’,’High’,’Medium’,’Low’,’Lowest’ is used in the summary. To check this scenario, we have created a bunch of CSVs with different hospital rating data.

self.lexical_mapping = {'5 overall rating': 'the highest overall rating', '4 overall rating': 'a high overall rating', '3 overall rating': 'a medium overall rating', '2 overall rating': 'a low overall rating', '1 overall rating': 'the lowest overall rating', 'has a 0 overall rating': 'does not have an available rating'} # Verbalize overall score |

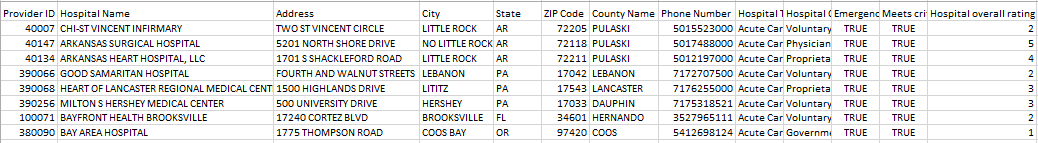

Check ‘the highest rating’ – Firstly, we created a test data(Figure 10) which has multiple zip codes with different hospital ratings. For eg: the zip code 72205 has the hospital overall rating of ‘5’ which is highest. This test is to check the lexical mapping for the highest scored hospital. The expected output summary should state something like “1. ARKANSAS SURGICAL HOSPITAL is 7.18 miles away and has the highest overall rating. Their listed phone number is 5017488000.” The system generated summary gave us exactly the same results and hence this test case is passed.

Check ‘the high rating’ – To check this scenario, in the above test data we have zip code 36867 which has a hospital overall rating as ‘4’. This test is to check the lexical mapping for the high scored hospital. The algorithm replaced it with text “WEDOWEE HOSPITAL is 0.00 miles away and has a high overall rating.” which is as expected.

Check ‘the medium rating’ – To check this scenario, we created a test data(Figure 11) in which a couple of zip codes are having hospital overall rating as ‘3’. For eg: the test data has a zip code of 17543 for which the algorithm replaced it with text “HEART OF LANCASTER REGIONAL MEDICAL CENTER is 10.31 miles away and has a medium overall rating. Their listed phone number is 7176255000.” which is as expected.

Check ‘the low rating’ – To check this scenario, we created a data with zip codes having hospital overall rating as ‘2’. For eg: the test data has a zip code of 34601 for which the algorithm replaced it with the text “BAYFRONT HEALTH BROOKSVILLE is 0.00 miles away and has a low overall rating. Their listed phone number is 3527965111.” which is as expected.

Check ‘the lowest rating’ – To check this scenario, we created a data with zip codes having hospital overall rating as ‘1’. For Eg: the test data has a zip code of 97420 for which the algorithm replaced it with the text “BAY AREA HOSPITAL is 0.00 miles away and has the lowest overall rating. Their listed phone number is 5412698124.” which is as expected.

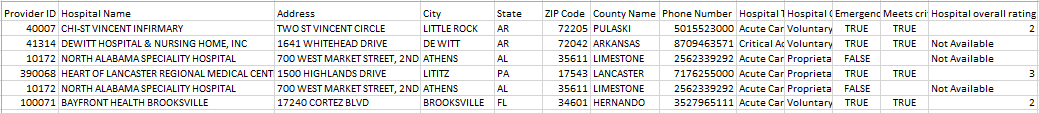

Check hospital unrated(0) – Since the algorithm is replacing the ‘Not Available’ values with ‘0’ we wanted to check the system behavior here. To check this scenario, we created a sample data(Figure 12) with some zip codes having hospital overall rating as ‘Not Available’. For eg: the test data has a zip code 72042 for which the rating is ‘Not Available’. The algorithm replaced it with text “DEWITT HOSPITAL & NURSING HOME, INC is 0.00 miles away and has 0 overall rating. Their listed phone number is 8709463571.” which is not as expected. The output summary should replace with the strings with human words. The lexical mapping should be mapped in such a way that it should report as “DEWITT HOSPITAL & NURSING HOME, INC is within a mile away and does not have an available rating”.

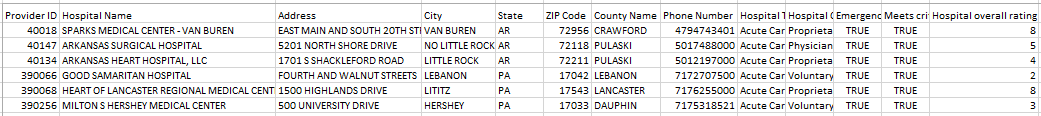

Check out of range for rating – To check this scenario, we created a sample dataset with around 6 records and introduced a new hospital overall rating ‘8’ in the test data. For example, for the zip code 72956(Figure 13) the rating is given as ‘8’ which is not defined in the code, it is common to report activity occurring between the range but the expected result here is to report the user that the data requested is not available or is ambiguous. This functionality is not checked in the code, the summary generated is “SPARKS MEDICAL CENTER – VAN BUREN is 0.00 miles away and has 8 overall rating. Their listed phone number is 4794743401.” Raised an issue for this.

In this way, we tested NLG web-application and raised a couple of defects. It’s important to first identify the output content and prioritize the use cases accordingly and test the efficiency of NLG application.

If you liked what you read, know more about Qxf2.

References

1)How do I Build an NLG System: Testing and Quality Assurance

2)Comparing Automatic and Human Evaluation of NLG Systems

3)Kaggle – Hospital general information

4)Generating Weather Forecast Texts with Case based Reasoning

5)Text Summarization in Python: Extractive vs. Abstractive techniques revisited

I am an experienced engineer who has worked with top IT firms in India, gaining valuable expertise in software development and testing. My journey in QA began at Dell, where I focused on the manufacturing domain. This experience provided me with a strong foundation in quality assurance practices and processes.

I joined Qxf2 in 2016, where I continued to refine my skills, enhancing my proficiency in Python. I also expanded my skill set to include JavaScript, gaining hands-on experience and even build frameworks from scratch using TestCafe. Throughout my journey at Qxf2, I have had the opportunity to work on diverse technologies and platforms which includes working on powerful data validation framework like Great Expectations, AI tools like Whisper AI, and developed expertise in various web scraping techniques. I recently started exploring Rust. I enjoy working with variety of tools and sharing my experiences through blogging.

My interests are vegetable gardening using organic methods, listening to music and reading books.

Hi Indira,

Very interesting post please can you also clarify if the validation of these test been done in a manual approach or automated way.

Regards,

Ashok

Ashok,

The validations were done using manual approach.