Many ML teams find it hard to move models into production. Even when they succeed, the models often fall short of customer expectations. For the few that do deploy a useful model, keeping it improving over time quickly becomes a struggle. Qxf2's AI/ML testing service is designed to address these challenges.

If you haven't worked with technical testers before, you might expect such complex technical issues to be beyond the scope of QA. But it's possible, and we've done it. Drawing on our experience with ML projects, we've developed a six-dimensional approach to help your team maintain model effectiveness and reliability over time. We also enable your data scientists and ML engineers to focus on tasks that best fit their expertise.

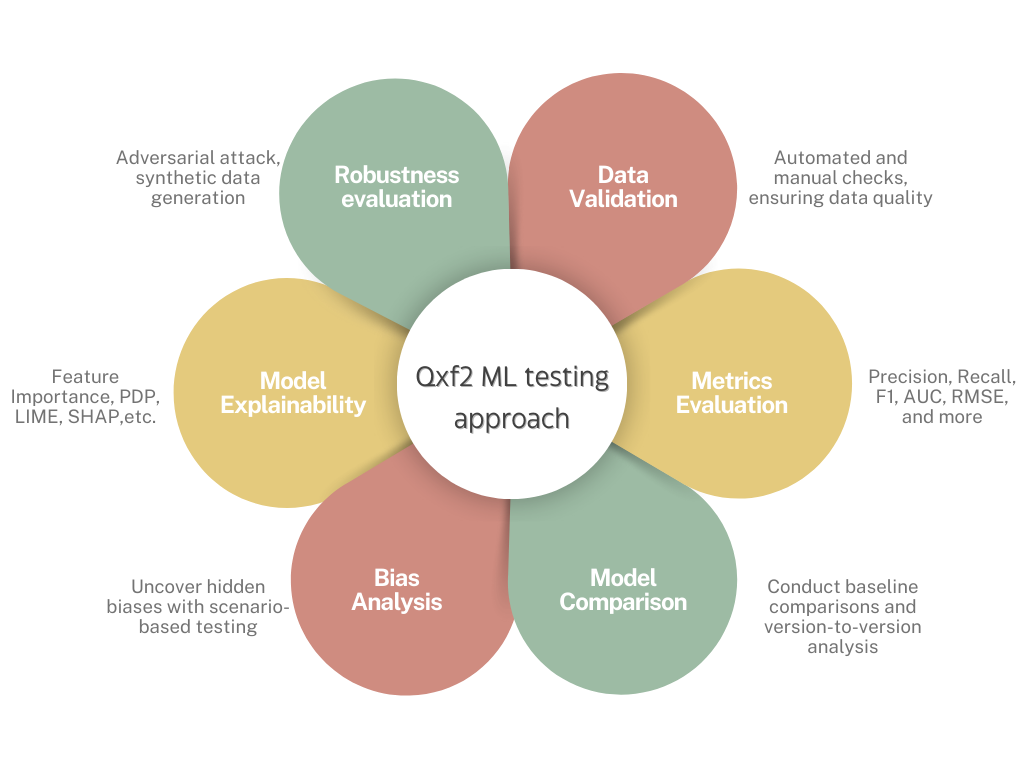

Your team (developers and data scientists) works with a QA engineer who is proficient in ML practices and has successfully managed multiple QA projects across various technologies. You benefit from data validation, insights into model decision-making, robustness evaluation against real-world scenarios, bias testing, metrics analysis, and intelligent model comparison. This approach enhances your existing testing pipeline and accelerates model improvement.

There are many sources of error that can cause models to miss user expectations, leading to a wide range of testing needs. Over time, we've honed our approach to focus on these key areas. Not all of these will be applicable to your project, so we tailor our approach to meet your specific needs.

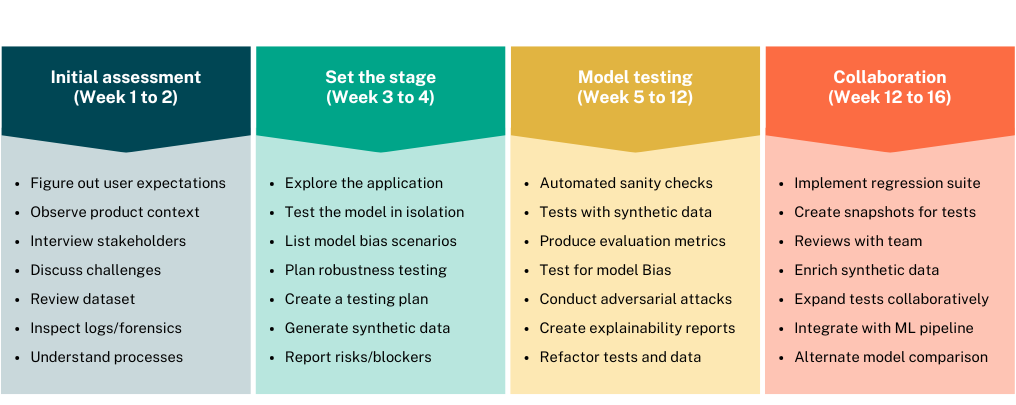

Our engagement process spans around 16 weeks and progresses through distinct phases tailored to your machine learning project. Each phase addresses a specific aspect of model evaluation, from initial assessment to intensive testing. Throughout, we work closely with you to refine and validate the model so that it meets real-world requirements and maintains high performance over time.

Our approaches are based on both research and hands-on experience: we've applied these techniques in our own projects. You can read about our work and findings in our blog posts.

Qxf2's QA engineers are highly technical, allowing us to seamlessly integrate with startups that need both speed and expertise in their testing. We provide advanced QA services tailored to the evolving needs of early-stage products.

Want to help your ML team with this service? Write to Arun ([email protected]) or drop a note.

© Qxf2 Services 2013 -