Startups make several false starts when trying to establish effective testing. We have established effective testing for many early-stage products, and we have listed the common steps that are likely to work at your startup too. Most of these steps are quick to implement and deliver high, sustained returns over time. We believe these steps apply to a large number of startups, but every startup has its own quirks. So treat this as a guide that testers with knowledge of your specific realities should override where needed.

PS: We recommend you hire us. But if that is not an option for you, refer to our DIY guides at the end of each step.

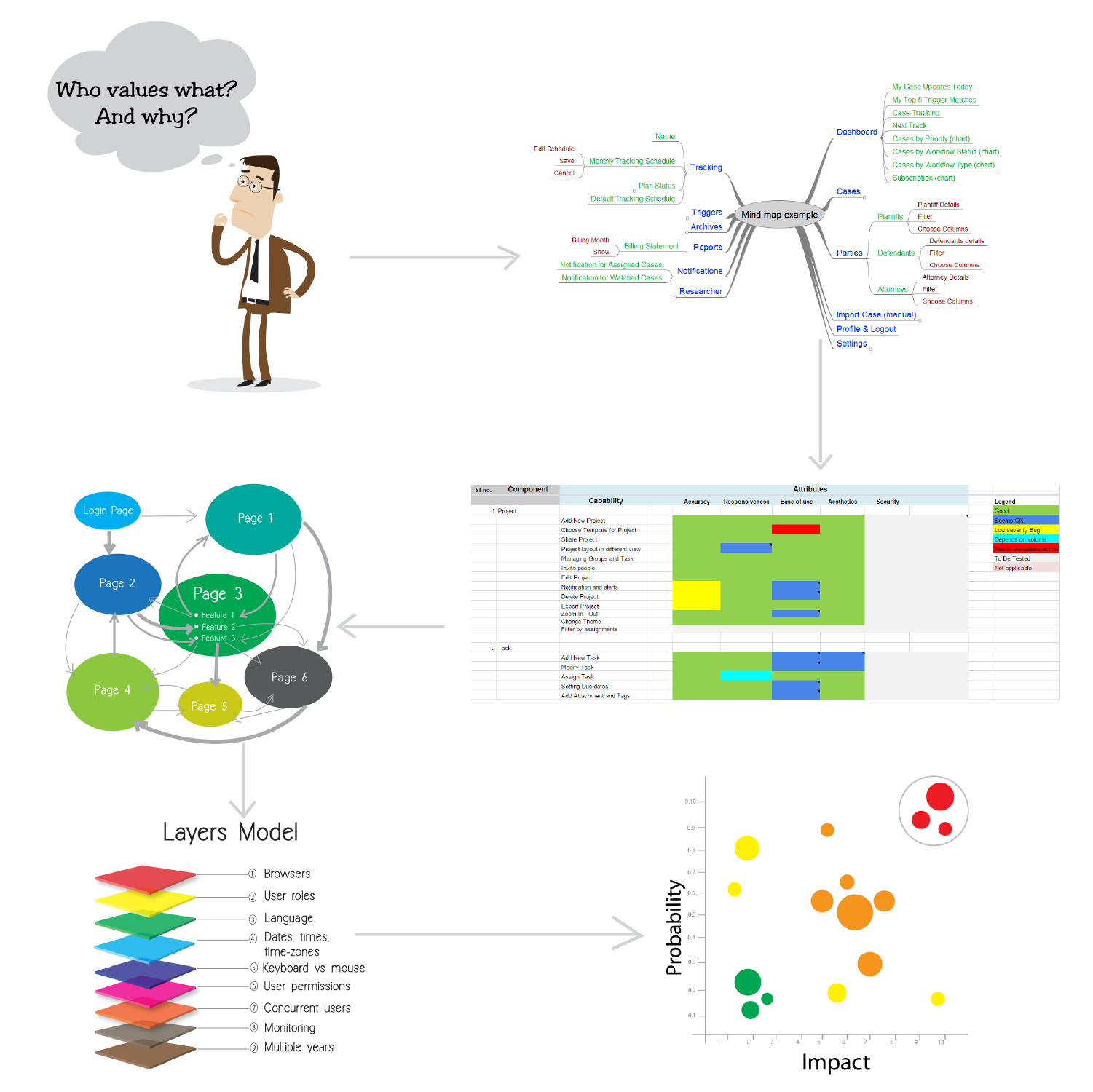

Deciding where to start testing is a daunting task, especially when you have been trading away testing cycles to fuel rapid development. Here is a technique that works well for us, even for products we barely know. We usually spend no more than 2 days on this step. At a high level, you need to figure out who interacts with your product, what each of them cares about and how much. Visualizing the product in several ways helps you identify suitable starting points. We use mind maps, the ACC model, workflow maps, a layers model and risk scatterplots. Do it yourself!
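Once each area of the product has been scored, reading starting points off a risk scatterplot boils down to simple arithmetic. The sketch below assumes a hypothetical 1 to 5 scale for how likely an area is to break and how much the people using it care; the area names and scores are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class ProductArea:
    """One area of the product, scored on a hypothetical 1-5 scale."""
    name: str
    likelihood: int  # how likely it is to break (1 = rarely, 5 = often)
    impact: int      # how much users care if it breaks (1 = barely, 5 = a lot)

    @property
    def risk(self) -> int:
        # Likelihood x impact: the two axes of a risk scatterplot, collapsed to one number
        return self.likelihood * self.impact


def starting_points(areas, top_n=3):
    """Return the top_n riskiest areas, highest risk first (ties broken by name)."""
    ranked = sorted(areas, key=lambda a: (-a.risk, a.name))
    return ranked[:top_n]


areas = [
    ProductArea("signup", likelihood=4, impact=5),
    ProductArea("billing", likelihood=3, impact=5),
    ProductArea("reports", likelihood=4, impact=2),
    ProductArea("settings", likelihood=2, impact=2),
]
for area in starting_points(areas):
    print(f"{area.name}: risk {area.risk}")
```

A single product of the two scores is crude, but it is quick to compute and easy to argue about with your team, which is what you want in the first couple of days.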

Ah! The QA tiers, the sandboxes, demo, staging, et al. To start, we recommend keeping things simple. Have a tier that (almost) mimics your production tier. Make it accessible to all employees. Make regular deploys to the test tier easy for everyone. For data-heavy applications, consider copying production data onto the test tier regularly. Once you have this going, you are on your way to spinning up test instances on demand!
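A regular production-to-test copy can be a small scheduled script (a nightly cron job, for example). This is a minimal sketch assuming SQLite purely for illustration, using the standard library's `Connection.backup`; on PostgreSQL or MySQL the equivalent would be `pg_dump`/`pg_restore` or `mysqldump`. The function names are hypothetical.

```python
import sqlite3


def refresh_test_data(prod: sqlite3.Connection, test: sqlite3.Connection) -> None:
    """Overwrite the test-tier database with a full copy of production."""
    # Connection.backup copies every page of the source database into the target,
    # replacing whatever the target held before
    prod.backup(test)


def refresh_from_files(prod_path: str, test_path: str) -> None:
    """Open production read-only, then copy it into the test-tier database file."""
    prod = sqlite3.connect(f"file:{prod_path}?mode=ro", uri=True)  # read-only URI
    test = sqlite3.connect(test_path)
    try:
        refresh_test_data(prod, test)
    finally:
        prod.close()
        test.close()
```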

The platforms, devices and browsers you test on should reasonably mirror the habits of your user base. Buy your team the mobile devices your users carry, and set them up with emulators and virtual machines. Do it yourself!
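One way to decide which platforms to mirror is to take the usage shares from your analytics and keep adding the most-used platform until you cover most of your users. This is a minimal sketch; the 90% target and the usage numbers are made-up assumptions, not recommendations.

```python
def pick_platforms(usage_share, target=0.9):
    """Pick the fewest platforms whose combined share of users reaches target.

    usage_share maps a platform label to its fraction of sessions (0.0-1.0),
    e.g. as exported from your web analytics tool.
    """
    chosen, covered = [], 0.0
    # Taking the most-used platforms first minimises how many you need
    for platform, share in sorted(usage_share.items(), key=lambda kv: kv[1], reverse=True):
        if covered >= target:
            break
        chosen.append(platform)
        covered += share
    return chosen, covered


# Hypothetical analytics numbers
usage = {
    "Chrome / Windows": 0.45,
    "Safari / iOS": 0.20,
    "Chrome / Android": 0.15,
    "Firefox / Windows": 0.08,
    "Safari / macOS": 0.07,
    "Edge / Windows": 0.05,
}
platforms, coverage = pick_platforms(usage)
print(platforms, f"{coverage:.0%}")
```

The resulting list tells you which real devices to buy and which emulators or VMs to set up first.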

Automate your acceptance criteria. Run them often. When you introduce automation, keep it light and simple. Do not build large frameworks before automating your acceptance tests. The key is to get into the habit of running tests regularly. We recommend starting with GUI automation, simply because GUI tests are visual and make an impact on people who have never seen automated test runs before. But as you progress, rely more on API automation and, where possible, unit tests.

The language(s) you choose for automation depend on a few factors. The popular options are usually the language you develop the product in or the language your test team knows best. Your choice will affect the size of your hiring pool at later stages, so do not choose something too esoteric. We suggest picking a scripting language like Python or Ruby. Many test tools support them and they are easy to pick up, so testers with no prior automation experience can learn and contribute relatively quickly. We have written many blog posts to help you get started with GUI, API and unit automation. Do it yourself!

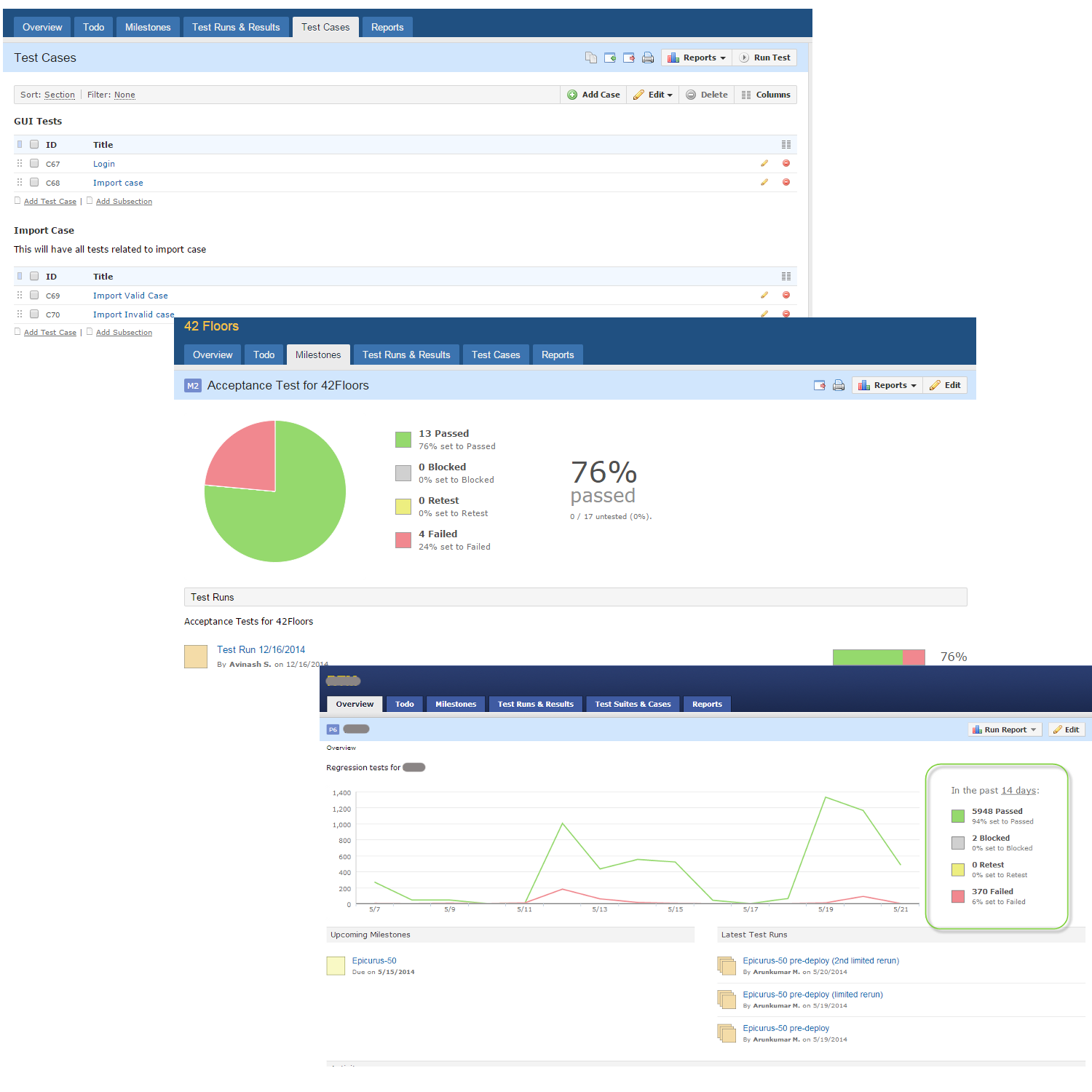

Store your test cases in a visual test case manager. Don't bother documenting cases in Word and then muddling through Excel macros to collect and report test results. Pay for a mature, visual test case manager. They are relatively cheap: about $25 per tester per month. We are fans of both TestRail and PractiTest; either will do nicely and scale very well. One of our clients went through a technical audit as part of their Series A funding round, and our use of TestRail made a very good impression on the auditors.

Do NOT go overboard documenting test cases. At this stage, a one-line description per test case is enough. You can flesh out the test cases as you learn more about the product. Have one section for the acceptance suite. Enhance your automation (it's super easy) to report results directly to your test case manager. Run acceptance tests regularly and share the reports with your colleagues. Make it a habit to keep notes on milestones and test runs within the test case manager. It will really help as you scale. Do it yourself!

At this stage, you have put together some minimal automation for your acceptance criteria, demoed it to your colleagues, run it often and gotten it to report to your test case management tool. A logical next step is to let anyone in the company run it. Configure a job on your CI server that lets everyone kick off an automation run. Implementing this step early will not only get you feedback quickly but will also clearly flag undesirable trends, such as your GUI automation becoming flaky or tests taking too long to run. Like every other step in this guide, you can expect high, sustained returns from a very low time investment. Do it yourself!

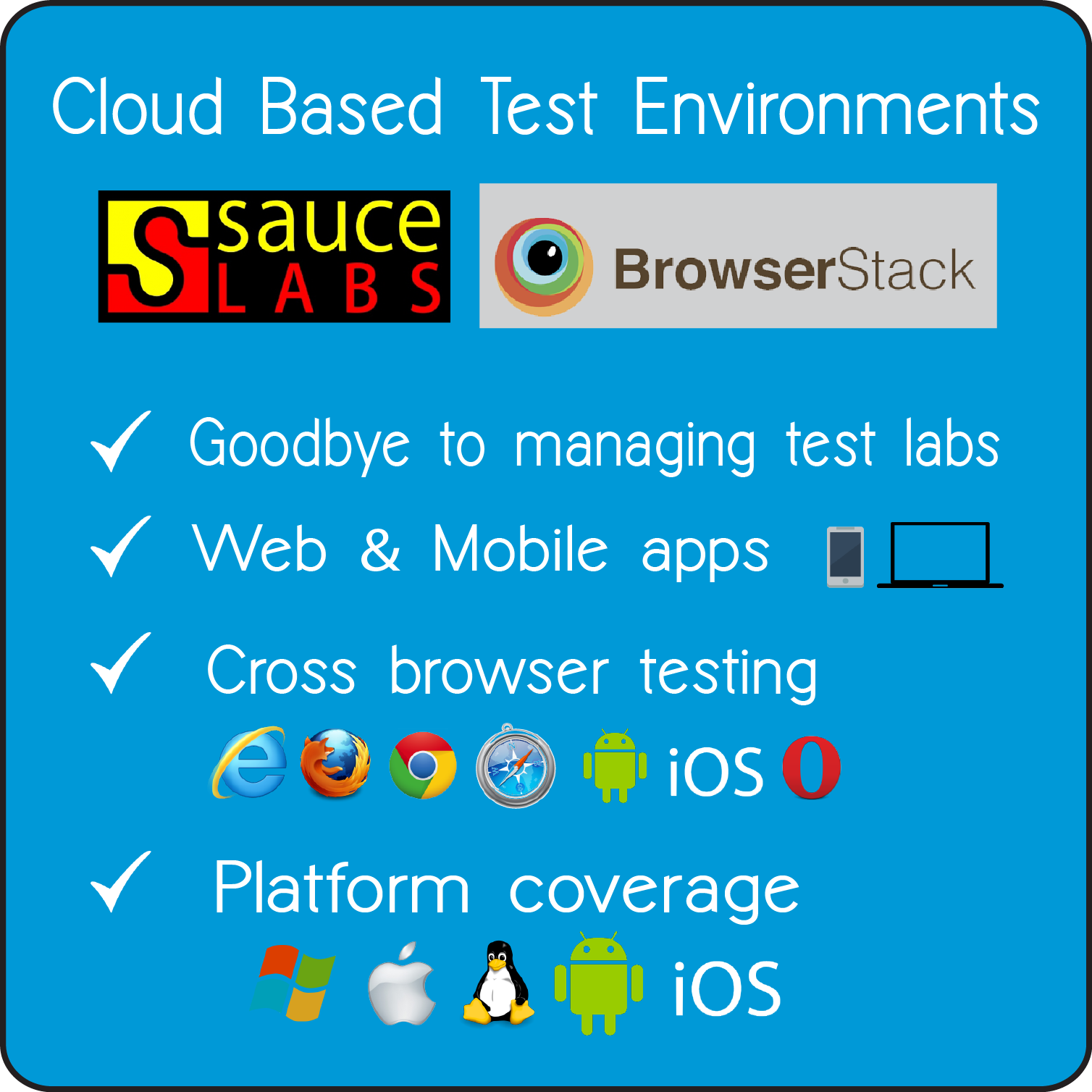

This is another time-saving step that repays the time and money you invest in it many times over. Give your employees ready access to cloud-based platforms for testing across browsers, operating systems and devices. At Qxf2, we love both Sauce Labs and BrowserStack. Both are easy to use and offer an excellent selection of browsers, devices and operating systems. Pay for both the 'manual' (~$20/month) and 'automation' (~$50/month) plans. They will save you a lot of time!

Hook up your automation to run on the cloud. It takes roughly a dozen lines of code! For your most common browser/device/OS combination, make sure everyone has a VM on hand. If you are primarily an Apple shop and the vast majority of your user base is on Windows, we recommend using Modern IE. Do it yourself!
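Here is a sketch of that hook-up using Selenium 4's `webdriver.Remote` with W3C capabilities. The hub URLs and the vendor option keys (`sauce:options`, `bstack:options`) reflect the vendors' documentation as we understand it, so confirm them against your account; the `CLOUD_USER`/`CLOUD_KEY` environment variables are hypothetical, and only Chrome is handled to keep it short.

```python
import os

# Hub URLs per vendor docs at the time of writing; confirm against your account
HUBS = {
    "saucelabs": "https://ondemand.us-west-1.saucelabs.com/wd/hub",
    "browserstack": "https://hub-cloud.browserstack.com/wd/hub",
}


def remote_config(provider, browser_version="latest", platform="Windows 10"):
    """Return (hub_url, capabilities) for a W3C remote Chrome session."""
    user = os.environ.get("CLOUD_USER", "")  # hypothetical variable names
    key = os.environ.get("CLOUD_KEY", "")
    caps = {"browserName": "chrome", "browserVersion": browser_version}
    if provider == "saucelabs":
        caps["platformName"] = platform
        caps["sauce:options"] = {"username": user, "accessKey": key}
    elif provider == "browserstack":
        os_name, _, os_version = platform.partition(" ")  # "Windows 10" -> "Windows", "10"
        caps["bstack:options"] = {"os": os_name, "osVersion": os_version,
                                  "userName": user, "accessKey": key}
    else:
        raise ValueError(f"unknown provider: {provider}")
    return HUBS[provider], caps


def start_driver(provider, **kwargs):
    """Open a remote browser session (requires `pip install selenium`, v4+)."""
    # Imported lazily so remote_config can be used and tested without Selenium installed
    from selenium import webdriver

    hub, caps = remote_config(provider, **kwargs)
    options = webdriver.ChromeOptions()
    for name, value in caps.items():
        options.set_capability(name, value)
    return webdriver.Remote(command_executor=hub, options=options)
```

Your existing tests then use the driver returned by `start_driver("browserstack")` or `start_driver("saucelabs")` instead of a local one; nothing else in the tests needs to change.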

We are listing this point here because every one of our prospects has asked us about it. The keys are to communicate clearly, take responsibility and hit a regular rhythm for deploys. Before a deploy, test and close the changes in the build. Then run whatever little automation you have. Next, perform some exploratory testing. If you find issues at any step, announce them to the team and inform your stakeholders. When you are satisfied, explicitly sign off on a common broadcast channel.
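The pre-deploy routine can be encoded as a small checklist gate that refuses to produce a sign-off until every step is done and no issues are open. This is a minimal sketch; the step wording, build label and webhook URL are hypothetical, and the `{"text": ...}` payload is the shape Slack incoming webhooks accept.

```python
import json
import urllib.request

# The pre-deploy steps, in order (wording is illustrative)
STEPS = (
    "changes in the build tested and closed",
    "automation run",
    "exploratory testing done",
)


def signoff_message(build, done, issues=()):
    """Compose the sign-off (or hold) announcement for a build.

    done: the set of STEPS completed; issues: short descriptions of open problems.
    """
    missing = [step for step in STEPS if step not in done]
    if missing or issues:
        lines = [f"HOLD: build {build} is not signed off."]
        lines += [f"- pending: {step}" for step in missing]
        lines += [f"- issue: {issue}" for issue in issues]
        return "\n".join(lines)
    return f"SIGN-OFF: build {build} passed all pre-deploy checks. Good to deploy."


def post_to_webhook(url, text):
    """POST the message as {"text": ...} JSON to a chat webhook; returns the HTTP status."""
    req = urllib.request.Request(url, data=json.dumps({"text": text}).encode("utf-8"),
                                 headers={"Content-Type": "application/json"}, method="POST")
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.status
```

The point is not the tooling but the explicit, visible statement from a person: the message names the build and says plainly whether it is good to go.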

Discuss deploy timing with your group, and remember to include your support staff. If your product has not received testing love for a long time, the first few deploys will likely contain nasty surprises that leave your support staff firefighting. So involve them in the discussion. After the deploy, do some spot checks and again explicitly announce that you think the deploy looks good. Larger companies may not find this sort of process very useful; they can automate the entire process away. But at a startup, be present! Having a human explicitly take responsibility builds trust and calms frayed nerves. Patch processes are similar, except that you should also conduct a post-mortem and decide how to prevent the same bug from recurring.

This tip is for startups that are valiantly trying to manage their own testing without hiring professional testers. Chances are your people catch bugs that directly contradict the explicit requirements. However, you are probably dealing with the pain of missed implicit requirements as well as regressions in related workflows. When questioned, your employees simply say "It wasn't in the requirements!". This is a classic symptom of mistaking testing for an activity that closely follows a fixed script. You are treating testing like 'checking'. So here is an analogy to fight the misconception.

These eight steps lay a solid foundation for testing at your startup. They will remove barriers and amplify the effect of good testing. Of course, you still need to test well. If you need help, you can always contact us. Good luck!

© Qxf2 Services 2013 -